For those too young to have seen the James Bond movie Goldfinger - meet Oddjob, the evil henchman.

His hat had a deadly, razor-sharp blade in the brim. This was one frisbee you did not want to catch.

EDIT:

It seems the servos now attach in Nixie 1.1.408, EXCEPT for the torso servos, which are still AWOL and have to be attached manually.

I have been running multiple tests, first in 1.1.395 and now in 1.1.396, to find out which servos appear attached but act as if they are not. After about 30 tests, I found that it is always the same servos.

Hello Captains,

When running the InMoov service (the same error occurs with InMoov2) in Nixie 1.1.394, I get googlesearch answering in my real locale (Fr), although I have changed the locale to en-US via runtime.

Note that I have also changed i01.ear, i01.mouth and i01.chatbot to en-US, and i01.chatbot.search still returns the text answer in French while speaking it with the English voice.

Ahoy Kwatters !

I was wondering about the differences between these 3 and the plan going forward for them.

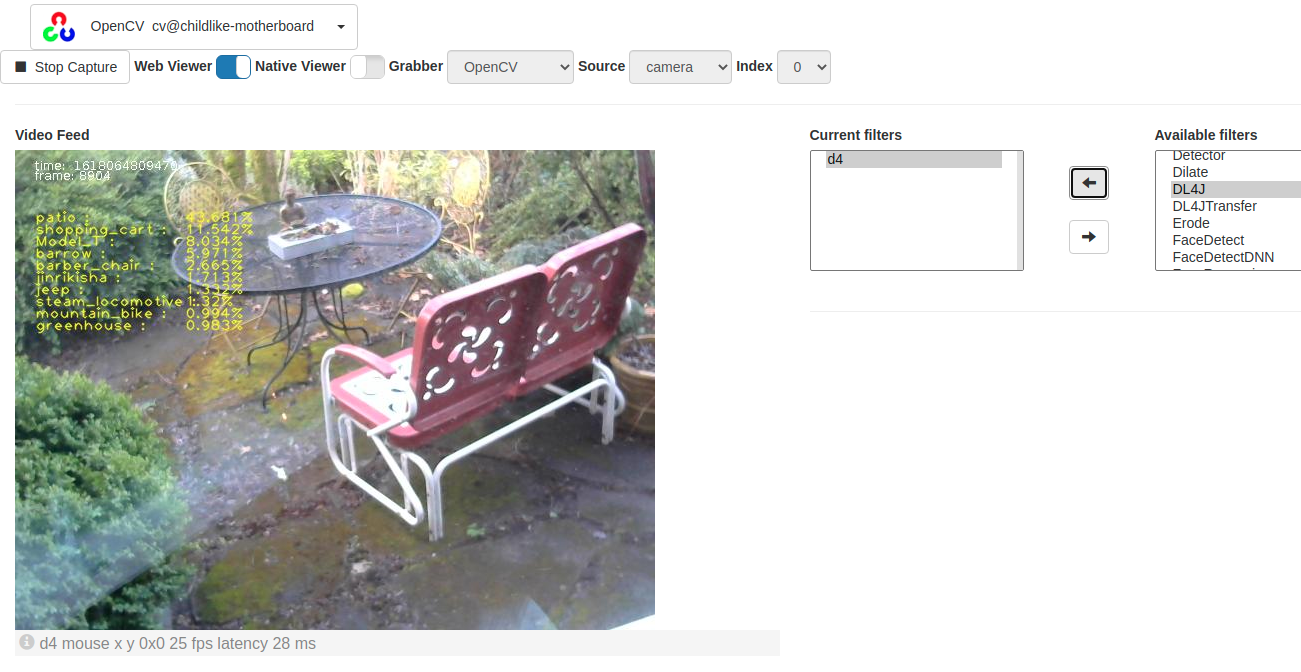

OpenCV DL4J Filter - Worky !

OpenCV Yolo Filter - Worky !

Deeplearning4J Service has no UI in Swing or web - but I can see the filter spawns the service

I have been working with Nixie and Windows 10 and on various occasions needed a running tally of the log file. In Linux/Unix this is easy: just use the tail command and Bob's yer uncle, as they say.
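For anyone who has Python handy, the "running tally" that tail gives you can be roughly sketched as below. This is only a minimal sketch, not the approach used in this post: the function name `follow` and its parameters `poll_seconds`, `max_idle_polls` and `from_start` are made up for illustration, and it simply polls the file for new lines.

```python
import os
import time


def follow(path, poll_seconds=0.5, max_idle_polls=None, from_start=False):
    """Yield lines from a text file as they are appended (roughly `tail -f`).

    All parameter names are hypothetical, chosen for this sketch:
    - poll_seconds: how long to wait when no new data is available
    - max_idle_polls: stop after this many empty polls in a row
      (None means keep following forever)
    - from_start: if True, also yield the lines already in the file
    """
    with open(path, "r", encoding="utf-8", errors="replace") as fh:
        if not from_start:
            # Skip existing content and wait only for new lines.
            fh.seek(0, os.SEEK_END)
        idle = 0
        while max_idle_polls is None or idle < max_idle_polls:
            line = fh.readline()
            if line:
                idle = 0
                yield line.rstrip("\n")
            else:
                # Nothing new yet: wait a little and try again.
                idle += 1
                time.sleep(poll_seconds)
```

Typical use would be `for line in follow("myrobotlab.log"): print(line)`, where the log file name is an assumption and should be replaced with the actual path of your log. A line that is only partly written when it is read may come out in two pieces, which is usually acceptable for watching a log by eye.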

That quest wasn't as straightforward in Windows, but Windows PowerShell [PS] comes to the rescue. PS is very powerful, but out of the box it comes with restrictions. To be able to run scripts, you have to tell PS that it is OK to trust local scripts. To do this, run PS in admin mode, then execute the following command:

This is not so much a question as a passing on of a tip.

Hi Folks

A quick introduction and some questions. My robot is called Kryten, after the humanoid robot in the Red Dwarf series. He is pretty much a standard Gael build, with the addition of the articulating stomach.

He stands on a mannequin leg set liberated from a local department store, which makes him, ohh, about 6 foot 8 inches tall.

For those of you who implemented Bob Houston's Articulating Stomach, how are you driving this from MRL?

Getting ready to hook up this servo pair, scratching my head...