I finally took the time to finish making the Wall Lamp from the Red Bull 2013 Creation Contest.

The next version, 'Nixie', is coming soon!

Hi,

I have an HC-05 Bluetooth module, an Arduino, a Bluetooth dongle, a chassis with DC motors and a driver, and a DC supply. Now I want to track my robot from a camera mounted on the ceiling of the room. As we discussed in the shout box, the robot first needs to be mapped.

UPDATE 06.19.13

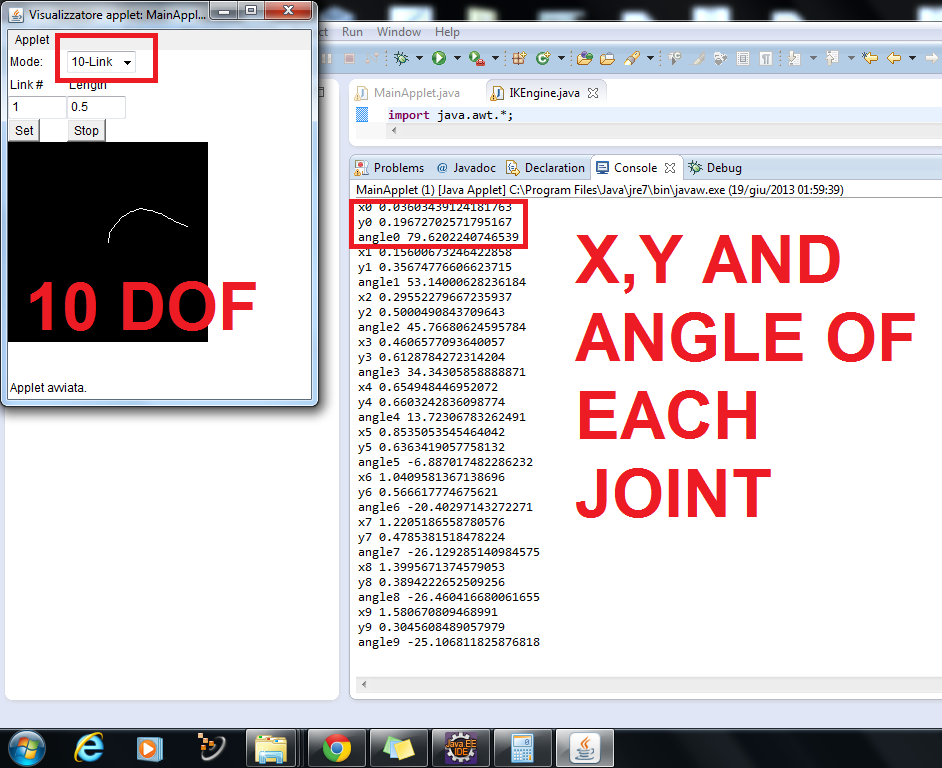

GET RID OF YOUR 2 DOF INVERSE KINEMATICS ENGINE!!!!

NOW WE HAVE THE 10 DOF ENGINE :D X, Y AND ANGLE OF EACH JOINT :D

UPDATE 06.15.2013


Attempts to find humans through OpenCV facedetect. Uses a pan/tilt kit and LKOptical track points to track the human after detection.

So far you have to use Eclipse and edit the FindHuman.java file in the service directory to set your Arduino pin, COM port settings, etc. Then start it like you would any other MRL Service.

I feel like this is a dumb question, but I am going to ask it anyway.

I have been playing around with the vision examples that come with MRL. These are very cool and work well. The OpenCV GUI is very nice and friendly. It is what brought me to MRL. Playing with faceTracking.py, I see that the location and size of the box are printed to the Python window. My question is this: how would one send this data across a serial port?
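
One possible approach, sketched here in plain Python rather than with any MRL-specific call: format the box as a single comma-separated ASCII line and write those bytes to the port. The function names (`encode_box`, `decode_box`, `send_box`) and the `x,y,width,height` line format are assumptions made up for this illustration, not part of faceTracking.py or MRL. An in-memory buffer stands in for the serial port so the sketch runs without hardware.

```python
import io


def encode_box(x, y, width, height):
    """Format a bounding box as one ASCII line, e.g. b'120,80,64,64\\n'.

    The comma-separated layout is an assumption chosen for this sketch.
    """
    text = "{},{},{},{}\n".format(int(x), int(y), int(width), int(height))
    return text.encode("ascii")


def decode_box(line):
    """Parse a line produced by encode_box back into four integers."""
    x, y, w, h = (int(v) for v in line.decode("ascii").strip().split(","))
    return x, y, w, h


def send_box(port, x, y, width, height):
    """Write one box to any object that has a write(bytes) method."""
    data = encode_box(x, y, width, height)
    port.write(data)
    return data


# Stand-in for a real serial port (hypothetical values for the box).
fake_port = io.BytesIO()
send_box(fake_port, 120, 80, 64, 64)
```

With real hardware, `fake_port` could be replaced by any object whose `write` method accepts bytes, for example a port opened with the pyserial package; the port name and baud rate depend on your setup. On the receiving side, the microcontroller would read up to the newline and split on commas.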

On many robots, when they move their arm, it tends to sway back and forth as the motion stops. Do you use some sort of sensor system and active control to compensate for this swaying?

Joe Dunfee
