Arm needs development. The proposal is to have a service where lengths, joints and limits can be specified and forward or inverse kinematics can be used to predict or plan spatial location.

```python
# start the service
arm = runtime.start("arm", "Arm")
```
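The Arm service is only proposed, so the following is not the MRL Arm API. It is a minimal, hypothetical sketch of what "lengths, joints and limits" plus forward and inverse kinematics could mean for a planar (2D) serial arm. It assumes every joint is a rotation, link lengths are in arbitrary units, and angles are in radians. The function names `forward_kinematics` and `inverse_kinematics_2link` are illustrative only. Forward kinematics predicts where the tip ends up while enforcing per-joint limits; inverse kinematics plans joint angles for a two-link arm using the standard law-of-cosines solution.

```python
import math


def forward_kinematics(lengths, angles, limits):
    """Predict the (x, y) end-effector position of a planar serial arm.

    lengths: link lengths; angles: joint angles in radians, each relative
    to the previous link; limits: (min, max) in radians for each joint.
    """
    if not (len(lengths) == len(angles) == len(limits)):
        raise ValueError("lengths, angles and limits must have the same size")
    x = y = 0.0
    heading = 0.0  # absolute orientation of the current link
    for length, angle, (low, high) in zip(lengths, angles, limits):
        if not low <= angle <= high:
            raise ValueError("joint angle %.3f outside limits [%.3f, %.3f]" % (angle, low, high))
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return x, y


def inverse_kinematics_2link(l1, l2, x, y):
    """Plan (shoulder, elbow) angles that put a two-link planar arm at (x, y).

    Uses the law of cosines and returns the solution with the elbow in [0, pi].
    """
    d2 = float(x * x + y * y)
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if cos_elbow < -1.0 or cos_elbow > 1.0:
        raise ValueError("target (%.3f, %.3f) is out of reach" % (x, y))
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow), l1 + l2 * math.cos(elbow))
    # wrap the shoulder angle into [-pi, pi]
    shoulder = math.atan2(math.sin(shoulder), math.cos(shoulder))
    return shoulder, elbow
```

For example, with two unit-length links, `forward_kinematics([1.0, 1.0], [0.0, math.pi / 2], ...)` places the tip at roughly (1, 1), and `inverse_kinematics_2link(1.0, 1.0, 1.0, 1.0)` recovers angles of about (0, pi/2). A real arm service would also need to check the planned angles against the joint limits and pick between the two elbow solutions.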


This is a simple "empty" scene; the camera is pointing at the end of a table.

For many years I have wanted to build a robot, and with a lot of help I am trying to do it.

```python
#########################################
# Pid.py
# categories: pid
# more info @: http://myrobotlab.org/service/Pid
#########################################
import time
import random

pid = runtime.start("test", "Pid")

# two independent PID loops, keyed "pan" and "tilt" (Kp=1.0, Ki=0.1, Kd=0.0)
pid.setPid("pan", 1.0, 0.1, 0.0)
pid.setSetpoint("pan", 320)
pid.setPid("tilt", 1.0, 0.1, 0.0)
pid.setSetpoint("tilt", 240)

# feed 200 rounds of random simulated input into both loops, 0.1 s apart
for _ in range(0, 200):
    pan_input = random.randint(200, 440)
    pid.compute("pan", pan_input)
    tilt_input = random.randint(200, 440)
    pid.compute("tilt", tilt_input)
    time.sleep(0.1)
```

```yaml
!!org.myrobotlab.service.config.PidConfig
data: { }
listeners: null
peers: null
type: Pid
```

A PID service computes an output in relation to its input, which makes it useful for tracking. It is currently used as one of the tracking strategies in the Tracking service: input is sent to the PID, its "compute" method is called, and the resulting output is sent to a servo.
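To make the "compute" step concrete, here is a textbook discrete PID controller written in plain Python. It is a hedged sketch, not the MRL Pid service's source: the class name `SimplePid` and the fixed `dt` argument are assumptions for illustration. The error is the setpoint minus the measured input, and the output is the weighted sum of that error, its running integral, and its rate of change.

```python
class SimplePid(object):
    """Textbook discrete PID: output = kp*e + ki*integral(e dt) + kd*de/dt."""

    def __init__(self, kp, ki, kd, setpoint):
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.setpoint = setpoint
        self.integral = 0.0
        self.prev_error = None  # no derivative term until a second sample arrives

    def compute(self, measured, dt=0.1):
        """Return the control output for one input sample taken dt seconds after the last."""
        error = self.setpoint - measured
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# same gains and setpoint as the "pan" loop in Pid.py
pan = SimplePid(1.0, 0.1, 0.0, 320)
print(pan.compute(300))  # error 20, integral 2.0
```

With Kp=1.0, Ki=0.1 and Kd=0.0, an input of 300 against a setpoint of 320 gives 20 + 0.1 × 2.0, or about 20.2. In a tracker, that output would be turned into a servo correction.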


My goal is simply to become more familiar with MRL.

I was trying to replicate your sample tutorial and seem to be having some trouble.

I am interested in getting speech working for a simple project: face recognition, with a spoken comment when a face is recognized.

For now, though, I would just like to get the example working.

Here is a screenshot of what I have done.

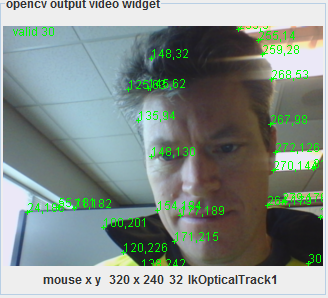

Yay! The LKOptical tracking filter is tracking 30 points simultaneously! The Tracking service can proceed... Wooh, about time! I still need to write up a bug for JavaCV, but the filter works around it.


Added an output panel, which allows recording a video stream or individual frames from the output stream.

```python
#########################################
# ThingSpeak.py
# more info @: http://myrobotlab.org/service/ThingSpeak
#########################################
from time import sleep

# virtual=1
comPort = "COM12"

# start optional virtual arduino service, used for internal test
if 'virtual' in globals() and virtual:
    virtualArduino = runtime.start("virtualArduino", "VirtualArduino")
    virtualArduino.connect(comPort)
# end used for internal test

readAnalogPin = 15

arduino = runtime.start("arduino", "Arduino")
thing = runtime.start("thing", "ThingSpeak")

arduino.setBoardMega()  # setBoardUno | setBoardNano
arduino.connect(comPort)
sleep(1)

# update the gui with configuration changes
arduino.broadcastState()

thing.setWriteKey("AO4DMKQZY4RLWNNU")

# start the analog pin sample to display
# in the oscope
# decrease the sample rate so queues won't overrun
# arduino.setSampleRate(8000)

# callback: forward each published pin reading to ThingSpeak
def publishPin(pins):
    for pin in pins:
        thing.update(pin.value)

arduino.addListener("publishPinArray", python.getName(), "publishPin")
arduino.enablePin(readAnalogPin, 1)
```
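The script hands each pin reading to `thing.update(...)`. How the MRL ThingSpeak service sends that value has not been checked here; this is only a hedged sketch of the public ThingSpeak channel-update request it presumably wraps, where the write key and one field value (channels have fields 1 to 8) are passed as query parameters to the `update` endpoint. The helper `build_update_url` is hypothetical.

```python
try:
    from urllib.parse import urlencode  # Python 3
except ImportError:
    from urllib import urlencode  # Python 2 / Jython

THINGSPEAK_UPDATE_URL = "https://api.thingspeak.com/update"


def build_update_url(write_key, value, field=1):
    """Build a ThingSpeak channel-update URL that sets one field to value."""
    if not 1 <= field <= 8:
        raise ValueError("ThingSpeak channels have fields 1 to 8")
    # a list of pairs keeps the parameter order stable
    query = urlencode([("api_key", write_key), ("field%d" % field, value)])
    return THINGSPEAK_UPDATE_URL + "?" + query


print(build_update_url("YOUR_WRITE_KEY", 512))
```

Sending it is then a single HTTP GET, for example with `urllib.request.urlopen(url)` on Python 3; the sketch leaves the network call out so it can be tried offline.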

```yaml
!!org.myrobotlab.service.config.ServiceConfig
listeners: null
peers: null
type: ThingSpeak
```

If your system has many parts, it is sometimes nice to detach their tabs and arrange them in the most convenient way for how you're working. For example, the InMoov service may have up to 22 servos. When capturing gestures, it's nice to have all the relevant service tabs arranged so that gestures can be tweaked and code can be modified.


Debounce

Implemented digital pin debounce based on this. It inserts a 50 ms wait after a state change on a digital line. Needs testing.

It should help with encoders, bumpers, and other bouncy switches, although remember, boys & girls, you should always try to clean up your signal at the source :)
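The debounce itself lives on the board and is not shown here. As a hedged illustration of the same idea, this is a generic lockout debounce in plain Python: once a state change is accepted, further changes are ignored for a 50 ms wait. The `Debouncer` class and its millisecond-timestamp interface are hypothetical, not MRL code.

```python
class Debouncer(object):
    """Lockout debounce: after an accepted change, ignore changes for wait_ms."""

    def __init__(self, wait_ms=50, initial_state=0):
        self.wait_ms = wait_ms
        self.state = initial_state
        self.last_change_ms = None  # time of the last accepted change

    def update(self, raw_state, now_ms):
        """Feed a raw reading taken at now_ms; return True if it is an accepted change."""
        if raw_state == self.state:
            return False
        if self.last_change_ms is not None and now_ms - self.last_change_ms < self.wait_ms:
            return False  # still inside the wait window: treat it as bounce
        self.state = raw_state
        self.last_change_ms = now_ms
        return True


# a switch closing at t=0 ms and bouncing before settling open again
readings = [(0, 1), (2, 0), (5, 1), (30, 0), (60, 0)]
d = Debouncer()
print([t for t, value in readings if d.update(value, t)])
```

Only the edges at 0 ms and 60 ms are reported; the bounces at 2 ms and 30 ms fall inside the wait window. A lockout like this reports the first edge immediately, at the cost of missing a genuine change that arrives within 50 ms of the previous one.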

Digital Trigger Only