Hello again, I need help. I'm using this code:

opencv = Runtime.createAndStart("opencv","OpenCV")
opencv.addFilter("PyramidDown")
opencv.addFilter("FaceDetect")
opencv.setDisplayFilter("FaceDetect")
opencv.addListener("publishOpenCVData", python.name, "input")

def input():
    data = msg_opencv_publishOpenCVData.data[0]
    bb = data.getBoundingBoxArray()
    print 'opencv data ', bb
    for rect in bb:
        print "face found in rectangle ", rect.x, rect.y, rect.width, rect.height

Sadly, I can't get any data in Python / the Python tab.

I clicked on capture and the camera shows the red box, but I can't see what I'm doing wrong because nothing is printed.

I still think it's a simple problem, so any tip would be great.

It seems that input receives no data from the camera.

Thanks

old way of passing data in python

It looks like this script is really out of date. It's using the old style syntax for passing data into python callbacks. Is this a script that is checked in somewhere?

Try changing:
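
A minimal sketch of what the newer callback style might look like, assuming the listener passes the published OpenCVData object directly to the callback as an argument instead of through a global `msg_opencv_publishOpenCVData` variable. The stub classes `FakeRect` and `FakeOpenCVData` and the callback name `on_opencv_data` are hypothetical stand-ins so the handler can be run outside MyRobotLab; only `getBoundingBoxArray()` and the `x`, `y`, `width`, `height` fields come from the script above.

```python
class FakeRect(object):
    """Hypothetical stand-in for one bounding box from the FaceDetect filter."""
    def __init__(self, x, y, width, height):
        self.x, self.y, self.width, self.height = x, y, width, height


class FakeOpenCVData(object):
    """Hypothetical stand-in for the object published by publishOpenCVData."""
    def __init__(self, rects):
        self._rects = rects

    def getBoundingBoxArray(self):
        return self._rects


def on_opencv_data(data):
    """Assumed new-style callback: the data arrives as a parameter."""
    found = []
    # Treat a missing array (no face in this frame) as an empty list
    for rect in data.getBoundingBoxArray() or []:
        print("face found in rectangle %s %s %s %s"
              % (rect.x, rect.y, rect.width, rect.height))
        found.append((rect.x, rect.y, rect.width, rect.height))
    return found


# Inside MyRobotLab the hookup would presumably stay as in the script above:
# opencv.addListener("publishOpenCVData", python.name, "on_opencv_data")
faces = on_opencv_data(FakeOpenCVData([FakeRect(10, 20, 30, 40)]))
```

The callback is deliberately not called `input`, since that name shadows a Python built-in.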

That's it, thank you (I

That's it, thank you.

(I reworked the code from a post in the forum, so it could be out of date.)

Many thanks to you.

Hello again it seems I found

Hello again,

it seems I found some code that has problems, and they look like the same syntax problems I had last time:

https://code.google.com/p/myrobotlab/source/browse/trunk/myrobotlab/src…

Can somebody point out what's wrong in there?

Thank you

Hey Jay... That's the old

Hey Jay... That's the old repository, so there is only old stuff over there.

Here is where you can get the new, working stuff:

https://github.com/MyRobotLab/pyrobotlab?files=1

For face tracking in particular, I suggest this script:

https://github.com/MyRobotLab/pyrobotlab/blob/master/toSort/Tracking.fa…

If you want there is a much

If you want, there is a much lighter script for face tracking, but it uses the InMoov head, so the tracking information is a bit more hidden. Let me know.

The Service pages should have

The Service pages should each have a reference to one Service script... they are all in the pyrobotlab/service directory... Maybe we should put InMoovHead.py in there?

Thanks for the ongoing

Thanks for the ongoing help.

My goal was to modify the code (which finally works, thank you) into face tracking without centering the camera with a servo.

So I wanted to mount the camera in a fixed position and move the servo to a specific coordinate taken from the picture.

It's for an animatronic head I'm building. The eyes should point at a person in the room (the eyes will be small glass balls and cannot be cameras).

Later the head should get a neck with pan and tilt too.

Sadly, I realized I need to learn a lot more about MyRobotLab.

I have worked a lot with Arduino code and have done a lot with it, like eyelid blinking and so on, but bringing it to the next level is hard.

I'm more into the mechanical part of it at the moment, so thank you for the great help and support here.

Maybe such a script even exists already; if so, please forward it to me.
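
The fixed-camera idea described above can be sketched as a simple linear mapping from the centre of the detected face rectangle to a pair of servo angles. This is only an illustration: the frame size (320x240), the servo ranges, and the function names `map_range` and `face_to_servo_angles` are assumptions, and a real setup would need calibration for the camera's field of view and its offset from the eyes.

```python
def map_range(value, in_min, in_max, out_min, out_max):
    """Linearly map value from [in_min, in_max] to [out_min, out_max], clamped."""
    value = max(in_min, min(in_max, value))
    span = float(in_max - in_min)
    return out_min + (value - in_min) * (out_max - out_min) / span


def face_to_servo_angles(x, y, width, height,
                         frame_w=320, frame_h=240,
                         pan_range=(60, 120), tilt_range=(70, 110)):
    """Convert a face rectangle (pixels) into (pan, tilt) angles in degrees.

    All defaults are assumed values for illustration only.
    """
    # Centre of the bounding box in pixel coordinates
    cx = x + width / 2.0
    cy = y + height / 2.0
    pan = map_range(cx, 0, frame_w, pan_range[0], pan_range[1])
    tilt = map_range(cy, 0, frame_h, tilt_range[0], tilt_range[1])
    return int(round(pan)), int(round(tilt))


# A 40x40 face centred in a 320x240 frame maps to the middle of both ranges
pan, tilt = face_to_servo_angles(140, 100, 40, 40)
print(pan, tilt)  # 90 90
```

If the eyes turn the wrong way because the camera faces the person, swapping the two values in `pan_range` (for example `(120, 60)`) reverses the direction.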

2 Way Info

Glad we can help :)

Hope we can continue to help. Learning should not be painful, but understand that we are learning and developing too. It's "in process" work.

The more information you can give us regarding your project - e.g. pictures, inventory, parts, what things are connected to what, future goals, etc. the more quality help we can give to you.

o/

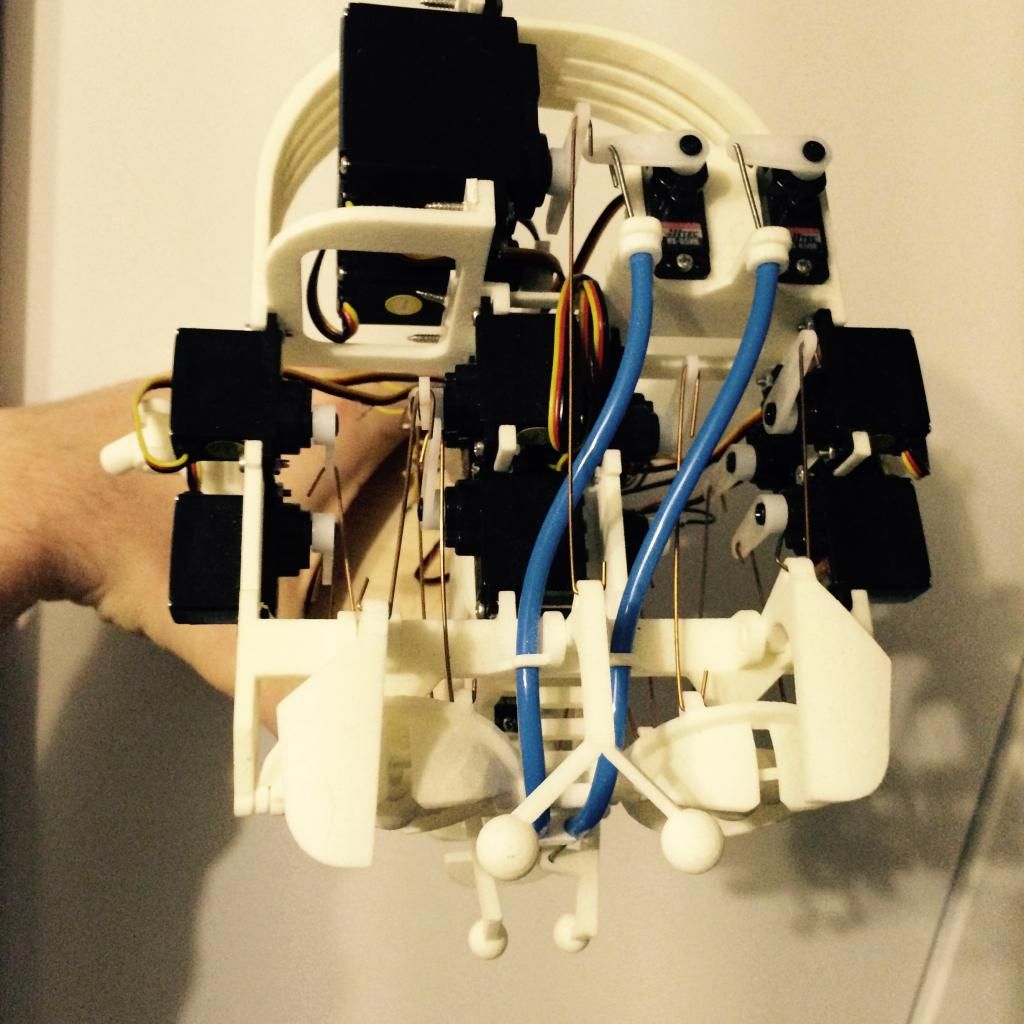

Hello and here are some

Hello, and here are some details.

I'm making an animatronic puppet head (the mechanical stuff is more my thing).

It consists of 14 servos at the moment, for lips, jaw, eyes, eyebrows, eyelids, and head pan/tilt.

My goal is software that brings him to life.

I made some progress with Arduino to get the eyes to blink and look around, but to take it to the next level I need MyRobotLab.

First I wanted to get the basics working, but for the full project the head should track a face in the room with a fixed camera and look at it with eyes and head,

blink the eyes randomly, and talk. The talking would be done via an mp3 that is played, from which a servo movement is generated.

So I wanted to alter the code from above to just use the coordinates from the picture for tracking people in the room.

Is there hope for me?

And is it possible to alter the gesture creator from InMoov for such a project?

What would be the best way to get all this done?

Thanks for the ongoing support.

Hello I'm back with an

Hello, I'm back with an update.

I got the eye tracking and blinking working. Now I just need an idea for getting the mouth to work with sound.

Is there a way to play back an mp3, take its amplitude, and map that to a servo position?
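
One way to think about the amplitude idea: split the decoded audio into short windows, take the RMS level of each window, and map that level to a jaw angle. The sketch below assumes the mp3 has already been decoded to signed 16-bit PCM samples by some other tool; the function names, the window size, and the jaw angles are hypothetical, and it uses a synthetic signal rather than a real file.

```python
import math


def rms(samples):
    """Root-mean-square level of a list of PCM samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / float(len(samples)))


def jaw_positions(samples, window=441, closed=10, opened=60,
                  full_scale=32767.0):
    """Return one jaw angle per window of audio.

    window=441 is 10 ms at 44.1 kHz; closed/opened are assumed servo angles.
    """
    positions = []
    for start in range(0, len(samples), window):
        # Normalise the window's loudness to 0.0..1.0
        level = min(1.0, rms(samples[start:start + window]) / full_scale)
        positions.append(int(round(closed + level * (opened - closed))))
    return positions


# Synthetic signal: 10 ms of silence followed by 10 ms at full scale
signal = [0] * 441 + [32767] * 441
print(jaw_positions(signal))  # [10, 60]
```

In practice the angles would be sent to the jaw servo in time with playback, and some smoothing between windows would probably be needed to keep the movement from looking jittery.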

What about using the Speech

What about using the Speech service? http://myrobotlab.org/service/Speech

It's more flexible than using canned mp3s in that you can dynamically change what is spoken when it runs.