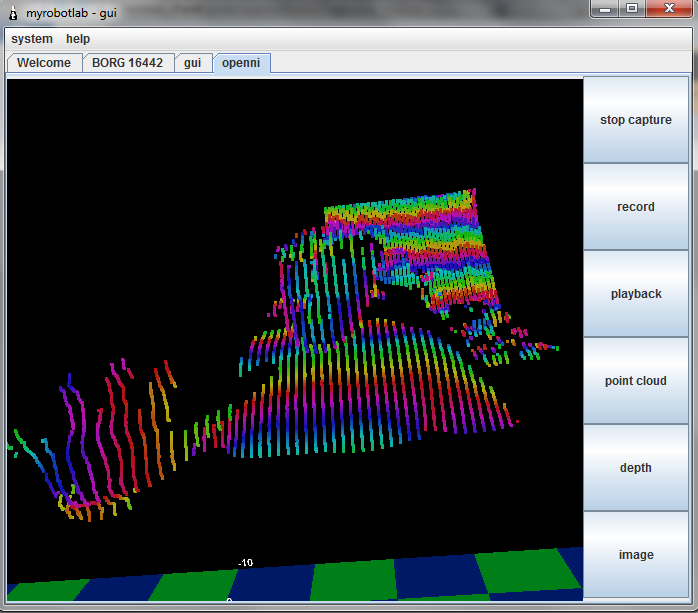

Update 2012.08.09 - High Density 3D Point Cloud in Java3D - Video Up

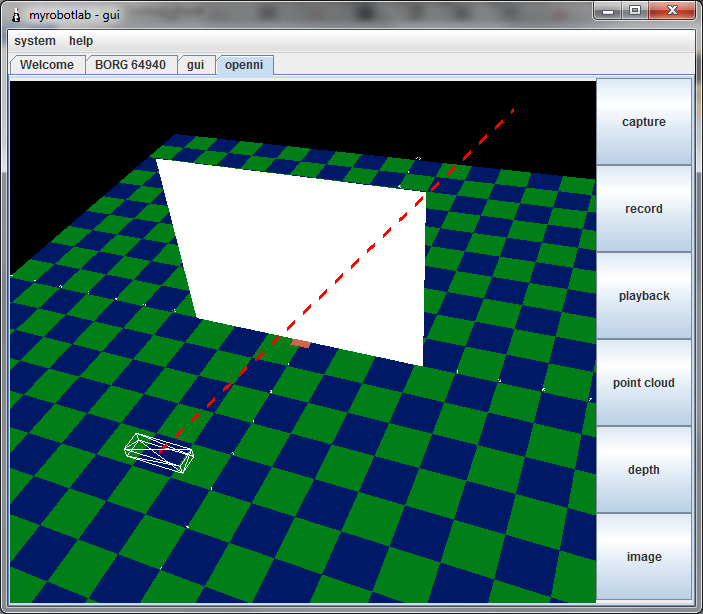

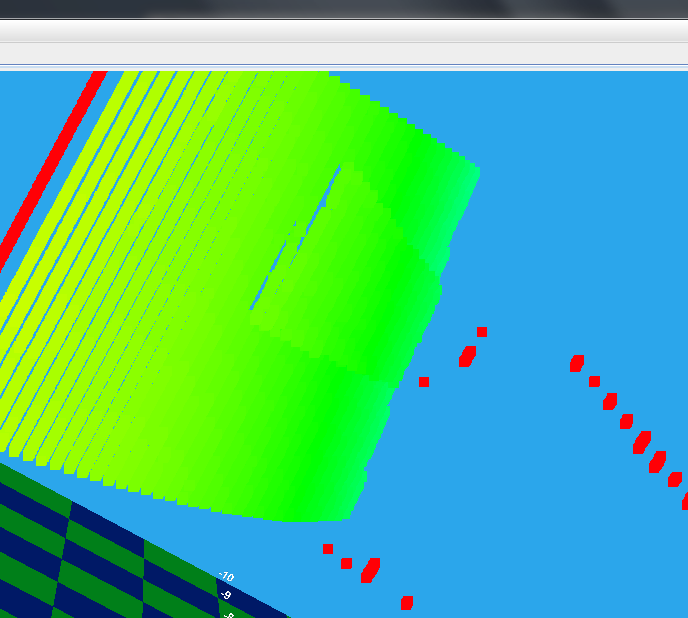

Same scene zoomed in

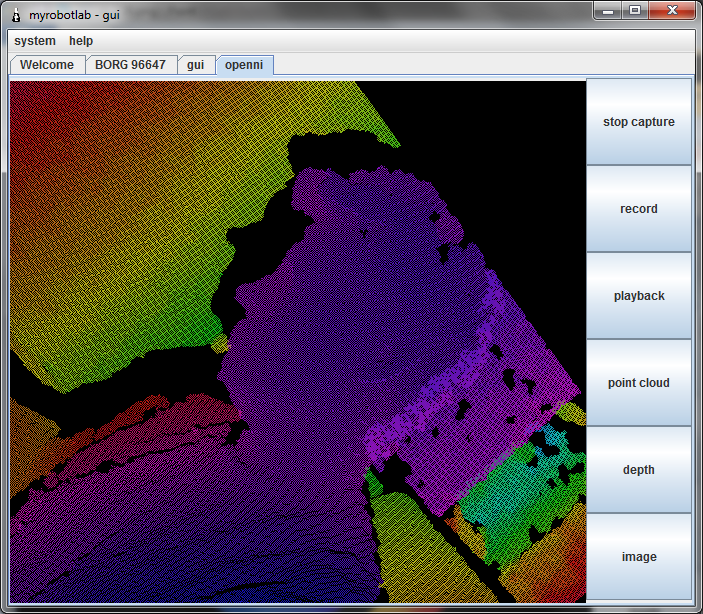

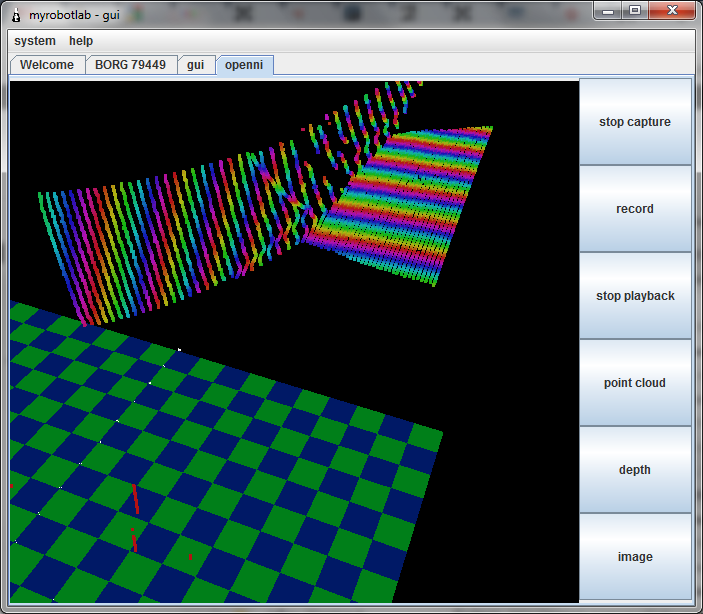

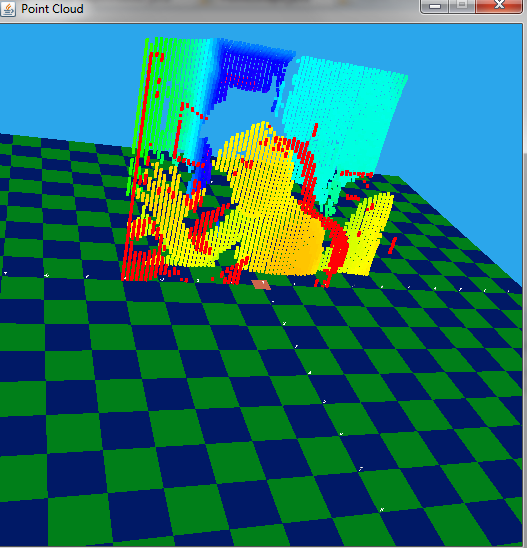

HSV - unknown scale colored depth over point cloud

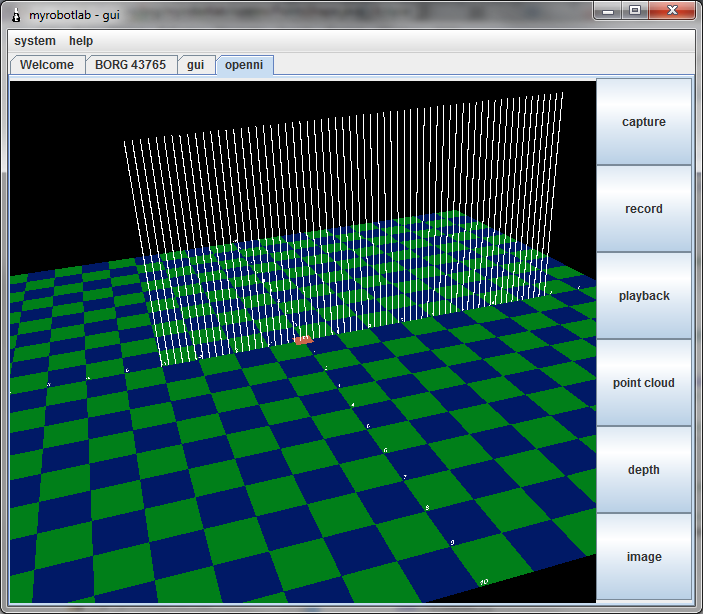

High-density 3D point cloud of floor, door, & wall. Finally got some good results, and I am beginning to understand the translation of Kinect data to the Java3D view. Additionally, this was done from saved Kinect data (i.e. anyone can now look at a 3-dimensional view of my office with MRL & the data file)... Next? I want to color code again with HSV over the full range of the Kinect - with a scale which shows the real distance values in centimeters. E.g. red = 300-400 cm, orange = 400-500 cm, etc.

Update 2012.08.07

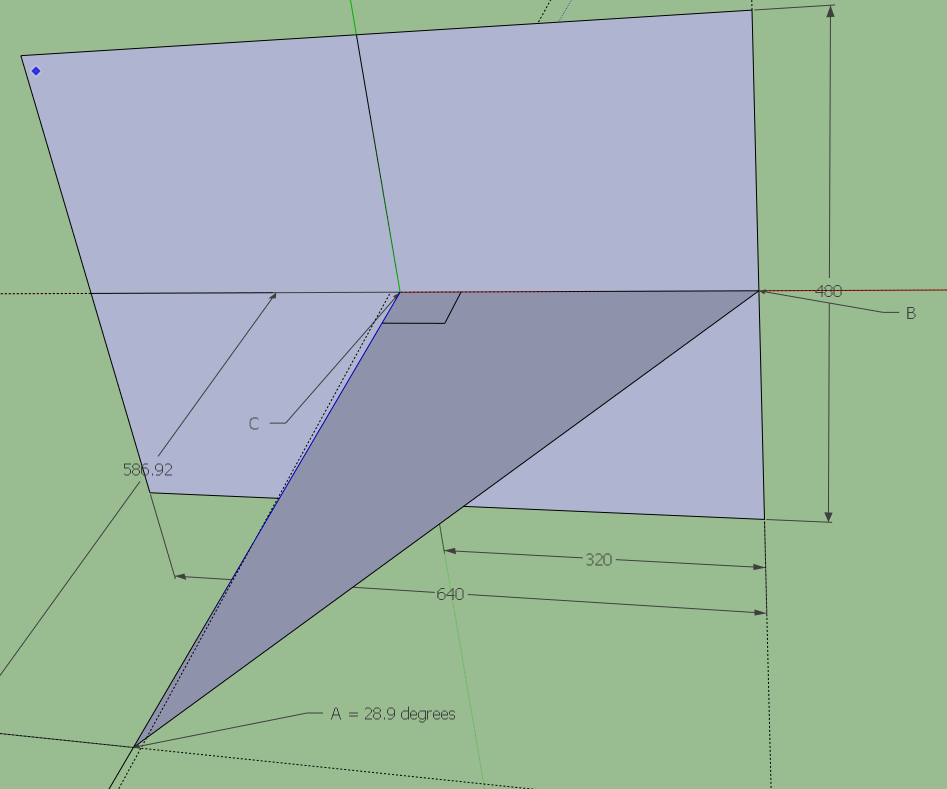

Well, let's start scaling ! In Java3D the world is represented in a Cartesian coordinate system with 3 dimensions in float units, which means one unit can stand for "any length value".

For convenience, I have decided to make:

1 virtual Java3D unit = 1 meter

The solid point cloud wall has 640 x 480 points - this becomes 6.40 m x 4.80 m.

Now, how to find the distance of the camera from the center of the point cloud wall ?

Trig !

Solve right triangle :

A = 57.8/2 = 28.9 degrees

a = 320

B = 90 - 28.9 = 61.1 degrees

We are looking for b - the distance of the Kinect from the center of the plane.

b = a/tan(A) = 320 / tan(28.9°) = 320 / 0.5520 = 579.7 cm
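The same right-triangle calculation can be sketched in Java. This is only an illustration: the class and method names are made up here and are not part of MRL or Java3D. Note that `Math.tan` expects radians, so the half angle is converted first; with a = 320 and a 57.8 degree field of view it comes out at roughly 580 cm.

```java
// Sketch only: CameraDistance and distanceToPlane are hypothetical names.
class CameraDistance {

    /**
     * Distance from the camera to a flat plane that exactly fills the view.
     *
     * @param halfWidth  half the width of the plane (side "a" of the triangle)
     * @param fovDegrees full horizontal field of view of the camera, in degrees
     * @return distance to the plane (side "b"), in the same unit as halfWidth
     */
    static double distanceToPlane(double halfWidth, double fovDegrees) {
        // Angle A is half the field of view; Math.tan works in radians.
        double halfAngle = Math.toRadians(fovDegrees / 2.0);
        return halfWidth / Math.tan(halfAngle);
    }

    public static void main(String[] args) {
        // 640 points wide at 1 cm per point gives a half width of 320 cm.
        double b = distanceToPlane(320.0, 57.8);
        System.out.println("distance to plane (cm): " + b);
    }
}
```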

The density is impressive (10X from the previous example); initializing all the points of the point cloud with 0 z depth gives me a solid wall. Unfortunately, I've botched something up in the translation from Kinect data to z depth, so I can't show a 3D point cloud at the moment. Before I fix that, I want to work on the translation so that I know its scale and all the coordinates and projections are known and accurate. Right now I'm starting to place a representation of the Kinect into the model. After that is done, I'll adjust and calculate the distance to the depth plane using the Kinect's maximum field of view angle. This will help to get a visual representation of the location and heading of the Kinect camera.

Update 2012.08.06

Artifact gone, but found a lot more work I need to do.... Going to see what MAX res does ....

Whoa ! I found out how to do transparencies in Java3D - tried it out and everything got all wonky.. Digging deeper into the original PointCloud example, I'm amazed that there is such a large amount of data which is NOT being used.. Or rather a huge amount of data which is not being displayed. The author chose not to display it as an optimization. This ties into the differences between the PointCloud generators in Processing and this particular one. The Processing demos looked very detailed; this one "looked" low res. So, I've found the root cause of it, but I think what would be optimal is the ability to dynamically change resolutions.. No?

First step is ripping out a bunch of code and having it display ALL the data - preferably at a correct scale with Trig negating the warping effect. Once that is done, I'll incorporate dynamic resolution and other optimizations like a Trig lookup table.

Figured out what the "artifact" is - it's simply all the points with 0 depth. A plane is drawn each frame, the Z-depth is calculated, and the points are "pushed" to the appropriate depth. I think this can be fixed by checking whether the Z-depth is equal to min or max and, if so, drawing the point as transparent.
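A minimal sketch of that min/max check, assuming the depth frame is available as a primitive `short[]`. The class name, method name, and the idea of returning a boolean mask are illustrative choices, not the code from the original example; how the mask is turned into transparency in Java3D is left out.

```java
// Sketch only: DepthFilter and visibleMask are hypothetical names.
class DepthFilter {

    /**
     * Marks which depth samples should be drawn.
     * A sample is hidden when its raw value sits on the min or max boundary
     * (for example the 0-depth points that form the "artifact" plane).
     */
    static boolean[] visibleMask(short[] rawDepth, int min, int max) {
        boolean[] visible = new boolean[rawDepth.length];
        for (int i = 0; i < rawDepth.length; i++) {
            // Mask to read the short as an unsigned 16-bit value.
            int d = rawDepth[i] & 0xFFFF;
            visible[i] = d > min && d < max;
        }
        return visible;
    }

    public static void main(String[] args) {
        short[] frame = { 0, 500, 2047 };
        boolean[] mask = visibleMask(frame, 0, 2047);
        System.out.println(java.util.Arrays.toString(mask));
    }
}
```

Points where the mask is false could then be drawn transparent or skipped entirely.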

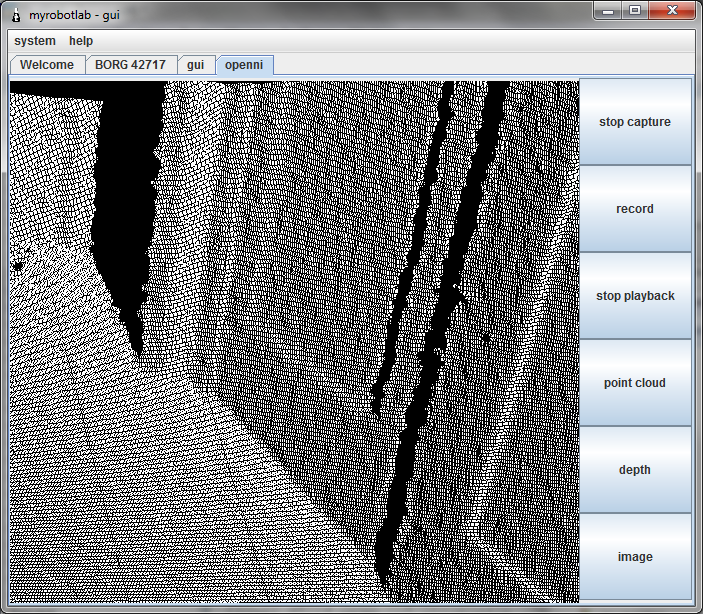

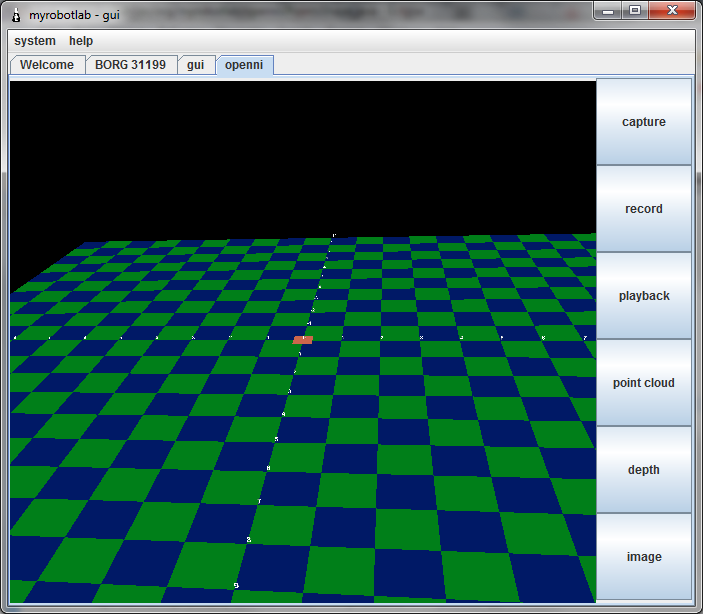

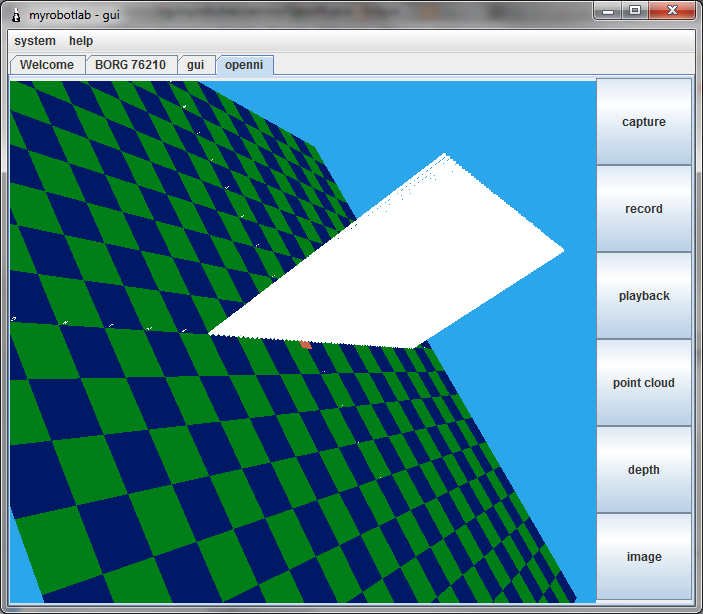

Got "Playback" working... now to straighten this wall & floor.

Update 2012.08.04

At this point I want to get some constants for the Kinect :

So many good resources, here's one:

- The depth image on the Kinect has a field of view of 57.8 degrees

- The minimum range for the Kinect is about 0.6m and the maximum range is somewhere between 4-5m

- Currently the depth map is mirrored on the X axis

Need to get the Z data value and determine how that corresponds to meters - according to this excellent resource - it's depthInMeters = 1.0 / (rawDepth * -0.0030711016 + 3.3309495161);
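Wrapped up as a small Java helper, the formula above might look like the sketch below. The constants are the ones quoted; the class and method names are made up, and returning 0 when the denominator is not positive is my own assumption about how to treat out-of-range raw values, not something the resource specifies.

```java
// Sketch only: KinectDepth and rawDepthToMeters are hypothetical names.
class KinectDepth {

    // Constants from the formula quoted above.
    static final double SLOPE = -0.0030711016;
    static final double OFFSET = 3.3309495161;

    /**
     * Converts a raw Kinect depth value to meters.
     * Assumption: raw values that make the denominator zero or negative
     * are treated as "no reading" and returned as 0.
     */
    static double rawDepthToMeters(int rawDepth) {
        double denominator = rawDepth * SLOPE + OFFSET;
        if (denominator <= 0.0) {
            return 0.0;
        }
        return 1.0 / denominator;
    }

    public static void main(String[] args) {
        for (int raw : new int[] { 0, 400, 800, 1000 }) {
            System.out.println(raw + " -> " + rawDepthToMeters(raw) + " m");
        }
    }
}
```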

Wow, Daniel Shiffman got some great results with Processing. I'll look at his examples in detail and try to get them to work without Processing and using Java3D.

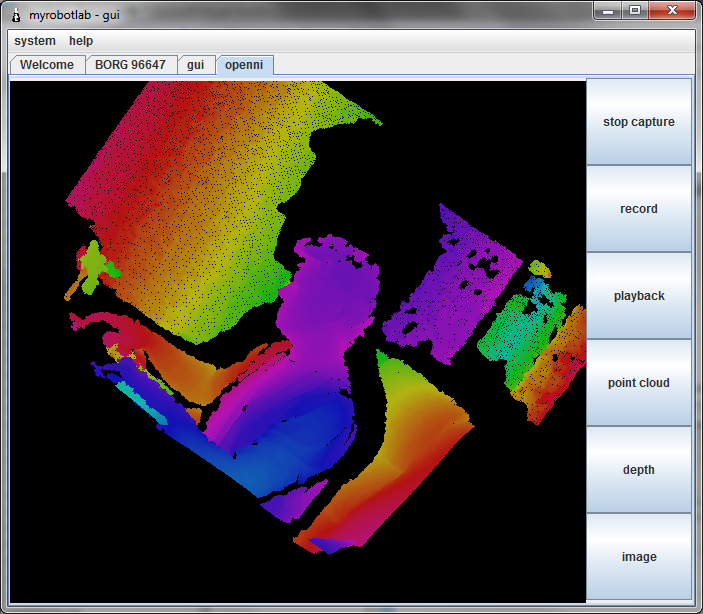

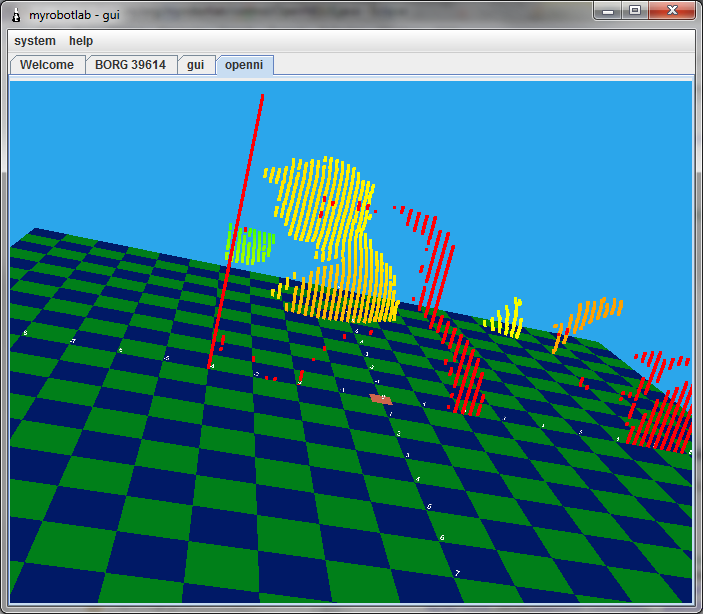

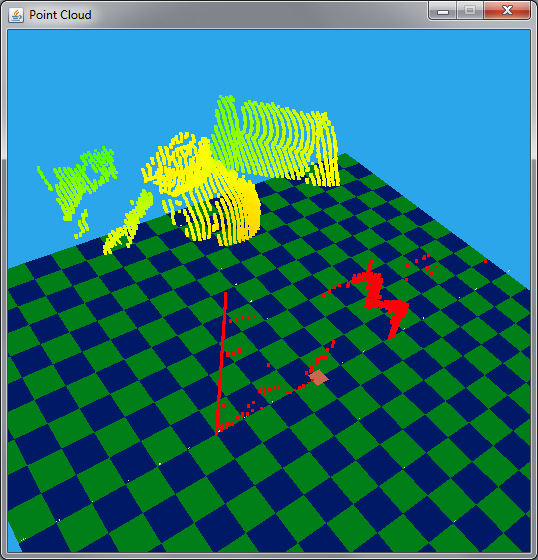

3D Rainbow monster

Yay - got the simple data to work - so now Playback is at least theoretically possible (not implemented yet). As I dived into the code I could see the original point cloud example used a ColorUtils jar to do HSV to RGB conversion. The Java JRE can do this conversion, so I'm using the native version now (although in this picture I have the cyclic range too short and you get several cycles of roygbiv). I found some potential optimizations too. Non-Trig distortions & 0 range artifacts still exist - will work on those next.
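For reference, the JRE conversion is `java.awt.Color.HSBtoRGB`. Below is a small sketch of mapping a depth value onto a single pass through the hue range, so the colors do not cycle several times. The class name, method name, and the 0.8 hue cutoff are illustrative assumptions, not the values used in the screenshots.

```java
import java.awt.Color;

// Sketch only: DepthColor and depthToRgb are hypothetical names.
class DepthColor {

    /**
     * Maps a depth in [minDepth, maxDepth] to a packed RGB int using one
     * pass over the hue wheel. Values outside the range are clamped.
     */
    static int depthToRgb(float depth, float minDepth, float maxDepth) {
        float t = (depth - minDepth) / (maxDepth - minDepth);
        t = Math.max(0f, Math.min(1f, t));
        // Stop at hue 0.8 (violet) so the far end does not wrap back to red.
        float hue = t * 0.8f;
        return Color.HSBtoRGB(hue, 1f, 1f);
    }

    public static void main(String[] args) {
        // Depths in centimeters, chosen only as an example range.
        for (float cm = 60f; cm <= 500f; cm += 110f) {
            System.out.printf("%.0f cm -> #%06X%n", cm, depthToRgb(cm, 60f, 500f) & 0xFFFFFF);
        }
    }
}
```

Widening or narrowing the `minDepth`/`maxDepth` pair is what controls how many color bands appear across the scene.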

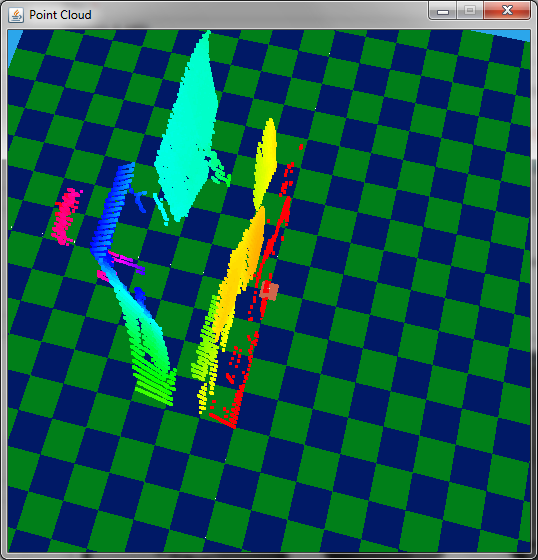

Missing Trigonometry...

Looking through the example code I did not find any trig references to get the depth data into the Cartesian point form of the display. Here is confirmation that it's needed and lacking. Above I aimed the Kinect at an angle against a straight wall - you can see that it curves. At the moment I'm dealing with trying to convert the data and display to use primitive data structures instead of the NIO buffers. This will allow the "playback" of a capture (the NIO buffers are not serializable). It should help me understand how to use Java3D too. I'm pretty impressed with the display; you can make it fullscreen without reducing the framerate. In the process I intend to fix the Trig issue and the artifacts - in this frame they are red.
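One common way to supply the missing trig is a simple pinhole-camera projection. The sketch below is an assumption-laden illustration, not the fix that ended up in MRL: it assumes a 640 x 480 depth image, the 57.8 degree horizontal field of view, square pixels, and a depth value measured along the camera's optical axis. All names are hypothetical.

```java
// Sketch only: DepthProjection and toCartesian are hypothetical names.
class DepthProjection {

    static final int WIDTH = 640;
    static final int HEIGHT = 480;
    static final double H_FOV_DEGREES = 57.8;

    // Focal length in pixels, derived from the horizontal field of view.
    // Assumption: square pixels, so the same value is used vertically.
    static final double FOCAL_PX =
            (WIDTH / 2.0) / Math.tan(Math.toRadians(H_FOV_DEGREES / 2.0));

    /**
     * Projects pixel (u, v) with the given depth into camera-centered
     * Cartesian coordinates {x, y, z}, in the same unit as depth.
     */
    static double[] toCartesian(int u, int v, double depth) {
        double x = (u - WIDTH / 2.0) * depth / FOCAL_PX;
        // Image rows grow downward, so flip the sign to make +y point up.
        double y = (HEIGHT / 2.0 - v) * depth / FOCAL_PX;
        return new double[] { x, y, depth };
    }

    public static void main(String[] args) {
        double[] p = toCartesian(0, 240, 2.0);
        System.out.println(p[0] + ", " + p[1] + ", " + p[2]);
    }
}
```

The key point is that x and y scale with depth, so pixels far from the image center spread out more the farther away the surface is.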

Update 2012.08.03

Adding functionality - currently "capture/stop capture" & "record/stop recording" work.

Record is a nice addition since we can then save the Kinect data and use it without the actual sensor, so SLAM testing can be done without the Kinect hooked up. I'm converting the NIO ShortBuffer to a Java primitive short array so other services won't need to bring in the NIO dependency to work on the depth data.
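Copying a `ShortBuffer` into a primitive `short[]` only needs standard `java.nio` calls. A minimal sketch (class and method names are made up for illustration):

```java
import java.nio.ShortBuffer;

// Sketch only: DepthBuffers and toArray are hypothetical names.
class DepthBuffers {

    /**
     * Copies the full contents of a ShortBuffer into a new short[].
     * Works for direct buffers too, which have no backing array.
     */
    static short[] toArray(ShortBuffer buffer) {
        // duplicate() shares the data but has its own position and limit,
        // so the caller's buffer state is left untouched.
        ShortBuffer view = buffer.duplicate();
        view.rewind();
        short[] out = new short[view.remaining()];
        view.get(out);
        return out;
    }

    public static void main(String[] args) {
        ShortBuffer depth = ShortBuffer.wrap(new short[] { 10, 20, 30 });
        short[] copy = toArray(depth);
        System.out.println(copy.length + " samples copied");
    }
}
```

A plain `short[]` is serializable, which is what makes saving a capture to a file straightforward.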

The current code I got from Dr. Andrew Davison's example will have to be modified to accept primitives. Then I'll need to find out the source of the artifact which is being created here.

Update 2012.08.01 It's been Borg'd in !

Yep, welcome the new OpenNI Service in MRL ! The example code was well designed. It had a sensor data layer (the Service) and a display layer (which I put in the GUIService).. So, as with all Services, the display can be on a different computer and the Kinect can stream the sensor data across the internet if necessary. Andrew Davison's example has some bugs I need to clean up, and I'll bring in more functionality after looking at the Processing examples.

Update 2012.07.31

Progress - I got Dr. Andrew Davison's example of a Point Cloud using OpenNI on Java running !

He uses Sun's Java3D, which makes it easy to mouse around the 3D view.

But, in comparison to the Processing demos, this looks inaccurate... The depth is represented as quantum jumps between planes, while the Processing examples show the surface contours of the objects.

Next Steps :

- Begin organizing the repo - so that MRL can easily support OpenNI on all the various platforms

- Begin looking into the Processing examples for math and display differences

- Start the OpenNI service and control panel

The depth is definitely "squished" in the representational display. Maybe it was just a choice to get everything on the grid. My head is flat, but not that flat. Going to dive in and look for the translation formula from Kinect data to Cartesian.

A little better, I'm not such a flat head. This was done by tweaking a few constants in the example. I'm guessing the Processing examples use a different way of displaying the "points" of the point cloud. If I remember correctly, at least one of the demos made bounding boxes which reached their neighbors. This would give a view of more "solid" objects. I can see a red plane too. I think this might be "residue on the minimum boundary". The Kinect has a minimum limit of 18" or so. This should probably be filtered out.

(Starting to look at OpenNI - ran some of the samples of simple-openni - nifty hand tracking... Wheee !)

(NiSimpleSkeleton & NiUserTracker64 crashes... but UserTracker.net.exe works !)

Well, today starts my foray into OpenNI. OpenNI is a non-profit organization which produces drivers and middleware that support gesture recognition. PrimeSense (creators of the Kinect's depth-sensing technology) is one of the main members of OpenNI.

I figured I'd tackle the Windows platform first, since I have a tendency to use 64 bit Windows 7 (work demands it). There is a download at http://code.google.com/p/simple-openni/ for Windows 64. It's a zip which contains 4 separate installers. There might be another source; I'm not sure about simple-openni vs OpenNI. Then of course there is OpenKinect. Yikes, so much software ... so little time.

This article succinctly explains the differences between OpenKinect & OpenNI.

Time to jump in !

After installing the 4 installers from the "google code" site, I started looking for examples to run. Some were console output which gave a distance value for the middle pixel. I could see possibilities for this since I'm interested in a Point Cloud. At this time, making a point cloud from a Kinect is (almost) old news. I think it's been done from both OpenKinect & OpenNI (at least utilizing their drivers).

I'm specifically looking for Point Cloud info for Java. Java implementations often have to have shim adapters to get to data from platform specific drivers. I've begun googling and below are a few possible resources.

- Nifty Demo of a Point Cloud from Kinect - showing different point resolutions and other effects

- Another Point Cloud demo

- Processing display (Java) + Kinect - Good Point Cloud

- Daniel Shiffman - Point Cloud on OSX (only)

- Java 3D + Kinect

- Kinect Depth vs Actual Distance

One of the goals of this Kinect exploration is to build a SLAM implementation using the point cloud and camera from the Kinect. That's what we all want, right ! And gesture recognition too, so we can tell our robots to go stand in the corner when they've been bad.

Update 2012.07.30

It does not seem like simple-openni is the most stable set of software. Several of the examples crash. Even the viewers have glitches in the camera feed, and crash. This was surprising, as OpenKinect appears more stable. Weird, no? The drivers made by hackers are more stable than the community effort of the manufacturers of the Kinect? What the what?

Right now I have OpenKinect installed on Ubuntu 32 bit laptop working well & OpenNI on a 64 bit Windows 7 machine. Still more testing to do...

Holy JNI Wrappers Batman... There appears to be a Jar which came with OpenNI.. They've thought of Everything ! But wait, there's more - someone's done an implementation of a point cloud using said distributed jar ! - http://fivedots.coe.psu.ac.th/~ad/jg/nui14/

Hmmm... his "cloud" seems very flat - maybe since it's at a greater distance than the Processing videos? Hmmm... dunno.. will need to explore more... Definitely feeling the need for a new Service !

Found an OpenNI programmer's guide - http://openni.org/docs2/ProgrammerGuide.html ... hmmm looking for JavaDocs .... Java related shtuff - http://openni.org/docs2/tutorial/smpl_simple_view_java.html

No JavaDocs though .. not that I'm complaining. This is usually WAY more info than I usually get. I'm thinking this might not be so hard.... but after the PointCloud in MRL comes SLAM (more work !)

Amazing. . .

Every time I see Kinect based robot demos, I'm astounded that a relatively inexpensive device, arguably a child's toy, finally gives our robots the ability to see.

I agree,

It's an impressive sensor for its "toy" price.

How goes your project DWR? Considering the large "push" of developing software which was done when I was looking the other way, I believe I'll have some form of integration with MRL very soon....

Wow, This is great! The possibilities are endless with kinect and MRL together!

Uh. . .My project

Still exists. My life is full of minor distractions--and major ones. (The biggest one is my wife's health, but that's not so much time related as energy draining--Never mind.)

Mostly it's a matter of connecting a few things, which you've given me fresh incentive to do. I'm awaiting delivery of a 3d printer and plan to make body parts with it.

I'm sorry, I hope your wife's health improves.

In regards to the 3d printer, I've tossed the idea of getting one too.. Are you going to get a Makerbot or some other derivative?

3d printer

The 3d printer I chose is a Makibox A6. My choice was based on two things--First, it's incredibly cheap at $300 plus $50 shipping. Second, its creator comes from a product design background (toys), and when you look at the Makibox, it's one of the few printers out there that doesn't look like it was assembled in a garage.

Currently the project is about 3 months late to ship. However, the blog shows regular updates, and they're close. That being said, they always seem to be working on something else "in parallel" to the main project. The most controversial change was a switch from plastic filament to pellet feed, and I was a hard sell on that one.

My plan is to fabricate certain structural components, such as arms that provide mounting areas for things like servos along with cable management.

As for my wife's health, she's a brittle diabetic and there's always something. Last thing was a sudden serious increase in her blood pressure, which seems to have been taken care of. But I don't want to go there any more.

Great functionality

Your ideas of capture/stop capture and record/stop record sound great for low powered processors. Does a Kinect make a suitable camera for OpenCV applications, or is that better served with another camera?

The Kinect is the "best" usb optical camera I have used so far. It has a much faster frame rate than any other web cam I have. The OpenCV Service has had Kinect support for a long time.

There was a long-standing bug in which the depth was not represented correctly, but I've just seen a post on a possible fix.

The OpenNI Service will have the gesture recognition and skeleton tracking which OpenCV does not. I also intend to make the OpenNI Service capable of publishing images which the OpenCV Service can consume - (and vice versa). Everyone can chat with everyone in a free society, Yay !

After working on the functionality of OpenNI, my plans are to start the SLAM service in earnest. It will consume simple data types which can be sent from either OpenCV, or OpenNI, or possibly other Services.

At the moment I'm using a Windows 7 64 bit machine for my workstation. I downloaded this to get the OpenNI drivers & NITE stuff working (http://code.google.com/p/simple-openni/) . I was wondering if you used this or the "real" OpenNI packages from openni.org ? If so do you know the differences ?

drivers

The Kinect, or rather the concept of the Kinect is one of the things that got me back into robots in a serious way after a long hiatus.

When it finally came time for me to put my money down, I went with an Asus Xtion Pro Live, which has all the features of a Kinect except for the motion sensing and motor in a smaller package powered directly from the USB port.

When I finally got it I found out two things--Linux support seemed to be little more than an afterthought, and I had almost no idea where to start. In researching it, I came across the Simple OpenNI site. I managed to get it working on my 64 bit Ubuntu system, but while trying to install it on the robot board where I used 32 bit Ubuntu, it turned out there was a file missing from the binaries, which ultimately was solved.

As I recall, there were 3 parts to it--the drivers for Kinect or Xtion, the OpenNI files and the Nite files. As far as I know, everything is the same EXCEPT SimpleOpenNI is a wrapper for OpenNI. I do remember going directly to the PrimeSense website for the drivers I needed.

I remember I got OpenCV to work on Houston's mainboard through MRL, but not with the Xtion Pro Live. (I used a webcam that I happened to have bouncing around.) I heard that as long as the Xtion Live Pro has the proper drivers installed, it should work the same way a Kinect does. Heh. I guess we'll see.

Wow, not having this plug & draw of 12 watts would be great... hmm another toy to get :D

heh

I like it when you have the same hardware I do. LOL

Just to be complete.

http://www.asus.com/Multimedia/Motion_Sensor/Xtion_PRO_LIVE/

http://www.newegg.com/Product/Product.aspx?Item=N82E16826785030

Thanks for the links, From

Thanks for the links,

From the details - it looks like the depth camera resolution is 640 x 480 @ 30 fps - 320x240 @ 60 fps? Is that correct? I believe this is the same as the Kinect, with video at 1280x1024.

Found this - http://wiki.ipisoft.com/ASUS_Xtion_vs_MS_Kinect_Comparison

Have you gotten depth data from your unit? Was there software included? What is your take between the kinect & Xtion ?

Depth data

Uh. . .I don't think so. I know I've never actually used it to do anything with depth. I've gotten the Processing demos to run in Simple OpenNI. I've used the skeleton demo to do a simple "scan this direction" experiment. But never tried to use depth information. Sorry.

I have not heard of the makibot before - it looks good and $300 is a great price. The makibot site seems a bit broken at the moment.

Yesterday, after reading your comment about getting a 3D printer, I found this well maintained matrix - http://www.additive3d.com/3dpr_cht.htm It gives a nice comparison of most 3D printers, albeit - makibot is not up there. And the price is great. I thought the Netherlands' Ultimaker (Details here...) looked pretty good, but it's 5 X the price.. It's one of the few printers in that price range which returns a "fair to good" finish.

Keep me posted with your Makibot, hopefully it will arrive soon.

Uh yeah. . .

The Makibox (correction--I called it makibot--with a "T". It hasn't been called that in months. Probably because of potential legal issues.) has a strong smell of "vaporware" to it, with a lot of people claiming it's a scam.

Back when they were keeping track of such things, they had about 400 supporters. I got in as one of the early 300s. There have been a few people who dropped out, tired of waiting I guess, and a few more dropped out when development switched over to a pellet feed system. A pelleted plastic to filament is a sort of holy grail in the 3d printing world because the price and availability of plastic pellets (the "raw" form of plastic that everything made of plastic starts with) is so much better. It's not an easy thing to do but they seem to be close.

I've seen that matrix too. I guess they're waiting until the Makibox actually ships before adding it. The site HAS run an article or two about the Makibox.

All that being said, it IS about 3 months late to ship, with another month or so before it does.

Wow

I gotta say Greg. Once you actually dive into one of these projects--You make things happen.

Thanks, I try - once I'm attached I'll continue to nibble on it until I get distracted .... "SQUIRREL !"