Stereo Vision

Stereopsis, or stereo vision, is a process in which two or more cameras are used to determine the range or depth of objects. The two cameras produce two slightly different images. Objects are matched between the two pictures, and the difference in their positions (horizontal disparity) is used to determine their depth or range from the cameras. If the distance between the two cameras is known, simple trigonometric functions can be used to calculate the distance between the cameras and the objects in view. Stereopsis is most commonly referred to as depth perception. Sometimes more than two cameras are used; this is referred to as Multi-View Stereopsis. With more viewers, more data can be processed, which makes more accurate distance calculations possible. Some strategies for determining depth or ranging targets involve moving the viewers to more than one location. Again, collecting data from multiple locations can improve the accuracy of the depth estimate.
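The trigonometry mentioned above reduces to a single ratio in the simplest case. The sketch below assumes two identical cameras mounted side by side with parallel optical axes, a focal length already expressed in pixels, and a disparity that has already been measured for a matched point. The function name and the example numbers are hypothetical and are not taken from any particular library.

```python
def depth_from_disparity(focal_px, baseline, disparity_px):
    """Estimate the distance to a point seen by two parallel cameras.

    focal_px     -- focal length in pixels (assumed equal for both cameras)
    baseline     -- distance between the two camera centers (any length unit)
    disparity_px -- horizontal shift of the matched point between images, in pixels

    The result is in the same unit as `baseline`. It follows from similar
    triangles: depth / baseline = focal_px / disparity_px.
    """
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity.
        raise ValueError("disparity must be positive")
    return focal_px * baseline / disparity_px


# Hypothetical numbers: 400 px focal length, cameras 6 inches apart,
# and a matched point that shifts 20 px between the two images.
print(depth_from_disparity(400, 6, 20))  # 120.0
```

Note that depth is inversely proportional to disparity: nearby objects shift a lot between the two images, while distant objects barely move, so range estimates get coarser with distance.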

Robotic relevance

Stereopsis can be used in mobile robotics to avoid obstacles. With the right software, inexpensive webcams can be used as obstacle-avoidance sensors or even for 3D modeling and mapping. Raw images sent to your robot are not necessarily very useful. The images must be "interpreted" in order to provide useful sensor data. Stereopsis range maps, object recognition, and 3D models are all examples of interpreted or processed data. These are things we do all the time without even consciously thinking about it, and yet machine vision is a very young field of study.

Natural Examples of Stereo Vision

- They move their head back and forth slightly (like an owl).

- If the object is far enough away, they will move their entire body a few steps to the left or right.

- They will look near their feet and find something they know the length of. Typically they will look at their foot (it's usually easy to find). They will begin counting with an imaginary foot, stacking invisible feet all the way to the target point.

- They will squint out one eye then the other.

Another interesting fact is that infants don't have depth perception, but develop it within 2 months. I thought this might be the process of their brain developing this faculty. But I read later that it has more to do with an eye muscle, which is not yet strong enough at this age to pull the eye inward and focus it.

dealing with trauma. And the reason that we see double is that this is the natural state. Since we have two eyes, we would see the two disparate images if the brain wasn't constantly trying to correspond and converge everything.

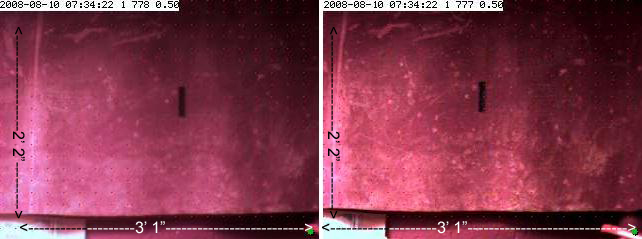

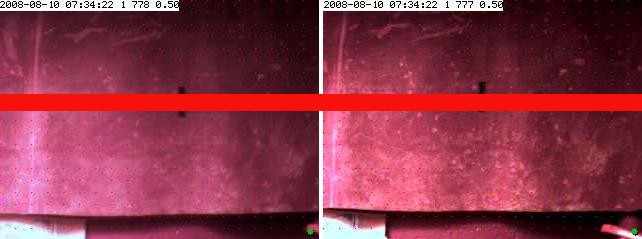

An average filter averages the pixels within a given area. Here, I chose to use vertical averaging tiles (10 x 60 pixels), because I was much more interested in vertical lines. Vertical lines are useful when the cameras are mounted on a horizontal plane, because they are what is used to find the horizontal disparity in stereopsis. Above are two pictures from a left and right camera.
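A minimal sketch of this kind of tile averaging is shown below. It assumes a grayscale image stored as a list of rows of pixel values, and it reads "10 x 60" as 10 pixels wide by 60 pixels tall. The function name `average_tiles` is hypothetical, and this is an illustration of the idea rather than the code used to produce the pictures above.

```python
def average_tiles(image, tile_w=10, tile_h=60):
    """Replace each tile_w x tile_h block of a grayscale image by its mean.

    image -- list of rows, each row a list of pixel intensities
    Returns a smaller grid with one averaged value per tile. Tiles on the
    right and bottom edges may be partial if the image size is not an
    exact multiple of the tile size.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    tiles = []
    for top in range(0, height, tile_h):
        row = []
        for left in range(0, width, tile_w):
            block = [
                image[y][x]
                for y in range(top, min(top + tile_h, height))
                for x in range(left, min(left + tile_w, width))
            ]
            row.append(sum(block) / len(block))
        tiles.append(row)
    return tiles


# Synthetic test image: 120 rows, dark left half and bright right half,
# which gives a single vertical edge between the two tile columns.
image = [[0] * 10 + [100] * 10 for _ in range(120)]
print(average_tiles(image))  # [[0.0, 100.0], [0.0, 100.0]]
```

Because the tiles are tall and narrow, detail along the vertical direction is smoothed away while horizontal changes in brightness survive, which is why vertical edges remain visible after filtering.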

Image Clipping

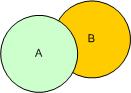

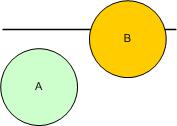

Object A appears to be in front of object B since object B is closer to the horizon.

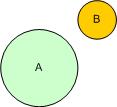

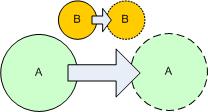

Object A travels farther than object B in the moving frame of view, so object A is closer than object B. If the distance the camera travels is known, the distance to the two objects can be derived using some simple trigonometry.
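One way to work through that trigonometry is sketched below. It assumes the camera slides sideways, perpendicular to the direction it is looking, and that the bearing to the object (its angle from the camera's forward axis) can be measured before and after the move. The function name and the example layout are hypothetical.

```python
import math


def depth_from_motion(travel, bearing_before_deg, bearing_after_deg):
    """Estimate forward distance to an object from a sideways camera move.

    travel             -- how far the camera moved sideways (any length unit)
    bearing_before_deg -- angle to the object from the forward axis, first view
    bearing_after_deg  -- the same angle measured after the move

    With the object at sideways offset X and forward distance Z:
        tan(before) = X / Z   and   tan(after) = (X - travel) / Z
    so Z = travel / (tan(before) - tan(after)).
    """
    shift = math.tan(math.radians(bearing_before_deg)) - math.tan(
        math.radians(bearing_after_deg)
    )
    if abs(shift) < 1e-12:
        raise ValueError("no apparent motion; object is too far away to range")
    return travel / shift


# Hypothetical layout: both objects start 10 units to the right of the
# camera, A is 20 units ahead and B is 40 units ahead. The camera then
# moves 10 units to the right, so both end up straight ahead (0 degrees).
bearing_a = math.degrees(math.atan2(10, 20))  # larger swing: A is closer
bearing_b = math.degrees(math.atan2(10, 40))  # smaller swing: B is farther
near = depth_from_motion(10, bearing_a, 0.0)
far = depth_from_motion(10, bearing_b, 0.0)
print(round(near, 3), round(far, 3))  # 20.0 40.0
```

The object whose bearing changes more for the same camera travel comes out closer, which matches the observation about objects A and B.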

- Black Tape 4" X 1/2"

- Distance from camera to tape 49"

- Field of view of the camera at 49" Horizontal 37", Vertical 26"

- Camera pixel width 320

- Camera pixel height 240
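The measurements listed above are enough to work out how much of the scene one pixel covers at that distance, and roughly how many pixels the tape should occupy. The sketch below is only arithmetic on those figures. It assumes the tape is oriented with its 4" side horizontal and that the field of view is centered on the optical axis; the variable and function names are hypothetical.

```python
import math

# Measurements from the list above (all lengths in inches).
DISTANCE_IN = 49.0
FOV_W_IN, FOV_H_IN = 37.0, 26.0      # visible width and height at 49"
IMAGE_W_PX, IMAGE_H_PX = 320, 240
TAPE_W_IN, TAPE_H_IN = 4.0, 0.5      # assumes the long side is horizontal


def inches_per_pixel(fov_in, pixels):
    """Scene length covered by a single pixel at the measured distance."""
    return fov_in / pixels


def view_angle_deg(fov_in, distance_in):
    """Full viewing angle implied by a visible extent at a given distance."""
    return math.degrees(2 * math.atan((fov_in / 2) / distance_in))


scale_x = inches_per_pixel(FOV_W_IN, IMAGE_W_PX)
scale_y = inches_per_pixel(FOV_H_IN, IMAGE_H_PX)
tape_w_px = TAPE_W_IN / scale_x
tape_h_px = TAPE_H_IN / scale_y

print(f"horizontal scale: {scale_x:.4f} in/px, vertical: {scale_y:.4f} in/px")
print(f"tape should span about {tape_w_px:.1f} x {tape_h_px:.1f} px")
print(f"horizontal view angle: {view_angle_deg(FOV_W_IN, DISTANCE_IN):.1f} deg")
```

With these figures each pixel covers a little over a tenth of an inch at 49", so the 4" tape should span roughly 35 pixels across. Comparing that prediction against the tape's measured width in an actual frame is a quick sanity check on the setup.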

"I dunno I'm working on my horizontal disparity map."
