Microsoft Kinect SDK: http://www.microsoft.com/en-us/download/details.aspx?id=40278

Microsoft Kinect Toolkit: http://www.microsoft.com/en-us/download/details.aspx?id=40276

The book I'm following: http://www.arduinoandkinectprojects.com/

E-book link: http://it-ebooks.info/book/761/

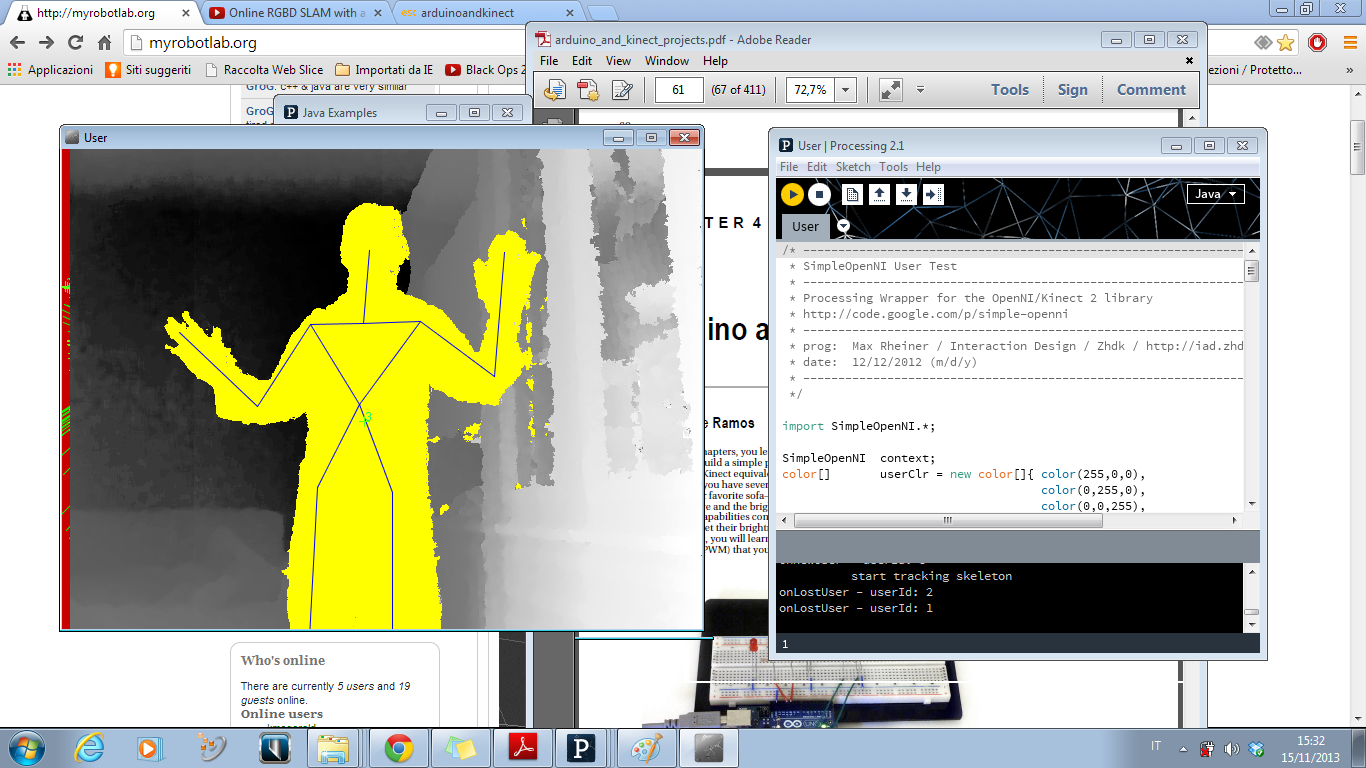

The Processing library I'm using (SimpleOpenNI): https://code.google.com/p/simple-openni/

[[Servo.serial.py]]

We Need Video !

Of course! I'll make a video as soon as I can control two servos using the x and y coordinates!
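Driving two servos from the x and y coordinates boils down to a linear re-mapping from image space to servo angles, the same thing Processing's `map()` does. A minimal sketch, assuming a 640x480 depth image and servos that accept 0 to 180 degrees (both are assumptions; adjust them for your own rig):

```java
// Sketch: convert a tracked point (pixel coordinates in the depth image)
// into pan/tilt servo angles. The image size and servo range are assumptions.
class KinectToServo {
    static final int IMAGE_WIDTH = 640;   // assumed depth-image width
    static final int IMAGE_HEIGHT = 480;  // assumed depth-image height
    static final int SERVO_MIN = 0;       // assumed servo range (degrees)
    static final int SERVO_MAX = 180;

    // Linear re-mapping like Processing's map(), clamped to the servo range.
    static int mapToServo(float value, float inMin, float inMax) {
        float t = (value - inMin) / (inMax - inMin);
        t = Math.max(0f, Math.min(1f, t));
        return Math.round(SERVO_MIN + t * (SERVO_MAX - SERVO_MIN));
    }

    // Pan follows x; tilt follows y, inverted because image y grows downward.
    // Whether "up" means a larger angle depends on how the servo is mounted.
    static int[] toPanTilt(float x, float y) {
        int pan = mapToServo(x, 0, IMAGE_WIDTH);
        int tilt = mapToServo(IMAGE_HEIGHT - y, 0, IMAGE_HEIGHT);
        return new int[] {pan, tilt};
    }

    public static void main(String[] args) {
        int[] angles = toPanTilt(320, 120);
        System.out.println("pan=" + angles[0] + " tilt=" + angles[1]); // pan=90 tilt=135
    }
}
```

Clamping keeps the servos inside their range when a hand drifts outside the tracked image.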

Try something like this in Processing (make sure the WebGUI service is running in MRL):
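For reference, a minimal sketch of such a call in plain Java (which Processing sketches compile to), using the standard `HttpURLConnection`. It assumes the WebGUI accepts a plain HTTP GET; the port 8888 and the `/api/service/servo/moveTo/20` path are hypothetical placeholders, not MRL's documented API, so substitute the URL that actually works for your MRL instance:

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URI;

// Sketch: fire one HTTP GET at MRL's WebGUI and report the status code.
// The URL used in main() is a hypothetical placeholder.
class MrlRest {
    static int get(String url) {
        HttpURLConnection conn = null;
        try {
            conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
            conn.setRequestMethod("GET");
            conn.setConnectTimeout(500); // ms; short, so tracking isn't stalled
            conn.setReadTimeout(500);
            return conn.getResponseCode(); // e.g. 200 if the call was accepted
        } catch (IOException e) {
            return -1; // MRL not running, wrong port, timeout, etc.
        } finally {
            if (conn != null) conn.disconnect();
        }
    }

    public static void main(String[] args) {
        // Hypothetical URL: move a servo service named "servo" to 20 degrees.
        int status = get("http://127.0.0.1:8888/api/service/servo/moveTo/20");
        System.out.println(status == -1 ? "MRL not reachable" : "HTTP " + status);
    }
}
```

Returning -1 instead of throwing lets a draw() loop keep running when MRL is down.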

Thanks, Grog, this worked great :) Now the problem is how to put the int value X into that path (X instead of 20).

How can I insert a variable into the string at that point?

Just figured out how to insert the X coordinate into that string.
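In Java/Processing there are two common ways to put an int into a string: plain concatenation (the int is converted to text automatically) or `String.format` with a `%d` placeholder. A small sketch; `PREFIX` is a hypothetical URL, so swap in the one that worked with the fixed value 20:

```java
import java.util.Locale;

// Sketch: building the REST URL with a variable angle.
// PREFIX is a hypothetical path, not MRL's documented API.
class UrlBuilder {
    static final String PREFIX = "http://127.0.0.1:8888/api/service/servo/moveTo/";

    // 1) Concatenation: "+" converts the int to its decimal text.
    static String byConcat(int x) {
        return PREFIX + x;
    }

    // 2) String.format with %d; Locale.ROOT keeps the digits plain ASCII.
    static String byFormat(int x) {
        return String.format(Locale.ROOT, "%s%d", PREFIX, x);
    }

    public static void main(String[] args) {
        System.out.println(byConcat(20)); // ...moveTo/20
        System.out.println(byFormat(20)); // same string
    }
}
```

Concatenation is the simplest choice here; `String.format` pays off when several values (for example x and y) go into one URL.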

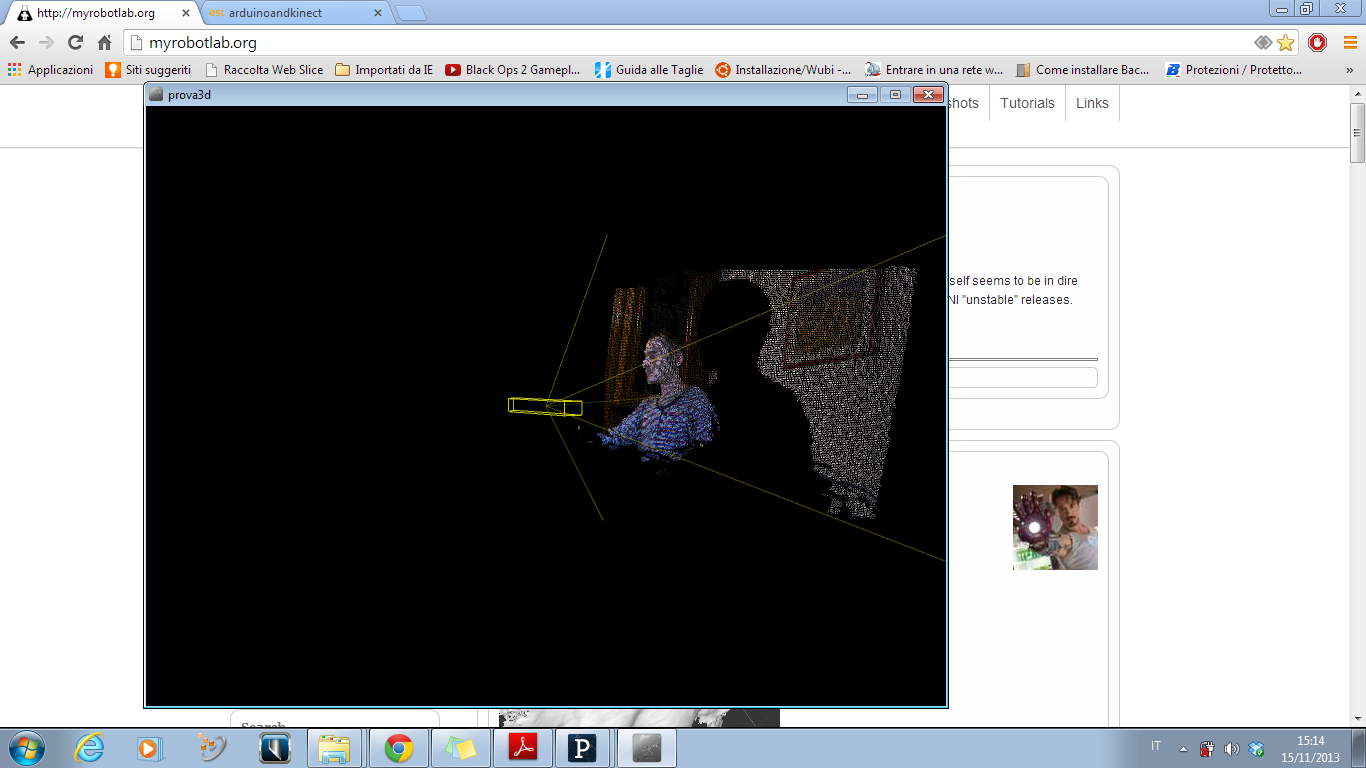

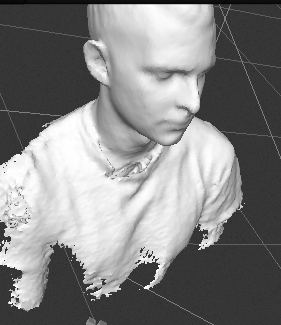

This is the Processing code I'm using. Grog suggested getting rid of the serial communication and using a REST API call to send the servos the Servo.write(X) command, and it seems to work quite well.

```java
String host = "127.0.0.1";
```
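Since the Kinect delivers roughly 30 frames per second, calling the REST API on every frame sends many identical or near-identical commands. One way to cut that traffic, sketched under the assumption of a 2-degree deadband (an arbitrary value to tune), is to forward an angle only when it has changed enough. The `IntConsumer` stands in for whatever performs the actual REST call:

```java
import java.util.function.IntConsumer;

// Sketch: forward a servo angle only when it differs from the last one sent
// by at least `deadband` degrees. Filters repeats and small tracking jitter.
class ServoCommandFilter {
    private final IntConsumer send;           // e.g. angle -> HTTP GET to MRL
    private final int deadband;               // minimum change worth sending
    private int lastSent = Integer.MIN_VALUE; // sentinel: nothing sent yet

    ServoCommandFilter(IntConsumer send, int deadband) {
        this.send = send;
        this.deadband = deadband;
    }

    // Returns true if the angle was forwarded to the sender.
    boolean update(int angle) {
        if (lastSent != Integer.MIN_VALUE && Math.abs(angle - lastSent) < deadband) {
            return false;
        }
        lastSent = angle;
        send.accept(angle);
        return true;
    }

    public static void main(String[] args) {
        ServoCommandFilter pan = new ServoCommandFilter(
                a -> System.out.println("send " + a), 2);
        for (int a : new int[] {90, 90, 91, 95, 95, 120}) {
            pan.update(a); // prints: send 90, send 95, send 120
        }
    }
}
```

A larger deadband means fewer requests but coarser servo motion.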

The Robot Trainer !

Nice video, Ale!

I was really surprised at the speed, I thought the communication over the REST API would be slow.

When the system pauses, is it because the kinect has lost track?

Excited to see you grab and move a block without touching anything; you'll need a gripper, though...

Great work!