Logitech C600 webcam - 2 servos in a pan/tilt kit - and a $16 BBB Arduino clone... not pretty, but it works!

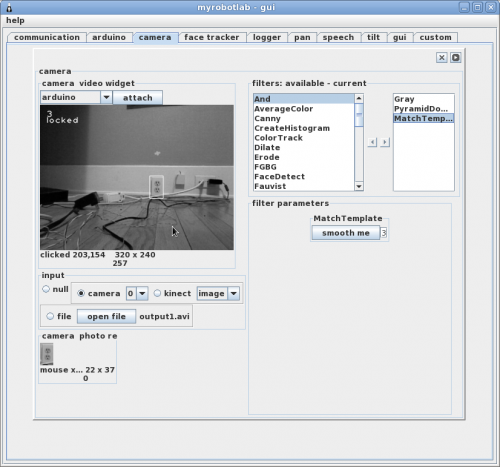

Gray filter applied - template matching works on a single Color Of Interest (COI) at a time

PyramidDown filter applied - scales from 640 x 480 down to 320 x 240

Matching Template filter applied - with a template of the selected power socket region

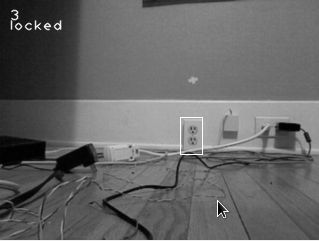

Target is "locked" and centered within 3% deviation - BooYa!

320 x 240 view of the locked image

Template matching is now available in MRL (MyRobotLab).

Template matching is the process of locating a small sub-image (the template) within a larger image. As an exercise I chose a wall socket, since finding one could be the goal for a self-charging robot.
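To make the idea concrete, here is a minimal brute-force sketch in plain Python: slide the template over every position in the image and score each window with normalized cross-correlation (NCC), which ranges from -1 to 1, where 1 is a perfect match. This is an illustration only; OpenCV's `matchTemplate` offers this kind of score (e.g. `TM_CCOEFF_NORMED`) alongside other methods, and nothing here claims which method MRL's filter uses. The function names and the tiny test image are hypothetical.

```python
import math


def ncc(window, template):
    """Normalized cross-correlation of two equal-sized 2D lists, in [-1, 1]."""
    a = [v for row in window for v in row]
    b = [v for row in template for v in row]
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # flat patch: correlation is undefined, treat as no match
    return sum(x * y for x, y in zip(da, db)) / denom


def match_template(image, template):
    """Slide the template over every position; return (best_score, (x, y)) of its top-left corner."""
    th, tw = len(template), len(template[0])
    ih, iw = len(image), len(image[0])
    best = (-2.0, (0, 0))  # below the lowest possible NCC score
    for y in range(ih - th + 1):
        for x in range(iw - tw + 1):
            window = [row[x:x + tw] for row in image[y:y + th]]
            score = ncc(window, template)
            if score > best[0]:
                best = (score, (x, y))
    return best


# Usage: find a 2x2 diagonal pattern hidden in a small grayscale "image".
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 1, 0],
    [0, 0, 1, 9, 0],
    [0, 0, 0, 0, 0],
]
template = [[9, 1], [1, 9]]
score, (x, y) = match_template(image, template)
print(score, x, y)
```

The brute-force loop costs roughly (image area x template area) operations per frame, which is one reason to shrink the frame first (as the PyramidDown step does) before matching.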

When the target is locked and centered, an event fires. If this were a mobile platform and the goal was to mate with the socket, the next behavior would be to move closer to the socket while avoiding obstacles. Since this is not a mobile platform, I have chosen to send the event to a Text To Speech service with the appropriate verbiage.
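The event wiring described above follows a publish/subscribe pattern: the tracker does not know who is listening, it just publishes, and the speech service subscribes. The sketch below is hypothetical (the `Tracker` and `FakeSpeech` classes are illustrative stand-ins, not MRL's API) and assumes the event should fire once on the transition into "locked and centered" rather than on every frame.

```python
from typing import Callable, List


class Tracker:
    """Hypothetical tracker that publishes an event when the target becomes locked."""

    def __init__(self) -> None:
        self.listeners: List[Callable[[str], None]] = []
        self.locked = False

    def add_listener(self, callback: Callable[[str], None]) -> None:
        self.listeners.append(callback)

    def update(self, centered: bool) -> None:
        # Fire only on the transition into the locked state, not on every frame,
        # so the speech service is not flooded with repeated messages.
        if centered and not self.locked:
            for callback in self.listeners:
                callback("I got my num num")
        self.locked = centered


class FakeSpeech:
    """Stand-in for a Text To Speech service: records what it was asked to say."""

    def __init__(self) -> None:
        self.spoken: List[str] = []

    def speak(self, text: str) -> None:
        self.spoken.append(text)


tracker = Tracker()
tts = FakeSpeech()
tracker.add_listener(tts.speak)
for centered in [False, True, True, False, True]:  # one entry per video frame
    tracker.update(centered)
print(tts.spoken)
```

Swapping `tts.speak` for a "drive toward the socket" behavior is the kind of change the decoupling is meant to make easy on a mobile platform.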

A template can be defined programmatically with coordinates, or selected through the video feed. To select a new template, highlight the Matching Template filter, then click the top-left and bottom-right corners of the new template's rectangle. The template image will then appear in the Photo Reel section of the OpenCV GUI.
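Selecting a template by two clicks boils down to cropping a rectangle out of the current frame. A minimal sketch, assuming the frame is a 2D list of pixel rows, the clicks are `(x, y)` pixel coordinates, and the bottom-right corner is inclusive (all assumptions; `template_from_clicks` is a hypothetical helper, not part of MRL):

```python
def template_from_clicks(frame, p1, p2):
    """Crop a template from a frame (2D list of rows) given two corner clicks.

    The corners may arrive in any order; they are normalized to
    top-left / bottom-right first. The bottom-right corner is inclusive.
    """
    (x1, y1), (x2, y2) = p1, p2
    left, right = min(x1, x2), max(x1, x2)
    top, bottom = min(y1, y2), max(y1, y2)
    return [row[left:right + 1] for row in frame[top:bottom + 1]]


# Usage: a 5x4 frame whose pixel value encodes its position (row * 10 + column).
frame = [[r * 10 + c for c in range(5)] for r in range(4)]
tpl = template_from_clicks(frame, (3, 2), (1, 1))  # clicked bottom-right first
print(tpl)
```

Normalizing the corners means a user who drags "backwards" still gets a valid rectangle.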

Currently, I am using the Face Tracking service in MRL. The Face Tracking service will soon be decomposed into a more generalized Tracking service, which can track a target using any appropriate sensor data. Previously I found tracking problematic: the pan/tilt platform would bounce back and forth and overcompensate (overshoot and oscillation). The lag incurred by video processing makes tracking difficult, because each correction is based on where the target was a few frames ago. To compensate for this, I recently integrated a PID controller into the Tracking service, and I have been very pleased with the results. The tracking bounces around much less, although there is still room for improvement.
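Why lag causes overcompensation can be seen in a toy model (not MRL's servo model): each step the controller moves the servo by `kp` times the error, but the error is measured from a position that is `delay` steps old. With no delay, the position creeps up to the target; with a 2-step delay, the same gain sails past it because the controller keeps pushing on stale information.

```python
def simulate(kp, delay, target=100.0, steps=20):
    """Toy model of a pan servo chasing a target with a lagging camera.

    Each step the controller sees the position from `delay` steps ago
    (the video-processing lag) and moves by kp * (target - seen_position).
    Returns the list of positions, starting at 0.
    """
    positions = [0.0]
    for t in range(steps):
        seen = positions[max(0, t - delay)]  # stale measurement
        positions.append(positions[t] + kp * (target - seen))
    return positions


no_lag = simulate(kp=0.9, delay=0)
lagged = simulate(kp=0.9, delay=2)
print(max(no_lag), max(lagged))
```

In this model the no-lag run never exceeds the target, while the lagged run overshoots on the second step; that back-and-forth is the bouncing described above.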

PID (proportional-integral-derivative) control is a method (and an art form) for correcting error in complex feedback systems. A set of values must first be chosen (tuned) for the specific system. There are 3 major values:

- Kp = Proportional constant - weights the present error

- Ki = Integral constant - weights the accumulation of past errors

- Kd = Derivative constant - weights the rate of change of the error, in effect predicting future errors
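The three terms combine into one correction, output = Kp * error + Ki * (sum of error * dt) + Kd * (change in error / dt). Below is a minimal discrete PID sketch (a generic textbook form, not MRL's implementation); the example gains and the 160-pixel setpoint (horizontal center of a 320 x 240 frame) are illustrative assumptions.

```python
class PID:
    """Minimal discrete PID controller (a sketch, not MRL's implementation)."""

    def __init__(self, kp, ki, kd, setpoint=0.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.setpoint = setpoint
        self.integral = 0.0     # running sum of error * dt (past errors)
        self.prev_error = None  # last error, for the derivative term

    def update(self, measurement, dt):
        error = self.setpoint - measurement              # present error
        self.integral += error * dt                      # accumulate past error
        if self.prev_error is None:
            derivative = 0.0                             # no history on first call
        else:
            derivative = (error - self.prev_error) / dt  # rate of change of error
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Usage (illustrative gains): keep the target centered horizontally in a 320-wide frame.
pan = PID(kp=0.05, ki=0.0, kd=0.01, setpoint=160)
correction = pan.update(measurement=200, dt=1 / 30)  # target seen at x = 200
print(correction)
```

The sign of the output and how it maps to servo degrees depend on how the servo is mounted, so both are system-specific. Clamping the integral (anti-windup) is a common refinement not shown here.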

The video shows the initial setup. This involves connecting an Arduino to a COM port, then connecting 2 servos to the Arduino (1 for pan and 1 for tilt). After this is done, I begin selecting different templates to match as the test continues. The template match value in the upper left corner represents the quality of the match.

The states that can occur:

- "I found my num num" - target acquired for the first time

- "I got my num num" - locked and centered on the target

- "I wan't dat" - tracking toward the target, but not yet centered

- "boo who who - where is my num num?" - tracking lost
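The four states can be derived from each frame's match result plus whether the target was tracked in the previous frame. A minimal sketch; the function name, its parameters, and the 3% tolerance (borrowed from the "centered within 3% deviation" caption) are assumptions, not MRL's code.

```python
def tracking_state(found, was_tracking, x, y, width, height, tolerance=0.03):
    """Map one frame's match result to one of the four spoken states.

    found:        whether the template matched in this frame
    was_tracking: whether the target was tracked in the previous frame
    x, y:         matched target center, in pixels
    tolerance:    allowed offset from frame center, as a fraction of frame size
    Returns the phrase to speak, or None when nothing has changed worth saying.
    """
    if not found:
        return "boo who who - where is my num num?" if was_tracking else None
    if not was_tracking:
        return "I found my num num"
    dx = abs(x - width / 2) / width    # horizontal deviation as a fraction
    dy = abs(y - height / 2) / height  # vertical deviation as a fraction
    if dx <= tolerance and dy <= tolerance:
        return "I got my num num"
    return "I wan't dat"


print(tracking_state(True, True, 250, 120, 320, 240))
```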

More to Come

In the video you can see that when I switch off the lights, the lock is lost. Template matching is sensitive to changes in scale, rotation, and lighting. Haar object detection is more robust and less sensitive to scale and lighting changes. The next step will be to take the template and proceed with Haar training.
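A toy comparison with made-up pixel values illustrates the lighting point. A raw sum-of-squared-differences (SSD) score changes as soon as the whole scene is uniformly dimmed, while a normalized cross-correlation (NCC) score ignores a uniform gain and offset. Neither survives the lights going out entirely, because a black patch has no contrast left to correlate. Which score MRL's filter uses is not stated here; this only shows why raw pixel comparison is fragile.

```python
import math


def ssd(a, b):
    """Sum of squared differences between two flattened patches (0 = identical)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def ncc(a, b):
    """Normalized cross-correlation of two flattened patches, in [-1, 1]."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / denom if denom else 0.0


template = [10, 50, 90, 50]
dimmed = [0.5 * v + 5 for v in template]  # same scene, uniformly darker (toy model)
lights_off = [0.0, 0.0, 0.0, 0.0]         # no contrast left at all

print(ssd(template, dimmed), ncc(template, dimmed), ncc(template, lights_off))
```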

Kinect & Bag Of Words association - http://en.wikipedia.org/wiki/Bag_of_words_model_in_computer_vision