I'm finding that MarySpeech takes a while to start up on a Raspberry Pi - at least a minute before it starts working. Also sometimes the speech is broken up if other things are going on like setting digital pins using an Arduino and the MRL service.

I also tried to install festival and use LocalSpeech, but that is just silent, and I'm not sure what I've missed. Does anyone have any tips, or anything else that's working?

thanks

Slow Mary Speech

I seem to recall that Mary Speech took a while to start when I first set it up, originally on a Mac Mini circa 2012. If memory serves, it now runs on the RaspPi in Junior's head, and I see about the same latency there as I had on the Mac Mini, so I never thought much of it. Sounds like a great reason to fire up Junior today and see what he has to say.

Ahoy XRobots, MarySpeech "is"

Ahoy XRobots,

MarySpeech "is" heavy because it's a rather good, fully implemented Java speech synthesis system. The delay you notice is probably time spent loading data files.

The advantages of MarySpeech are :

The downside is it's a bit heavy on memory & processing.

LocalSpeech looks like it "wants" to implement calls to festival .. but it's not there yet.

I found this - https://elinux.org/RPi_Text_to_Speech_(Speech_Synthesis)

It has references to festival and several others.

I would be glad to finish the implementation of LocalSpeech to one of these speech services, but it would be helpful if you gave us your preference (espeak, festival, cepstral)

I'm almost positive the Google one does not work, because Google (this service being closed source) has broken the interface.

We are also looking into WaveNet neural-network-generated speech, but that is further down the roadmap.

Hey, thanks that would be

Hey, thanks that would be great,

Frankly anything that works and is lightweight would be ok, I don't really care what it sounds like but something easy to install and open source would be best I guess.

Thanks again

Ahoy XRobots .. it's Worky

Ahoy XRobots .. it's Worky !

It took me longer than I thought (the delay had to do with the pipe for festival commands and more minutiae about calling a Linux executable from Java with lots of parameters).

I tested this on my raspi 1, and I thought I'd tell you some of my process which might be useful to you and others.

I never use the raspi X-windows desktop. I'm just too lazy, and figure all the processing & memory needed to support a window manager and desktop are better used to do more "roboty" things.

I start with an SD card and a fresh image of Raspbian.

The images used to have ssh enabled, but now they don't. However, I found a little gem on the forums.

If you write a file named "ssh" in the root of the SD card, ssh will be enabled when you put the card in the pi.
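The "ssh" file trick can also be scripted. Here is a minimal sketch, assuming the SD card is already mounted on the machine you are writing it from; the helper name `enable_ssh` and the mount point you pass to it are hypothetical and depend on your system.

```python
from pathlib import Path


def enable_ssh(sd_root):
    """Create an empty file named 'ssh' in the root of the mounted SD card.

    sd_root is wherever your system mounted the card (an assumption,
    it differs between machines).
    """
    marker = Path(sd_root) / "ssh"
    marker.touch(exist_ok=True)  # only the file name matters, it can stay empty
    return marker
```

Calling `enable_ssh()` with the card's mount point before ejecting it has the same effect as creating the file by hand.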

This saves me the hassle of finding an hdmi cable, keyboard, mouse, and all that nonsense. Just plug it into the network, do a scan - find it and ssh into it.

If this is a brand new raspi, I usually make a directory /opt/mrl, download the latest Nixie build on the develop branch, and fire it up with an install

Nixie installs are a big improvement over previous versions: the initial install takes a considerable amount of time, but upgrades are simple and very quick. Nixie builds a local repo in a global .ivy directory which other installations can share. There is the option to selectively choose which parts to install, but to keep it simple, -install will install everything. After issuing this command it's usually a good time to leave and enjoy a nice walk with your favorite beverage (or 2).

LocalSpeech doesn't have any dependencies fulfilled by -install, but it's nice to install everything just in case you use another service which does have dependencies.

LocalSpeech for Linux does depend on festival being installed - this is certainly easy

After festival is installed, you can start an instance of mrl up with this command

The -service {service name} {service type} {service name} {service type} ... flag allows you to decide which services you want to start immediately. In this case I only want the LocalSpeech service to test.

After a little time and a bunch of gobbledygook on the screen, it will stop.

At this point (with a little luck) you can type ls

In Linux this will list your files. In MRL it will list your services.

We see cli (the service we are currently typing into), runtime, security, speech & speech.audioFile are all running. So, let's make the speech service say something.

The notation is pretty easy if you know the functions of your service. All speech synthesis services have a "speak" function which takes a string. So if you type this.

The speech should come out of the RasPi. You can list functions of a service by typing

and you can list the data of a service by leaving off the end / . e.g.

It's a potentially powerful mechanism to inspect what a service can do.

As it stands we don't currently cache the sound in a file, which would help future speed, but someone might look into it as an enhancement. There are also several other native Linux tts applications which might be of interest to "Borg" into mrl.

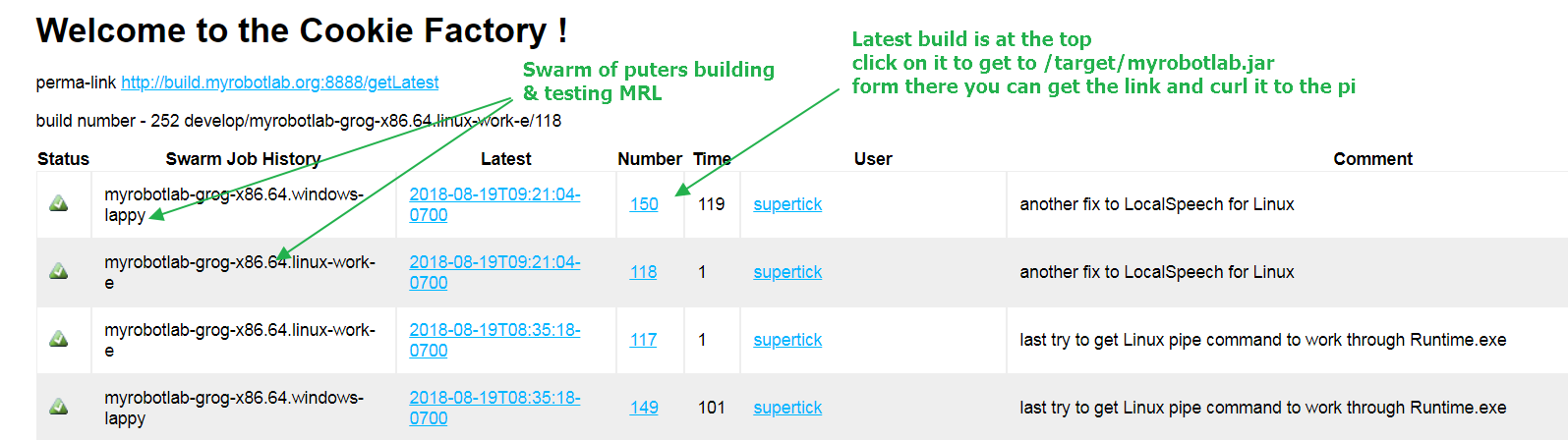

You can find MRL Nixie builds here - http://build.myrobotlab.org:8888/

I usually use curl to plop the latest one on the raspi ...

From the pi

BooYa .. upgraded

Wow thanks for the effort on

Wow thanks for the effort on this one - I'll give it a go later this week.

Thanks again

I downloaded the latest

I downloaded the latest version and updated all the services through the GUI. The speech works, but speakBlocking doesn't seem to work so it speaks over itself.

    mouth = Runtime.start('mouth', 'LocalSpeech')
    mouth.speakBlocking(u"robotty robot")
    mouth.speakBlocking(u"hello hello hello")
    mouth.speakBlocking(u"12345")

This causes it to say all the things at once instead of waiting for the last one to finish like Mary Speech does.

---------------------------------------

In addition to this, my code seems to have stopped working that worked before. Essentially it ignores the if and elif statements towards the end and doesn't do any of the actions. I can see it recognising what I say and printing it to the terminal, but it doesn't speak or move any of the motors. I tried both MarySpeech and then I modified the code for LocalSpeech but it's the same for both.

Occasionally I get a 'NullPointerException Null' in red at the bottom, but it doesn't refer to any particular line of code.

Code as follows - this is a robot with DC motors for the wheels and head+arm servos. All those things are connected to an Arduino running the MRL Arduino code. It all worked fine before I updated to the latest version to make LocalSpeech work.

---------------------------------------

One step forward Two back

One step forward Two back ?

Doh !

Ok, so I have not yet implemented speakBlocking for LocalSpeech... I can look into that.

In regards to the motor stuff, I'll look into that immediately, because I believe it's the last major "feature" in Nixie. The Motors have been refactored significantly, and apparently we need to look into what happened with MotorPwm & Arduino (I have those parts, and can test here).
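To illustrate why non-blocking calls talk over each other, here is a minimal sketch of the difference. It is not the LocalSpeech implementation (that is Java); the function names are hypothetical, and it assumes festival is installed and reads the text to speak from standard input when run as `festival --tts`.

```python
import subprocess


def speak(text, cmd=("festival", "--tts")):
    """Start the synthesizer and return at once; a second call can overlap."""
    proc = subprocess.Popen(list(cmd), stdin=subprocess.PIPE)
    proc.stdin.write(text.encode("utf-8"))
    proc.stdin.close()
    return proc


def speak_blocking(text, cmd=("festival", "--tts")):
    """Run the synthesizer and wait for it to exit before returning."""
    result = subprocess.run(list(cmd), input=text.encode("utf-8"))
    return result.returncode
```

Three `speak()` calls in a row start three processes at nearly the same time, while three `speak_blocking()` calls run one after the other.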

For future collaboration I'd recommend maintaining your code in github. You can work on it in your own repo, or I can add you as a collaborator to the MRL team if you send me your github user.

Many people maintain their Python scripts in our pyrobotlab repo here

https://github.com/MyRobotLab/pyrobotlab/tree/master/home

And I'd like to introduce you to the noWorky - it sends myrobotlab.log to the website, which is sometimes very useful in debugging..

http://myrobotlab.org/content/helpful-myrobotlab-tips-and-tricks-0#noWorky

ok, 1. problem:

[edit: well, seems like I took too long & GroG was faster ... : ) ]

ok, 1. problem: LocalSpeech.speakBlocking(...):

From looking at the source, it seems logical that this is not working correctly.

In contrast to other speech solutions, LocalSpeech on Linux currently pushes the audio out directly; it doesn't cache it to a file and play the file afterwards (ref). It looks like festival comes with a script, text2wave, that can write the output to a file (using the -o flag). If that doesn't work, we could always just write it to a file ourselves (e.g. using lame).
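A rough sketch of the text2wave idea, written in Python rather than the Java that LocalSpeech uses. It assumes text2wave reads the text from standard input when no input file is given; the helper names and the `run` parameter are hypothetical, the latter only there so the call can be swapped out for testing.

```python
import subprocess


def text2wave_command(wav_path):
    """Build the text2wave call that writes its output to wav_path."""
    return ["text2wave", "-o", str(wav_path)]


def synthesize_to_file(text, wav_path, run=subprocess.run):
    """Feed text to text2wave on stdin and wait until the file is written."""
    run(text2wave_command(wav_path), input=text.encode("utf-8"), check=True)
    return wav_path
```

Because the call waits for text2wave to finish, the resulting file could then be played in a blocking way, which would also address the speakBlocking problem.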

If this problem is still there in like 5 days, I will take a crack at it.

2. problem: script noWorky:

Unfortunately, your pasted script was stripped of any indentation it had, so I can't really help there.

But you might want to send a "noWorky", this will upload your log-file including your script and any errors you might have. This way one of us can take a look and help you in detail. (-> Here's how to do it.)

Ok, I sent a No Worky. I

Ok, I sent a No Worky. I don't have write access to the Github, can you give me access please - I'm: https://github.com/xrobots

The script did work fine until I tried the latest Nixie to make local speech work. I tried going back to the old install (in a separate folder) and that worked again - however I updated that install to see if I could get local speech to work there, through 'runtime - system - install all', and now that install is broken also.

I got the original Nixie jar file from approx one month ago, put that in a separate folder and installed all the services, but now that doesn't work either, so I don't currently have a working version.

All I get is 'NullPointerException Null' whenever it tries to do anything. It's not just the motors that don't work, it's everything that's supposed to happen when it hears speech (speaking using Mary Speech, setting Arduino digital and analog pins), so I think there's a bug in the IDE actually interpreting the code or something.

You're still starting MaryTTS

You're still starting MaryTTS and you're running out of memory ... once you run out of memory ... nothing is happy :(

Step 1 - look for zombies eating ~~brains~~ memory ... kill them

http://myrobotlab.org/content/helpful-myrobotlab-tips-and-tricks-0#zombie

usually this can be done with the command

Step 2 - either use LocalSpeech as a stop-gap (not the best one, because of the non-blocking issue, which we'll fix) .. or try to give the process more memory. I think you can give the JVM more memory by starting mrl with the following command line.

The values may need finessing, and you can usually verify it's working by running the top command

Well it was working with Mary

Well it was working with Mary Speech, here's a video of it (yes it's another open source robot project):

https://www.youtube.com/watch?v=7EqQhqgECak - The speech script stuff starts at 0:40

As you can see, I've also had the OpenCV stuff running on it with no issues.

-------------------------------

It's a bit laggy sometimes but works fine, so that's why I asked for LocalSpeech to be implemented. However, that is where all my issues started.

I've submitted another 'No Worky' for the example using LocalSpeech instead. It speaks on startup as it should, and it will respond to one command (you can see the digital pins turn on in the Java debug window, and they do turn the Arduino pins high). However, after that it no longer does anything in the if/elif statements at the bottom. Occasionally I get NullPointerException errors, but mostly it's just unresponsive. It doesn't put any more text into the LocalSpeech tab either, so it's as if my if/elifs are just not there, although it prints the spoken commands to the terminal OK.

But now I cannot make Mary Speech work again either as I detailed above, even by going back to the one month old version of the jar file. Is there some way to pull down older versions of all the services?

Oohhw, I already fell in love

Oohhw, I already fell in love with your robot : )

rel. MarySpeech-noWorky:

I suspect it might be using its already cached files; that way it could play the speech even though the main marytts instance failed to start or crashed.

To be clear: running out of memory is still a bad thing & can lead to all sorts of undefined behaviour.

rel. LocalSpeech-noWorky:

This log file actually contains two types of errors:

1. some funky error related to Mappers. @GroG, would you mind taking a look at this? : )

2. AbstractSpeech complaining that LocalSpeech failed to generate the audio data (probably because LocalSpeech currently doesn't support caching?)

There is (surprisingly) not a single NullPointerException recorded in this log file.

btw: it's really bad practice to run a script a second time; this will (most likely) result in some weird errors. Redefining functions and such should be fine, but starting a service with the same name as an already existing service, for example, will probably fail (maybe silently; this may have changed).

Using an 'older' .jar should give you everything as it was back then, but I would want to rely on others to confirm this.

So I just tried commenting out

So I just tried commenting out all the MarySpeech/LocalSpeech stuff and running the script. It will still hear what I say and do the first thing I ask for, but then the subsequent actions in the if/elifs fail with '[WARN] msg --> runtime.getenvironments...'

This is with the old Nixie file from a month ago, but having updated the services. It's the same with the new one, so I don't have anything else to work with now.

I sent two 'No Workys'. The second one was a totally clean boot of the Pi with no other messing around (I can't remember about the first one).

Apparently, it's recognizing

Apparently, it's recognizing everything except the first phrase with a space in front of it.

This looks like a bug, but maybe you could add "data = data.strip()" as the first thing in your function "def onText(data):" for testing? This should remove any whitespace at the front and at the end.
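To show why a single leading space matters, here is a minimal sketch of such a handler. The command phrases and return values are made up for illustration, since the original script is not shown here; only the `data.strip()` line is the suggested change.

```python
def onText(data):
    # Drop whitespace at both ends, so " lights on" equals "lights on".
    data = data.strip()
    if data == "lights on":        # hypothetical command phrase
        return "turning lights on"
    elif data == "lights off":     # hypothetical command phrase
        return "turning lights off"
    return None                    # nothing matched
```

Without the strip() call, " lights on" does not equal "lights on", so every if/elif comparison fails without an error, which matches a script that prints what it heard but then does nothing.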

(Both noWorkys show the same behavior.)

Ok that works. Everything

Ok that works. Everything works again including LocalSpeech!

Not sure where that bug came from or how it got back into the previous jar file, unless it came down when I did 'update all'. I guess a newer version of the WebKit stuff could have introduced the bug.

Thanks for spotting it!

This will need to be further

This will need to be further tested, looked into & maybe fixed.

But I'm happy it's finally working.

btw: looks like GroG just fixed caching for LocalSpeech (-> even faster for already generated speech texts!)

Thanks - just tried the

Thanks - just tried the latest version. It appears that the first time you have it say a phrase it doesn't say it, but the second time it does. From then on it's much more responsive, so I guess that means the first time it just generates the file and caches it.

Seems useable though.

I fixed this ... testing it

I fixed this ... testing it now ;)

Ok, fixed & verified .. The

Ok, fixed & verified ..

The first time it utters something new, there will be a delay as festival creates, plays, and caches the file.

Subsequent utterances of the same text will be fast.
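The caching behaviour can be sketched like this. This is not the actual MRL cache code; keying the file name on a SHA-256 hash of the text, and the `generate` callback that produces the audio, are assumptions made for the example.

```python
import hashlib
from pathlib import Path


def cached_wav_path(cache_dir, text):
    """Map a piece of text to a stable file name inside cache_dir."""
    digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
    return Path(cache_dir) / (digest + ".wav")


def get_or_create(cache_dir, text, generate):
    """Return the cached file, calling generate(text, path) only on a miss."""
    path = cached_wav_path(cache_dir, text)
    if not path.exists():
        path.parent.mkdir(parents=True, exist_ok=True)
        generate(text, path)  # slow step, only runs for new text
    return path
```

The slow synthesis step runs once per distinct text; repeating a phrase only costs a file lookup.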

MaVo mentioned in the shoutbox strange whitespace with WebKit ?

Are there other problems remaining regarding Motors ?

Heh, you want to give us the current list of issues ? ;)

Thanks, The only issue

Thanks. The only issue remaining is the whitespace.

The motors work now that the if/elifs work properly.

I have added a JS trim()

I have added a JS trim() statement to webkit in what "might" be the appropriate location.. and pushed the change.

I cannot test it currently, but will try to tonight .. if not distracted by squirrels