Next version 'Nixie' is coming soon!

The article below is a work in progress. It talks a bit about InMoov and MRL at a higher level, but it also has some commentary on reworking the HS 805 BB servo for continuous operation and extracting the potentiometer.

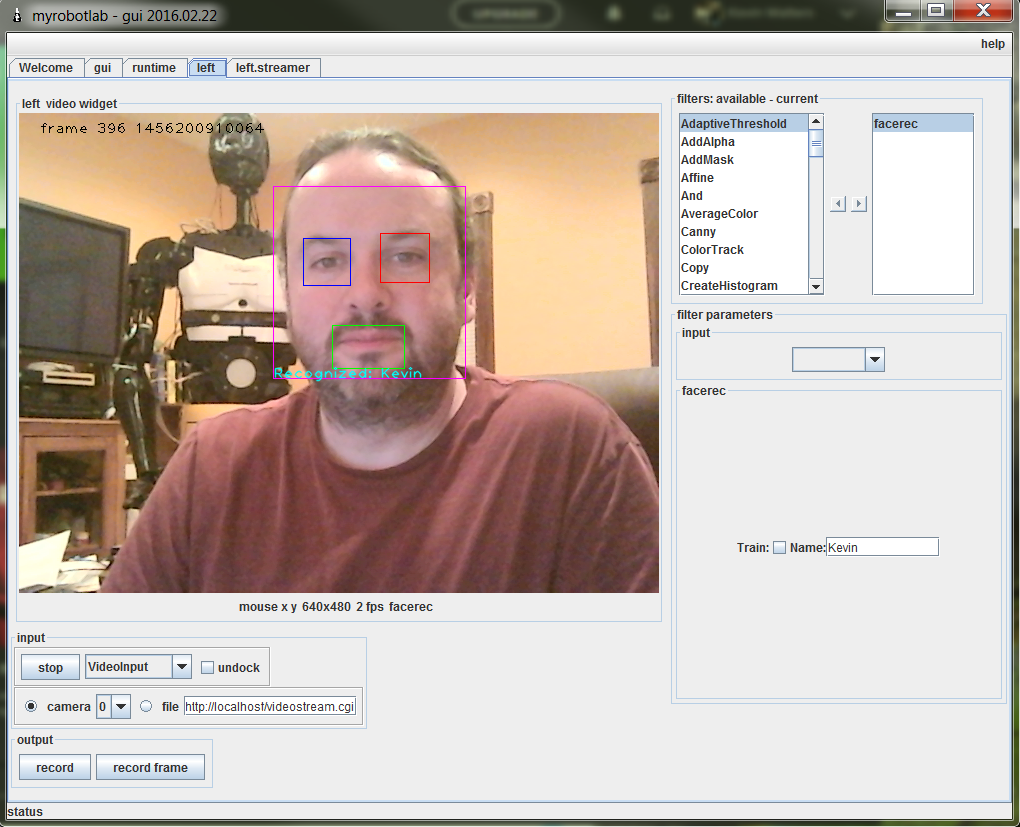

We're making slow but sure progress with face recognition...

Hi! Before I start coding, tell me whether this would be useful: custom touchscreen interaction with MRL (simple web views and a local media player API).

Somewhere in the Python script, on an event: play a fullscreen video / play an MP3 / open a picture / grab some web data / ...
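A minimal sketch of that event-to-action idea, assuming the actions are carried out by external programs. The event names, the `ACTIONS` table, and the player programs (`vlc`, `mpg123`, `xdg-open`) are all hypothetical placeholders, not part of MRL; swap in whatever is installed on the touchscreen machine.

```python
# Hypothetical event names mapped to command templates.
# The programs listed here are assumptions, not something MRL provides.
ACTIONS = {
    "play_video": ["vlc", "--fullscreen", "--play-and-exit", "{target}"],
    "play_mp3": ["mpg123", "{target}"],
    "open_picture": ["xdg-open", "{target}"],
}


def build_command(event, target):
    """Return the argument list for an event, or None if the event is unknown."""
    template = ACTIONS.get(event)
    if template is None:
        return None
    return [part.replace("{target}", target) for part in template]


def handle_event(event, target, run=None):
    """Build the command for an event and pass it to `run` if one is given.

    `run` is any callable that accepts an argument list,
    for example subprocess.Popen. Returns False for unknown events.
    """
    command = build_command(event, target)
    if command is None:
        return False
    if run is not None:
        run(command)
    return True
```

From the script, a call could look like `handle_event("play_video", "intro.mp4", run=subprocess.Popen)`. Passing an argument list rather than one shell string sidesteps quoting problems with file names that contain spaces.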

Maybe I can connect MRL to this external custom GUI with a Python HTML parser from htmllib?
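A rough sketch of the parsing side, assuming the custom GUI exposes its media as links in an HTML page (that layout is an assumption). Note that `htmllib` exists only in Python 2 and was removed in Python 3; the standard-library `HTMLParser` class is available in both, so the sketch uses it instead. `LinkCollector` and `extract_links` are illustrative names.

```python
try:
    from html.parser import HTMLParser  # Python 3
except ImportError:
    from HTMLParser import HTMLParser  # Python 2 (module name differs)


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag fed to the parser."""

    def __init__(self):
        HTMLParser.__init__(self)
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; tag names arrive lowercased.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html_text):
    """Return all link targets found in an HTML string, in document order."""
    parser = LinkCollector()
    parser.feed(html_text)
    parser.close()
    return parser.links
```

The returned list could then be matched against file extensions to decide whether to play a video, play an MP3, or open a picture.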

Or I have a cruder fallback if I can't do that because Python doesn't like me: os.system(cmd)

Output:

Hello everybody!

I have a problem with the jaw. I can't get the jaw to move when InMoov speaks.

This is my code, which I adapted from several people's scripts.

I now have most of the InMoov head and torso printed, and I can move each servo in turn by connecting it to a single channel of my Arduino. I am now ready to run the head service, but I want to add some features: an articulated neck, individual x control of the eyes, driving some LEDs, and maybe even tongue control. What's the best way to do this? Do I have to download and compile everything, or can I somehow overload the default service with my own?

Regards

Andrew

Nice Video \o/

Test rig: 4 IRs dangling from space

It's basically a Nintendo WiiMote camera, which can track 4 infrared LEDs/lasers, giving the XY coordinates and the size of each blob seen.
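As an illustration of how such readings might be used, here is a small sketch that averages the visible blobs into one target point and scales it to the range -1 to 1, which is a convenient form for steering a servo. The `Blob` tuple, the function names, and the 1024 x 768 coordinate range are assumptions for the example; check them against the actual sensor output.

```python
from collections import namedtuple

# One tracked point: XY position plus the reported blob size.
Blob = namedtuple("Blob", ["x", "y", "size"])

# Assumed coordinate range of the camera; adjust to the real sensor.
SENSOR_WIDTH = 1024
SENSOR_HEIGHT = 768


def centroid(blobs):
    """Average position of the visible blobs; None marks an unseen slot."""
    visible = [b for b in blobs if b is not None]
    if not visible:
        return None
    count = float(len(visible))
    return (sum(b.x for b in visible) / count,
            sum(b.y for b in visible) / count)


def normalized_offset(point):
    """Map a sensor coordinate to (-1..1, -1..1), with (0, 0) at the centre."""
    x, y = point
    return (2.0 * x / (SENSOR_WIDTH - 1) - 1.0,
            2.0 * y / (SENSOR_HEIGHT - 1) - 1.0)
```

With four slots, a reading such as `[Blob(0, 0, 3), None, Blob(1023, 767, 5), None]` averages to the centre of the assumed frame.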

Hi, I have followed the getting started procedure using Eclipse and downloaded the MyRobotLab Developer through git. Everything compiles and runs, but Speech (The Lips) does not appear in the Runtime options! How do I get this service to run in developer mode, and where are its sources?

Kind Regards

MapSoft