I thought I'd do a quick post to describe some of the updates and refactoring I did on AudioFile.

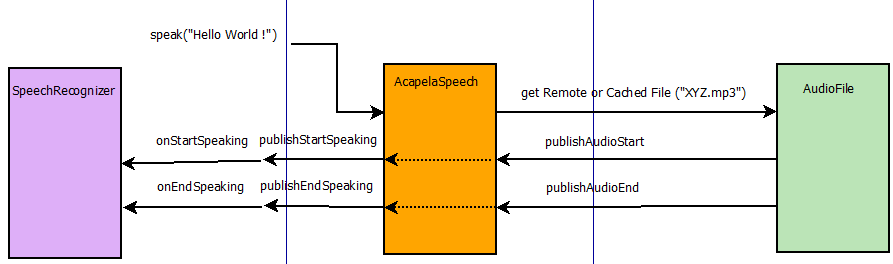

I believe that previously most of the speech synthesis services published events inaccurately, because they did not hook directly into AudioFile's file playback events.

Now the actual playing of a file (writing it to an output line) triggers an Audio Start event, which in turn triggers a Start Speaking event. The same happens with Audio End and End Speaking.

AudioFile can potentially play many files and send many events that have nothing to do with speaking, but AcapelaSpeech listens only for the events of its own files, so it publishes Start and End Speaking correctly.
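The event chain above can be modelled in a few lines of plain Python. This is a simplified, hypothetical sketch: the class and method names (`AudioFile`, `SpeechService`, `on_audio_start`, `on_audio_end`) are illustrative stand-ins, not the actual MyRobotLab API. It shows the filtering idea: the speech service hears every audio event but republishes only those for files it created itself.

```python
# Simplified, hypothetical model of the AudioFile -> speech event chain.
# These classes only illustrate the idea; they are not MyRobotLab classes.

class AudioFile:
    """Plays files and notifies every listener when a file starts and ends."""

    def __init__(self):
        self.listeners = []

    def add_listener(self, listener):
        self.listeners.append(listener)

    def play(self, filename):
        for listener in self.listeners:
            listener.on_audio_start(filename)   # "Audio Start"
        # ... writing the audio data to the output line would happen here ...
        for listener in self.listeners:
            listener.on_audio_end(filename)     # "Audio End"


class SpeechService:
    """Turns audio events into speaking events, but only for its own files."""

    def __init__(self, audio):
        self.audio = audio
        self.my_files = {}      # filename -> text that was spoken
        self.published = []     # events this service has published
        audio.add_listener(self)

    def speak(self, text):
        filename = "speech-%d.mp3" % len(self.my_files)  # hypothetical file name
        self.my_files[filename] = text
        self.audio.play(filename)

    def on_audio_start(self, filename):
        if filename in self.my_files:
            self.published.append(("startSpeaking", self.my_files[filename]))

    def on_audio_end(self, filename):
        if filename in self.my_files:
            self.published.append(("endSpeaking", self.my_files[filename]))


audio = AudioFile()
speech = SpeechService(audio)
audio.play("music.mp3")   # not one of the speech service's files: ignored
speech.speak("hello")
print(speech.published)   # [('startSpeaking', 'hello'), ('endSpeaking', 'hello')]
```

Because the speaking events are derived from the playback events rather than from the call to speak, they fire when the sound actually starts and stops.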

MouthControl

Hi

I changed MouthControl to use the StartSpeaking event (was it Saying before?). I hope I didn't break anything; if I did, please let me know. It is a bit confusing that MouthControl shows an AcapelaSpeech service that is never started. I used the script below to test. The jaw moves in sync with the sound.

It's also a bit confusing that it now has two methods with similar names.

setMouth sets the speech service. I created it a few days ago, based on "mouth" being the internal name for the speech service. Perhaps it should be renamed to setSpeech?

setmouth sets the min and max values for the jaw movement. It was there before, and it would probably be more descriptive if it were called something like setJawMinMax.

I didn't change it, since it's probably being used by other scripts and I prefer not to break anything.


Hi!

Thank you, MouthControl works with this part of the code. I just changed the pin because I use a Mega.

So we don't use this anymore? (It causes a crash because the port is busy.)

```python
i01.startMouthControl("/dev/ttyACM0")
```

Does anyone know how to set the jaw to a specific position after speaking? I tried this, but it doesn't work:

The idea is for the jaw to move between 70 and 75 while speaking and to rest at 40 at the end, without using mouth.setmouth(40,75).

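```python
from time import sleep  # sleep() is used below

mouth = Runtime.createAndStart("Mouth","MouthControl")
arduino = mouth.getArduino()
arduino.connect('/dev/ttyACM0')

# attach the jaw servo to pin 26
jaw = mouth.getJaw()
jaw.detach()
jaw.attach(arduino,26)

# jaw range while speaking (also becomes the servo's min/max)
mouth.setmouth(70,75)

speech = Runtime.createAndStart("Speech","AcapelaSpeech")
mouth.setMouth(speech)
speech.setVoice("MargauxSad")

speech.speakBlocking("hello")
jaw.moveTo(40)   # intended rest position, outside the 70..75 limits set above
sleep(3)
speech.speakBlocking("world")
```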

Mouth rest position

mouth.setmouth(70,75) sets the limits for the jaw movement, and also sets the min and max for the servo movement, so jaw.moveTo(40) cannot go below 70. I added a few lines to make your script work.
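A toy model of why the rest position is never reached, assuming that a move outside the servo's min/max is clamped to the nearest limit (the real servo might instead ignore the move; either way the jaw never reaches 40). `ClampedServo`, `set_min_max` and `move_to` are hypothetical names, not MyRobotLab's Servo class.

```python
# Hypothetical stand-in for a servo whose moves are clamped to [min, max].
# Not the MyRobotLab Servo class; it only illustrates the effect of the limits.

class ClampedServo:
    def __init__(self):
        self.min_pos, self.max_pos = 0, 180
        self.pos = 90

    def set_min_max(self, min_pos, max_pos):
        self.min_pos, self.max_pos = min_pos, max_pos

    def move_to(self, target):
        # Assumption: a target outside the limits is clamped to the nearest limit.
        self.pos = max(self.min_pos, min(self.max_pos, target))
        return self.pos


jaw = ClampedServo()
jaw.set_min_max(70, 75)   # what setmouth(70, 75) does to the servo limits
print(jaw.move_to(40))    # 70: the jaw stops at the lower limit
jaw.set_min_max(40, 75)   # widen the limits first...
print(jaw.move_to(40))    # 40: ...then the rest position is reachable
```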

I added a few sleeps because speakBlocking returns very quickly, before the mouth has stopped moving. It's a quick hack. A better way is to listen to the onEndSpeaking event in the script. I will make an example of that too.
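The event-driven alternative can be sketched without any hardware. `FakeSpeech` and `FakeJaw` below are hypothetical stand-ins; in a real MyRobotLab script the two handlers would be subscribed to the speech service's start and end speaking events, and the exact subscription call is not shown here. The positions 70, 75 and 40 are the values used in this thread, and the jaw limits are widened before moving to rest, mirroring the setmouth(40,75) call discussed here.

```python
# Sketch of the event-driven idea: close the mouth when the speech service
# reports it has finished, instead of sleeping for a guessed duration.
# FakeSpeech and FakeJaw are hypothetical stand-ins, not MyRobotLab classes.

REST = 40                      # jaw rest position
SPEAK_MIN, SPEAK_MAX = 70, 75  # jaw range while speaking

class FakeJaw:
    def __init__(self):
        self.min_pos, self.max_pos, self.pos = 0, 180, 90
    def set_min_max(self, lo, hi):
        self.min_pos, self.max_pos = lo, hi
    def move_to(self, target):
        # moves are limited to the current min/max
        self.pos = max(self.min_pos, min(self.max_pos, target))

class FakeSpeech:
    def __init__(self):
        self.start_callbacks, self.end_callbacks = [], []
    def on_start_speaking(self, cb):
        self.start_callbacks.append(cb)
    def on_end_speaking(self, cb):
        self.end_callbacks.append(cb)
    def speak(self, text):
        for cb in self.start_callbacks:
            cb(text)
        # ... audio would play here while the jaw moves between 70 and 75 ...
        for cb in self.end_callbacks:
            cb(text)

jaw = FakeJaw()
speech = FakeSpeech()
positions = []  # jaw position after each event, for illustration

def start_speaking(text):
    jaw.set_min_max(SPEAK_MIN, SPEAK_MAX)   # speaking range
    jaw.move_to(SPEAK_MIN)
    positions.append(jaw.pos)

def end_speaking(text):
    jaw.set_min_max(REST, SPEAK_MAX)        # widen limits so rest is reachable
    jaw.move_to(REST)
    positions.append(jaw.pos)

speech.on_start_speaking(start_speaking)
speech.on_end_speaking(end_speaking)

speech.speak("hello")
speech.speak("world")
print(positions)  # [70, 40, 70, 40]
```

No sleep duration has to be guessed: the mouth closes whenever the end event arrives, however long the sentence is.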


OK, to make it work I need to add jaw.attach(arduino,26) every time. It's better.

The problem is that we don't know how long the speech lasts, so sleep(X) has to be hard-coded.

It's not very, very clean :)

```python
from time import sleep  # sleep() is used below

mouth = Runtime.createAndStart("Mouth","MouthControl")
arduino = mouth.getArduino()
arduino.connect('/dev/ttyACM0')

jaw = mouth.getJaw()
jaw.detach()
jaw.attach(arduino,26)

# speaking range
mouth.setmouth(70,75)
jaw.moveTo(70)

speech = Runtime.createAndStart("Speech","AcapelaSpeech")
mouth.setMouth(speech)
speech.setVoice("MargauxSad")

speech.speakBlocking("hello hello hello hello hello hello hello")
sleep(5)   # hard-coded guess at how long the sentence takes

# widen the limits so the jaw can reach the rest position, then close it
mouth.setmouth(40,75)
jaw.attach(arduino,26)
jaw.moveTo(40)
sleep(5)

# back to the speaking range for the next sentence
mouth.setmouth(70,75)
jaw.attach(arduino,26)
jaw.moveTo(70)

speech.speakBlocking("world world world world world world")
sleep(4)

# rest position again
mouth.setmouth(40,75)
jaw.attach(arduino,26)
jaw.moveTo(40)
```

Not clean

I agree. I will make a new, cleaner example that listens to the StartSpeaking and EndSpeaking events to handle closing the mouth. But I need to be at home to test it.

Added a cleaner script

Hi

You can find a better script here. It listens to the EndSpeaking event:

https://github.com/MyRobotLab/pyrobotlab/blob/master/home/Mats/MouthCon…

Thanks!


Nice, moz4r! He's lip-syncing as well as Cher! :)

Hmm, so I think there are a few features missing...

I think speakBlocking is no longer working... we should fix that too.


Yeah, I think speakBlocking doesn't really block (I put some sleep() calls between sentences, but they run at unpredictable times).

Two or three sentences like this are OK, but too many cause MouthControl to desynchronize.

Jaw movement

Hi

Thanks for the video. Nice to see that it works.