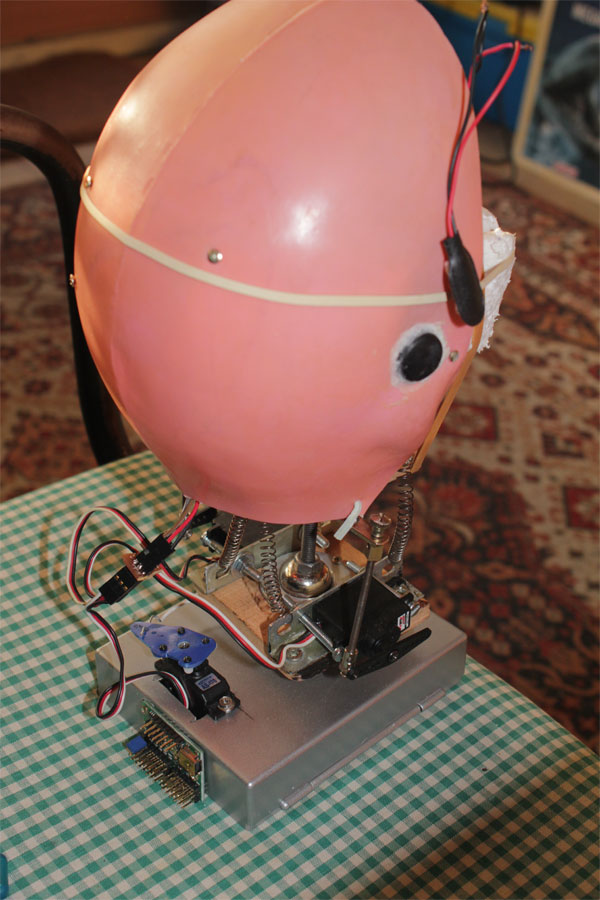

I am working on a new robotic head which should operate with MRL. It has a 3-DOF neck mechanism for realistic head movements, two sound-sensor ears and a two-eye mechanism for image processing. One eye is a webcam with 2 DOF (pan/tilt); the second eye is a PIR sensor that moves sideways together with the other eye. This post is just to announce my new project and to get some help from the MRL community. I am new to MRL and Python and working hard to learn them, but I have been doing robotics for years and am familiar with Arduinos.

This robotic head has two Arduino Pro Minis on it: one dedicated to MRL (running MRLComm.ino) and the other taking care of other jobs such as the neck movements and the ears. The ears find the direction a sound is coming from and turn the head that way, but I am planning to integrate the webcam mic for MRL sound services, such as responding to comments. What do you think? Any help will be appreciated...

[[Tracking.PIRTriggered.borsaci06.py]]

Great post, Borsaci!

I'd love to see a video of it in action; I'm still having difficulty figuring out how the servos move in relation to the head. I'd be very excited to see PID work with this. My experience with PID is that it works along a single axis, whereas you have a system in which a coordinate on one axis affects the coordinates on other axes. Complex! But I'm wondering if PID will work well regardless of the complexity, since it's always doing a relative error correction. Or maybe there are more efficient combinations of PID... Exciting!
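For reference, this is what "PID along a single axis" looks like in its simplest form, with one independent controller per axis. It is a minimal pure-Python sketch, not MRL's PID service; the gains, the 320x240 frame size and the `track_step` helper are hypothetical, and the sign of each correction depends on how the servos are mounted.

```python
class PID:
    """Minimal discrete PID controller for ONE axis (illustrative sketch, not MRL's PID service)."""

    def __init__(self, kp, ki, kd, out_min=-90.0, out_max=90.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first update

    def update(self, setpoint, measured, dt):
        """Return a clamped correction for one time step of length dt (seconds)."""
        error = setpoint - measured
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp so the command stays within the servo's usable range.
        return max(self.out_min, min(self.out_max, out))


# One independent controller per axis: each sees only its own error.
pan_pid = PID(kp=0.05, ki=0.0, kd=0.0)   # hypothetical gains
tilt_pid = PID(kp=0.05, ki=0.0, kd=0.0)


def track_step(face_x, face_y, dt=0.05, frame_w=320, frame_h=240):
    """Return (pan, tilt) corrections in degrees based on the face's offset from the frame center."""
    pan = pan_pid.update(frame_w / 2, face_x, dt)
    tilt = tilt_pid.update(frame_h / 2, face_y, dt)
    return pan, tilt
```

Because each controller only corrects its own error, coupling between axes shows up as a disturbance that the loops keep correcting; whether that is good enough for this neck is exactly the open question above.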

I'm also wondering how you do sound location. Is it two small mics and a differencing + trig algorithm?
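If it is the differencing + trig approach, the math could look like the sketch below: the difference in arrival time between the two ears gives the bearing through sin(angle) = c * dt / d. This is an assumption about the method, not borsaci's actual circuit or program; the 15 cm ear spacing and the `turn_direction` helper with its 10-degree deadband are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly room temperature


def sound_bearing(t_left, t_right, mic_spacing=0.15):
    """Estimate the horizontal bearing of a sound from its arrival times (seconds) at two ears.

    Uses the far-field approximation sin(angle) = c * dt / d, where d is the
    distance between the ears in metres (hypothetical default of 15 cm).
    Returns degrees: 0 = straight ahead, positive = toward the LEFT ear
    (left ear heard it first), negative = toward the right ear.
    """
    dt = t_right - t_left  # positive when the left ear triggers first
    ratio = SPEED_OF_SOUND * dt / mic_spacing
    ratio = max(-1.0, min(1.0, ratio))  # timing noise can push |ratio| past 1
    return math.degrees(math.asin(ratio))


def turn_direction(bearing, deadband=10.0):
    """Hypothetical helper: map a bearing to a head command, ignoring small angles."""
    if bearing > deadband:
        return "left"
    if bearing < -deadband:
        return "right"
    return "center"
```

With 15 cm spacing the largest possible time difference is about 0.15 / 343, roughly 0.44 ms, so the Arduino side would need microsecond timestamps (`micros()`) rather than `millis()` to resolve useful angles.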

MRL robotic head...

Thanks, GroG. I haven't integrated the neck movements into MRL yet; a dedicated Arduino takes care of them. The single back servo turns the head sideways on a roller-bearing turntable. The two side servos, one on each side, pull or push the neck for sideways and back-front motion. The trick is the ball joint just above the bearing in the middle of the turntable. No PIDs for now, just pre-programmed head movements or serial commands from the Arduino IDE ("look up", "look front-left", that kind of thing). Sound location is done with two sound sensors I made myself. I can post the circuit and the program for locating the direction of sound here if you want, or if anyone is interested.
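To picture how the three neck servos could combine, here is a sketch of a simple mixing scheme. It assumes the back servo maps directly to yaw and that the two side servos move together for back-front (pitch) and in opposite directions for sideways (roll) around the ball joint; the 90-degree center, the sign conventions and the pose table are all hypothetical, and a mirrored servo mount would flip one side's sign.

```python
CENTER = 90  # assumed neutral angle (degrees) for all three neck servos


def clamp(angle, lo=0, hi=180):
    """Keep a servo command inside the usual 0-180 degree range."""
    return max(lo, min(hi, angle))


def neck_servo_angles(yaw, pitch, roll):
    """Map a desired head pose (degrees) to (back, left, right) servo angles.

    Assumptions: the back servo drives yaw on the turntable directly
    (positive = turn left); both side servos move the same way for pitch
    (positive = look up) and opposite ways for roll around the ball joint.
    """
    back = clamp(CENTER + yaw)
    left = clamp(CENTER + pitch + roll)
    right = clamp(CENTER + pitch - roll)
    return back, left, right


# Hypothetical named poses in the spirit of "look up" / "look front-left".
POSES = {
    "look front": (0, 0, 0),
    "look up": (0, 20, 0),
    "look front-left": (30, 0, 0),
}


def pose_to_servos(name):
    """Look up a named pose and return its servo angles."""
    return neck_servo_angles(*POSES[name])
```

For example, `pose_to_servos("look front-left")` gives `(120, 90, 90)`: only the back servo moves.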

Holaaaa ;)

Hi, I like this project. Could you help me understand exactly what the code does, so that when there is a sound on the left side the head turns to the left, and vice versa? I want to do this for my project. Could you publish more information about the circuit? Thank you very much.

MRL Robotic head ears...

None of the scripts worky... :(

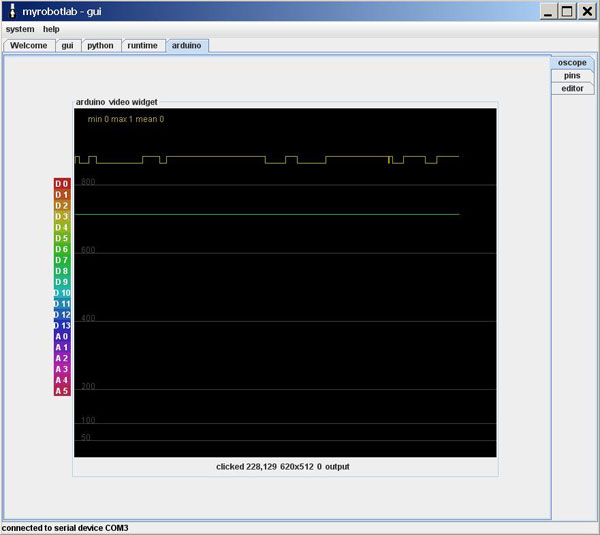

@GroG, I tried both scripts but neither worked for me :( Ale's script gives a Python error and doesn't create a valid OpenCV service. The other one only follows the sensor triggering on the Arduino oscope but doesn't supply valid triggering data. I am posting my oscope output as you requested.

Color-coded legend on the left

Just in case you didn't see my "shoutbox" messages: it looks like your signal is coming in on D3 (the yellow line, the same color as D3 in the legend on the left), and you are also tracking a flat signal on some of the green pins (D5?).

The oscope tab isn't exactly intuitive to me either, but I think what I'm describing above is correct.

Color-coded legend on the left...

Thanks, KMC, I also read your shouts. I had already realized that the yellow line on top is coming from my PIR sensor, because it triggers as I move in front of it. The green line on D3 is there because I tried setting D3 to "in" and "on" in the pins tab on the right, under the oscope tab. I did that because the triggered data on the yellow line doesn't have any effect on the MRL Python script, and the tracking service doesn't start tracking. If you take a look at the script that Aless wrote, it should check whether the PIR state is 1 or 0, but the if statement there is never executed: it cannot read publishPin and gives a msg error, so it doesn't activate face tracking. I am desperately trying to make MRL read the input and branch with a simple if statement...
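The branching the script is after can be sketched in plain Python: a handler that receives a pin number and a value, ignores other pins, and only starts or stops tracking when the PIR state actually changes. The `PirGate` class and its `on_motion`/`on_idle` callbacks are hypothetical placeholders, not MRL API names; pin 3 (D3) is only an example, and the sketch assumes the value arrives as a plain int. Wiring it to MRL's published pin data is left to the real script.

```python
class PirGate:
    """Turn face tracking on/off from PIR pin readings (illustrative sketch).

    on_motion / on_idle stand in for whatever starts and stops tracking in
    your script; they are NOT MRL API names.
    """

    def __init__(self, pir_pin, on_motion, on_idle):
        self.pir_pin = pir_pin
        self.on_motion = on_motion
        self.on_idle = on_idle
        self.state = None  # unknown until the first reading arrives

    def handle(self, pin, value):
        if pin != self.pir_pin:
            return  # ignore other pins, e.g. a flat trace on a green pin
        value = 1 if value else 0
        if value == self.state:
            return  # no change: don't restart tracking on every sample
        self.state = value
        if value == 1:
            self.on_motion()
        else:
            self.on_idle()


# Usage with fake readings: (pin, value) pairs as they might arrive.
events = []
gate = PirGate(3, lambda: events.append("start"), lambda: events.append("stop"))
for pin, value in [(3, 0), (5, 0), (3, 1), (3, 1), (3, 0)]:
    gate.handle(pin, value)
print(events)  # ['stop', 'start', 'stop']
```

Acting only on changes keeps the tracker from being restarted on every sample while the PIR output stays high.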

Video of simple face tracking...

I am adding a video I made during my tests of simple face tracking with the "facetrackingminimal" Python script. As you can see, it can successfully find and track faces, but I am still unable to integrate the PIR sensor into the system to detect human presence.

It found you!

Nice to see you! And thanks for the video post :D

What is "facetrackingminimal"? I see a Tracking.minimal.py and a facetrackinglight.py in Python/examples...

To integrate successfully, we need to know exactly what we are integrating!

Nice demo!

FaceTrackingMinimal.py script...

@GroG, the script I am using in this test is the following one: the Tracking minimal script, slightly modified to my needs...

BTW, please tell me how I can paste the program as a separate block in the post body...

You could send me the myrobotlab.log file after the error occurs with my script. That way I can see if I can help you...

myrobotlab.log file...

@Aless, yes, I could, but I didn't want to bother you; GroG was online and working on the issue. Many thanks for your help so far, you were always there to help. In the end, I see that the new script in my post is working flawlessly, and it is a modified version of your old script... You are a guru...

With some of the new features of MRL, you can now debounce signals at a configurable interval.

The signal is then allowed to change state only after the debounce time has expired.

The code is:

After the signal changes, this setting prevents it from changing again until 1 second has gone by.
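The debounce behavior described above (a change is accepted, then further changes are ignored until the interval has passed) can be sketched in plain Python. This is not the MRL call from the post; the `Debouncer` class is hypothetical, and timestamps are passed in explicitly so the logic is easy to test.

```python
class Debouncer:
    """Lockout-style debounce (illustrative sketch, not MRL's implementation).

    After an accepted state change, further changes are ignored until
    `interval` seconds have passed since that change.
    """

    def __init__(self, interval=1.0, initial=0):
        self.interval = interval
        self.state = initial
        self.last_change = None  # time of the last accepted change

    def update(self, value, now):
        """Feed one raw sample taken at time `now` (seconds); return the debounced state."""
        if value != self.state:
            if self.last_change is None or now - self.last_change >= self.interval:
                self.state = value
                self.last_change = now
        return self.state


# Usage: a noisy PIR-like signal sampled at (time, value) points.
d = Debouncer(interval=1.0, initial=0)
samples = [(0.0, 1), (0.2, 0), (0.5, 1), (1.1, 0), (1.3, 1)]
print([d.update(v, t) for t, v in samples])  # [1, 1, 1, 0, 0]
```

This is the lockout variant; another common approach instead waits until the new value has been stable for the whole interval before accepting it.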

It looks like the opposite of what I would expect, but it is working as I would expect. The funny thing is that the trace slows down; if the trace ran at a constant speed, you would see the fluctuations stretch out. Weird, but only a display issue.