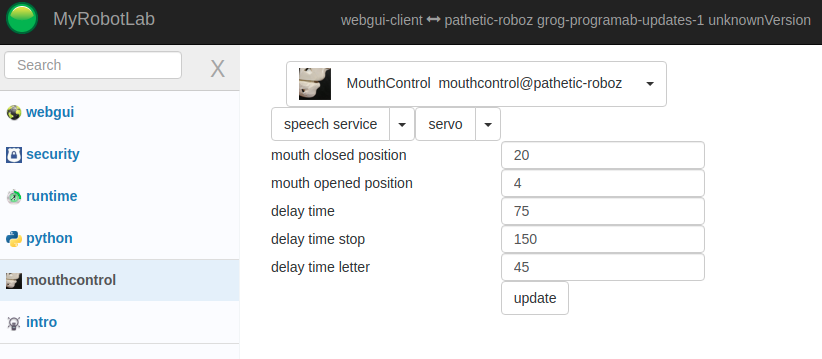

@Gael, I think something like this would be good. I'm close to finishing it, but I want https://github.com/MyRobotLab/myrobotlab/pull/921 merged first, because it makes getting the valid list of future and existing speech services and servos much cleaner.

Changes made in the UI will be saved to the config and can be loaded again if desired.

Hello Grog,

Looks perfect for its purpose!

When saving the config, will it automatically attach the jaw servo and speech engine for InMoov if i01.head is activated via the InMoov UI?

Yes...

I don't remember if the InMoov service tries to attach it all together, but the config certainly could have the capability to attach the jaw and whatever speech service was specified.

Usually, the recent problems have occurred when both InMoov and the config try to do the "right thing" and stomp over one another. In these cases, I disable the functionality in the InMoov service and enable it in the configuration, since this gives the end user more flexibility.
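To make the "stomp over one another" problem concrete, here is a minimal sketch in plain Java. It is not MyRobotLab code: `Wiring`, `startHead`, `applyConfig` and the `autoAttach` switch are hypothetical stand-ins, and the `"jaw"` / `"mouthControl"` strings are only labels. It shows that when both the service and the config make the same link, it ends up attached twice, while letting only the config own the wiring leaves a single link.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of "one owner for the wiring"; not the MyRobotLab API.
public class WiringOwnerDemo {

    /** Records every attach request so duplicate links are visible. */
    public static class Wiring {
        private final List<String> links = new ArrayList<>();

        public void attach(String from, String to) {
            links.add(from + " -> " + to);
        }

        public List<String> links() {
            return links;
        }
    }

    /** Stand-in for the service starting the head; autoAttach is a hypothetical switch. */
    public static void startHead(Wiring wiring, boolean autoAttach) {
        if (autoAttach) {
            wiring.attach("jaw", "mouthControl");
        }
    }

    /** Stand-in for a config that describes the same link. */
    public static void applyConfig(Wiring wiring) {
        wiring.attach("jaw", "mouthControl");
    }

    public static void main(String[] args) {
        // Service and config both wire the jaw: the same link is made twice.
        Wiring both = new Wiring();
        applyConfig(both);
        startHead(both, true);
        System.out.println(both.links().size()); // 2

        // Service auto-attach disabled, config owns the wiring: one link.
        Wiring configOnly = new Wiring();
        applyConfig(configOnly);
        startHead(configOnly, false);
        System.out.println(configOnly.links().size()); // 1
    }
}
```

The design choice mirrors the approach described above: rather than trying to reconcile two sources of wiring, pick one owner (the config) and switch the other off.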

In Manticore, the service used to attach the parts together in a way that worked when launching the head.

Now in Nixie, via the InMoov2 UI, launching the head does set the jaw and mouthControl, but I noticed some strange behavior when running from the configs.

For example: Try launching the InMoov2_FingerStarter config and you will notice that the mouth says "entering into the gestures topic" after "loading gestures".

That it says "loading gestures" is normal: it is spoken by the service (https://github.com/MyRobotLab/myrobotlab/blob/develop/src/main/java/org/myrobotlab/service/InMoov2.java#L789) and triggered by InMoov2.html, so that gestures get loaded by default when launching a config.

But the fact that it says "entering into the gestures topic" means he heard himself say the word "gestures" and replied to himself. This shouldn't normally happen.

I tried to avoid that by rearranging, in many different ways, which services attach to which in the configs, but I never got it all working properly.
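The self-reply described above is an echo problem: the speech recognizer is still listening while the speech synthesizer talks, so the robot's own words come back as input. A common generic remedy is to pause recognition for the duration of each utterance. The sketch below illustrates that pattern in plain Java under stated assumptions: `Ear` and `Mouth` are hypothetical stand-ins, not MyRobotLab services, and the `pause()`/`resume()` calls only play the role of "started speaking"/"finished speaking" events. Which real attach makes MyRobotLab do this is exactly the wiring question discussed in this thread.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical echo-suppression sketch; these are not MyRobotLab classes.
public class EchoGuardDemo {

    /** Stand-in for a speech recognizer that can be paused while the robot talks. */
    public static class Ear {
        private boolean listening = true;
        private final List<String> heard = new ArrayList<>();

        public void pause() { listening = false; }

        public void resume() { listening = true; }

        /** Called with every utterance the microphone picks up. */
        public void onAudio(String text) {
            if (listening) {
                heard.add(text);
            }
        }

        public List<String> heard() { return heard; }
    }

    /** Stand-in for a speech synthesizer that pauses the ear around each utterance. */
    public static class Mouth {
        private final Ear ear;

        public Mouth(Ear ear) { this.ear = ear; }

        public void speak(String text) {
            ear.pause();           // plays the role of a "started speaking" event
            try {
                ear.onAudio(text); // the microphone picks up the robot's own voice
            } finally {
                ear.resume();      // plays the role of a "finished speaking" event
            }
        }
    }

    public static void main(String[] args) {
        Ear ear = new Ear();
        Mouth mouth = new Mouth(ear);
        mouth.speak("loading gestures"); // ignored: the ear was paused
        ear.onAudio("hello");            // a person speaking afterwards is heard
        System.out.println(ear.heard()); // [hello]
    }
}
```

Without the `pause()`/`resume()` pair, `"loading gestures"` would land in `heard` and could match a "gestures" topic, which is the behavior reported above.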