Speed test with FaceDNN filter

Ahoy !

The new OpenCV service now supports enable() and disable() on each filter.

In the past it was a bit of a pain to remove all the filters and add a new set for different functionality.

If you want to switch from detecting to tracking, it can potentially be done by defining your filter pipeline once, then enabling or disabling the appropriate filters.
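A minimal Python sketch of the idea, assuming a pipeline that keeps every filter in place and simply skips the disabled ones. `Filter`, `Pipeline`, and the list-of-strings "frame" are hypothetical stand-ins, not the OpenCV service's actual classes or API.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Filter:
    """Hypothetical filter: a name, a frame transform, and an on/off flag."""
    name: str
    apply: Callable[[list], list]
    enabled: bool = True

    def enable(self) -> None:
        self.enabled = True

    def disable(self) -> None:
        self.enabled = False


@dataclass
class Pipeline:
    filters: List[Filter] = field(default_factory=list)

    def add(self, f: Filter) -> Filter:
        self.filters.append(f)
        return f

    def process(self, frame: list) -> list:
        # Disabled filters stay in the pipeline but are skipped, so nothing is rebuilt.
        for f in self.filters:
            if f.enabled:
                frame = f.apply(frame)
        return frame


pipeline = Pipeline()
detect = pipeline.add(Filter("detect", lambda frame: frame + ["detect"]))
track = pipeline.add(Filter("track", lambda frame: frame + ["track"], enabled=False))
print(pipeline.process([]))  # ['detect']
detect.disable()
track.enable()
print(pipeline.process([]))  # ['track']
```

Switching from detecting to tracking is then just two flag flips; the pipeline order is defined once.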

(in build #13 from the "new" build server)

Oooh..

kwatters' newly added FaceDNN filter detects multiple faces @ 27 fps !

Shweet ! - what could we possibly want next ?

Confidence rating ! Locally Trained ! - We want to recognize Morpheus !! :)

I see the confidence value; I'll add a list of classifications and have the display print it.
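A small sketch of what such a list of classifications could look like, assuming each detection carries a label, a confidence in [0, 1], and a pixel bounding box. `Classification`, `confident`, `display_lines`, and the 0.5 threshold are hypothetical, not the filter's actual fields.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Classification:
    label: str          # e.g. "face", or a recognized person's name
    confidence: float   # detector score, assumed to be in [0, 1]
    x: int              # bounding box top-left corner and size, in pixels
    y: int
    width: int
    height: int


def confident(results: List[Classification], threshold: float = 0.5) -> List[Classification]:
    """Keep detections at or above the threshold, most confident first."""
    kept = [c for c in results if c.confidence >= threshold]
    return sorted(kept, key=lambda c: c.confidence, reverse=True)


def display_lines(results: List[Classification]) -> List[str]:
    """Text a display overlay could print next to each bounding box."""
    return [f"{c.label} {c.confidence:.2f} @ ({c.x},{c.y})" for c in results]
```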

Mats took the Kinect depth grabbing out of the "grabbing" area and put it in a filter.

I'm not sure why, but I was having contention with it because it used a "new" frame grabber, while I think it should use the one that is currently providing the video frame.

I changed it to do so and did some more refactoring, then gave this filter a little GUI with a checkbox that switches it from video to depth.
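A sketch of the design choice, assuming the grabber already in use delivers video and depth together in one frame object, so the filter only picks which image to pass on instead of opening a second grabber. `KinectFrame`, `KinectDepthFilter`, and `show_depth` are hypothetical names; the actual filter's structure isn't shown in this post.

```python
from dataclasses import dataclass
from typing import List

Image = List[List[int]]


@dataclass
class KinectFrame:
    """Hypothetical: one grab from the device already feeding the pipeline."""
    video: Image
    depth: Image


class KinectDepthFilter:
    """Reuses the current frame; no second frame grabber is ever opened."""

    def __init__(self) -> None:
        self.show_depth = False  # bound to the GUI checkbox in this sketch

    def set_show_depth(self, checked: bool) -> None:
        self.show_depth = checked

    def process(self, frame: KinectFrame) -> Image:
        # Only selects which image of the shared frame continues down the pipeline.
        return frame.depth if self.show_depth else frame.video
```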

Added color as the default for the Kinect depth filter. Grayscale loses information, since it is currently scaled into a single 1-byte channel, while color has three 1-byte channels, and the Kinect depth is a single 16-bit channel.

more checkboxes too...

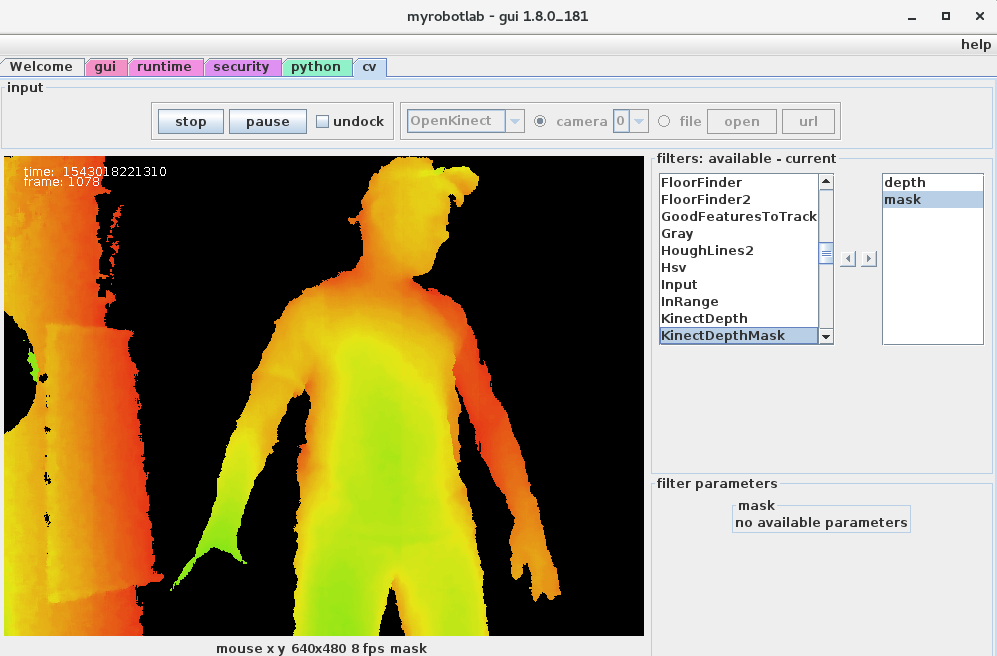
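To show why the single byte loses information: 65536 possible 16-bit depth values collapse into 256 gray levels, so nearby depths become indistinguishable, while three bytes have room for all 16 bits. The high-byte/low-byte packing below is just one illustrative lossless scheme, not necessarily how the filter maps depth to color (it may well use a color map instead).

```python
from typing import Tuple

MAX_DEPTH = 0xFFFF  # largest value a 16-bit depth sample can hold


def depth_to_gray(d: int) -> int:
    """Scale 16-bit depth into one 8-bit channel: 65536 levels collapse into 256."""
    return d * 255 // MAX_DEPTH


def depth_to_rgb(d: int) -> Tuple[int, int, int]:
    """Illustrative lossless packing: high byte in red, low byte in green, blue unused."""
    return (d >> 8) & 0xFF, d & 0xFF, 0


def rgb_to_depth(rgb: Tuple[int, int, int]) -> int:
    r, g, _ = rgb
    return (r << 8) | g
```

For example, depths 6000 and 6100 map to the same gray level, but both survive the round trip through `depth_to_rgb`.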

First stage of depth mask filter - worky :)

Well, the first "stage" of the Kinect depth mask filter is worky. This is with:

min depth = 6000

max depth = 20000

I'm not sure of the exact meaning of the values; I need to search the interweb for a conversion to cm.
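A minimal sketch of the mask itself, assuming an inclusive range check on the raw depth values (still in the Kinect's own units, since no cm conversion is known here). OpenCV's `inRange` performs the same inclusive check; this pure-Python version only shows the logic.

```python
from typing import List


def depth_mask(depth: List[List[int]], min_depth: int = 6000,
               max_depth: int = 20000) -> List[List[int]]:
    """8-bit binary mask: 255 where min_depth <= value <= max_depth, else 0."""
    return [[255 if min_depth <= v <= max_depth else 0 for v in row] for row in depth]
```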

Now letting the depth color image run through the mask.

Next will be to send the video (plus an offset) through the mask.
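A sketch of applying the mask to another image with an offset, assuming the offset is a constant pixel shift (dx, dy) between the video and depth images. That is a simplification: real RGB-to-depth registration on a Kinect is not a pure translation. `apply_mask` is a hypothetical helper.

```python
from typing import List

Image = List[List[int]]


def apply_mask(image: Image, mask: Image, dx: int = 0, dy: int = 0) -> Image:
    """Zero each pixel whose mask pixel, shifted by (dx, dy), is 0 or lies outside the mask."""
    h, w = len(mask), len(mask[0])
    out = []
    for y, row in enumerate(image):
        out_row = []
        for x, value in enumerate(row):
            my, mx = y + dy, x + dx  # where this image pixel lands in the mask
            inside = 0 <= my < h and 0 <= mx < w
            out_row.append(value if inside and mask[my][mx] else 0)
        out.append(out_row)
    return out
```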

My idea is to reduce the data significantly, then run a Hough line filter, which should find the lines.

Then I can find the Z depth of the lines and construct a 3D set of lines, from which I hope to create a 3D bubble/mesh, and eventually publish the data to JMonkey for an internal "virtual" world representation.
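A sketch of "finding the Z depth of the lines", assuming segments in the (x1, y1, x2, y2) form that OpenCV's `HoughLinesP` returns, and simply looking up the depth at each endpoint. The valid range reuses the mask limits above as an assumption; results stay in pixel and raw-depth units. `segment_to_3d` is hypothetical.

```python
from typing import List, Optional, Tuple

Point3 = Tuple[int, int, int]


def segment_to_3d(segment: Tuple[int, int, int, int], depth: List[List[int]],
                  min_depth: int = 6000, max_depth: int = 20000
                  ) -> Optional[Tuple[Point3, Point3]]:
    """Attach raw depth to both endpoints of a 2D segment (x1, y1, x2, y2).

    Returns None when either endpoint has no valid depth reading.
    """
    x1, y1, x2, y2 = segment
    z1, z2 = depth[y1][x1], depth[y2][x2]  # depth images are indexed [row][column]
    if not (min_depth <= z1 <= max_depth and min_depth <= z2 <= max_depth):
        return None
    return (x1, y1, z1), (x2, y2, z2)
```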

The OpenCV filter should open up a lot of new, imaginative ways to do what you want.

What I did last year for IntegratedMovement was to convert the depth map into a kind of point cloud that fits into the coordinate system of the robot (x, y, z).
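IntegratedMovement's own conversion isn't shown here, so this is only a common way to do it: pinhole back-projection with camera intrinsics (fx, fy, cx, cy), followed by a rigid transform (R, t) giving the camera's pose in the robot frame. The intrinsics, the pose, and the depth units are all assumptions the caller must supply.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def pixel_to_camera(u: int, v: int, z: float,
                    fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Pinhole back-projection of pixel (u, v) with depth z into camera coordinates."""
    return ((u - cx) * z / fx, (v - cy) * z / fy, float(z))


def camera_to_robot(p: Vec3, rotation: Sequence[Sequence[float]], translation: Vec3) -> Vec3:
    """Rigid transform p_robot = R * p_camera + t, with (R, t) the camera pose in the robot frame."""
    return tuple(sum(rotation[i][j] * p[j] for j in range(3)) + translation[i] for i in range(3))


def depth_to_cloud(depth: List[List[float]], fx: float, fy: float, cx: float, cy: float,
                   rotation: Sequence[Sequence[float]], translation: Vec3,
                   min_z: float = 0.0, max_z: float = float("inf")) -> List[Vec3]:
    """Convert every valid depth pixel into an (x, y, z) point in robot coordinates."""
    cloud = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if min_z < z <= max_z:  # skip missing (0) or out-of-range readings
                cloud.append(camera_to_robot(pixel_to_camera(u, v, z, fx, fy, cx, cy),
                                             rotation, translation))
    return cloud
```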

The points are then grouped (agglomerated) together into individual objects (for example, 3 boxes stacked one on another can be seen as 3 different objects).
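A minimal grouping sketch using plain Euclidean region growing (single linkage): points closer than `radius` end up in the same group. This is a swapped-in technique, not necessarily what IntegratedMovement does; in particular, a distance threshold alone would merge stacked boxes that touch, so separating them needs something more.

```python
from collections import deque
from math import dist
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def cluster_points(points: Sequence[Point], radius: float) -> List[List[Point]]:
    """Group points so that any two points closer than `radius` share a cluster.

    O(n^2) region growing: fine for a sketch, too slow for full Kinect frames.
    """
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        members = [seed]
        while queue:
            i = queue.popleft()
            near = [j for j in unvisited if dist(points[i], points[j]) < radius]
            for j in near:
                unvisited.remove(j)
                queue.append(j)
                members.append(j)
        clusters.append([points[k] for k in sorted(members)])
    return clusters
```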

My idea was to use those groups of points to make meshes, but I did not get that far, mostly because of the computing time. Instead, I tried to describe each group of points with a basic geometric shape that fits most of the points. So far it sees everything as cylinders, which is not great for describing everything, but it worked well for the stage I was at.
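A simple sketch of describing one group of points as a cylinder, assuming a vertical axis (z up in the robot frame): the axis sits at the x/y centroid, the radius encloses every point horizontally, and the height spans the z range. The post says the real fit covers "most" of the points, which suggests outlier handling this bounding version doesn't have.

```python
from dataclasses import dataclass
from math import hypot
from typing import Sequence, Tuple


@dataclass
class Cylinder:
    cx: float      # axis position in the robot's x/y plane
    cy: float
    radius: float
    z_min: float   # bottom and top along the (assumed vertical) axis
    z_max: float


def bounding_cylinder(points: Sequence[Tuple[float, float, float]]) -> Cylinder:
    """Vertical cylinder centered on the x/y centroid that encloses every point."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    zs = [p[2] for p in points]
    cx, cy = sum(xs) / len(xs), sum(ys) / len(ys)
    radius = max(hypot(x - cx, y - cy) for x, y in zip(xs, ys))
    return Cylinder(cx, cy, radius, min(zs), max(zs))
```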

Hi Calamity,

Is what you worked on currently worky ?

Do you have a demo or visualization ?

Yes, it's still worky, just test it.

I think the best demo is to start the IntegratedMovement service's main function with an Arduino (change the port) and a Kinect plugged in, then use the IK tab in the Swing GUI so you can play with the virtual InMoov and see what the Kinect sees (as a point cloud in JMonkey).

You can then select an arm and have the virtual InMoov move it to a selected object.

Here you can see me holding my cellphone in front of the Kinect while clicking my mouse, and on the left the object that the Kinect sees (in the form of cylinders).

Forget about the blue thing, it's a virtual beer... no real beer was used in this testing.

That's awesome calamity !

I think I might have bork'd this in the JMonkey updates, but I'd like to see your progress continue.

I appreciate your comment at the top of this service:

"IntegratedMovement - This class provides a 3D based inverse kinematics implementation that allows you to specify the robot arm geometry based on DHParameters. The work is based on InversedKinematics3D by kwatters with different computation and goal, including collision detection and moveToObject"

How is it different in computation & goal ?

I see there are no direct references to InMoov in it, which is very good, except for one indirect one. I see you made an interface, which is good, but the JMonkeyEngine part itself was very specific to InMoov. I've begun to change this so that it's loaded from a JSON file and potentially won't need recompilation to simulate different models.

What is your vision regarding what seems to be 3+ IK services (IK, IK3D, IntegratedMovement)?

What branch/version are you using? Make sure you save it ;) 'cuz the develop branch will need a bit of help...

The goal of IntegratedMovement is not only IK; it's about having a system that allows a robot (not just InMoov) to move and interact with its environment autonomously, in a way that looks 'natural'.

It uses IK to know where its parts are and to position itself.

It uses the Kinect (via OpenNI) to scan its surroundings and make a map of them (Map3D module).

It uses a collision detection module to avoid objects in its surroundings, or to reach for them (CollisionDetection module).

It uses some body physics to try to keep the center of gravity near its center. If an arm reaches far away, it compensates for the movement by also moving other body parts (GravityCenter module).
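The GravityCenter module's code isn't shown, so this is only the standard formula it would build on: the combined center of gravity is the mass-weighted average of each part's own center of mass. The part masses and positions, and the idea of measuring drift from a support point in the ground plane, are illustrative assumptions.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def center_of_gravity(parts: Sequence[Tuple[float, Vec3]]) -> Vec3:
    """sum(m_i * p_i) / sum(m_i) over (mass, center-of-mass) pairs."""
    total = sum(m for m, _ in parts)
    return tuple(sum(m * p[i] for m, p in parts) / total for i in range(3))


def horizontal_offset(cog: Vec3, support_center: Tuple[float, float]) -> float:
    """Distance in the ground (x, y) plane between the CoG and the support center."""
    return ((cog[0] - support_center[0]) ** 2 + (cog[1] - support_center[1]) ** 2) ** 0.5
```

An arm reaching out along +x drags the CoG toward +x; compensating means moving other parts until `horizontal_offset` shrinks again.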

So the IntegratedMovement service is like the manager of all those modules. It could support multiple DHRobotArms at the same time (acting together) with the other modules I made for IntegratedMovement. Maybe I could write those modules as separate services, and maybe I will in the future, but so far they are so intimately tied together and have so little use standalone that I think it's fine the way I have done it.

About the differences with the other IK services: I don't remember much about the IK service, so I will leave that one out and only compare with IK3D.

The IKEngine (used by IK3D) and the IMEngine (used by IntegratedMovement) are really similar. IMEngine was branched from IKEngine and I made only a few modifications.

IKEngine uses the Jacobian pseudo-inverse method to compute the IK. That method gives awesome results for small movements, for following an object (tracking), or for moving the arm with a joystick. But I did not like the way it moves when a large movement is requested (i.e. moving the arm from bottom to overhead gives a kind of wavy motion that I did not like). So I added an alternate computation method that kicks in when the pseudo-inverse method does not solve the IK problem quickly. The alternate method gives more random results, so it's no good for small movements, but for large movements I feel it gives a more natural motion than the pseudo-inverse method.
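The IKEngine/IMEngine code isn't reproduced here, so this is only an illustration of the two strategies on a toy planar arm. The 3-joint arm and its link lengths are hypothetical, and the adaptive random search standing in for the "alternate method" is an assumption: the post only says that method gives more random results. The pseudo-inverse step is the textbook Δθ = Jᵀ(JJᵀ)⁻¹e.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

LINKS = (1.0, 1.0, 0.5)  # hypothetical link lengths of a planar 3-joint arm


def forward(angles: Sequence[float]) -> Tuple[float, float]:
    """End-effector (x, y); each joint angle is relative to the previous link."""
    x = y = phi = 0.0
    for length, a in zip(LINKS, angles):
        phi += a
        x += length * math.cos(phi)
        y += length * math.sin(phi)
    return x, y


def jacobian(angles: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Rows of the 2x3 Jacobian: dx/dtheta_i and dy/dtheta_i."""
    phis, phi = [], 0.0
    for a in angles:
        phi += a
        phis.append(phi)
    n = len(phis)
    jx = [-sum(LINKS[k] * math.sin(phis[k]) for k in range(i, n)) for i in range(n)]
    jy = [sum(LINKS[k] * math.cos(phis[k]) for k in range(i, n)) for i in range(n)]
    return jx, jy


def pseudo_inverse_ik(target, angles, iterations=100, tol=1e-4, max_step=0.2):
    """Iterate dtheta = J^T (J J^T)^-1 e, with e the (clamped) position error."""
    angles = list(angles)
    for _ in range(iterations):
        x, y = forward(angles)
        ex, ey = target[0] - x, target[1] - y
        err = math.hypot(ex, ey)
        if err < tol:
            return angles
        if err > max_step:  # the Jacobian is only a local linearization: keep steps small
            ex, ey = ex * max_step / err, ey * max_step / err
        jx, jy = jacobian(angles)
        a = sum(u * u for u in jx)
        b = sum(u * v for u, v in zip(jx, jy))
        c = sum(v * v for v in jy)
        det = a * c - b * b
        if abs(det) < 1e-9:  # singular pose, e.g. arm fully stretched
            return None
        w0, w1 = (c * ex - b * ey) / det, (a * ey - b * ex) / det
        angles = [t + u * w0 + v * w1 for t, u, v in zip(angles, jx, jy)]
    return None


def random_search_ik(target, angles, tries=5000, tol=1e-3, seed=0) -> Optional[List[float]]:
    """Stand-in alternate method: (1+1) random search with an adaptive step size."""
    rng = random.Random(seed)
    best = list(angles)
    best_err = math.dist(forward(best), target)
    step = 0.5
    for _ in range(tries):
        if best_err < tol:
            break
        candidate = [t + rng.gauss(0.0, step) for t in best]
        err = math.dist(forward(candidate), target)
        if err < best_err:
            best, best_err = candidate, err
            step = min(step * 1.5, 1.0)   # success: explore a bit wider
        else:
            step = max(step * 0.9, 1e-4)  # failure: search closer to the current best
    return best if best_err < tol else None


def solve(target, angles, quick_iterations=20):
    """Pseudo-inverse first; fall back to random search if it doesn't converge quickly."""
    result = pseudo_inverse_ik(target, angles, iterations=quick_iterations)
    return result if result is not None else random_search_ik(target, angles)
```

Starting from the fully stretched pose, the pseudo-inverse hits a singularity immediately, which is exactly the kind of case where a fallback is needed.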

The second difference is that I added a way for the IMEngine to get feedback from the Servo service. I know servos don't give feedback, but knowing where a servo has been asked to move and at what speed (velocity) IS a kind of feedback. That makes it possible for the IMEngine to adapt if a body part was moved by something other than itself, while IK3D needs to be reset to do that.
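A sketch of that open-loop "feedback", assuming the servo moves from where it was toward its commanded target at a constant speed. `ServoCommand` and its fields (degrees, degrees per second, seconds) are hypothetical, as is treating speed 0 as an instant move; this is not the Servo service's actual API.

```python
import math
from dataclasses import dataclass


@dataclass
class ServoCommand:
    start_pos: float   # degrees, where the servo was when the command was sent
    target_pos: float  # degrees, where it was asked to go
    speed: float       # degrees per second, assumed constant
    sent_at: float     # seconds


def estimated_position(cmd: ServoCommand, now: float) -> float:
    """Move from start toward target at `speed`, stopping once the target is reached."""
    if cmd.speed <= 0:
        return cmd.target_pos  # this sketch treats "no speed set" as an instant move
    travelled = cmd.speed * max(0.0, now - cmd.sent_at)
    remaining = cmd.target_pos - cmd.start_pos
    if travelled >= abs(remaining):
        return cmd.target_pos
    return cmd.start_pos + math.copysign(travelled, remaining)
```

An IK engine could seed each solve from these estimates instead of from its own last solution, which is what lets it follow a part that something else moved.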

Well, I think that sums up (lol) the goal and the differences with the other IK services.

I don't think I've made any changes since the Manticore release, so the code should be safe. It's fully working right now on the develop branch, but I expect some changes will be needed in the future.

And about the JMonkey app: it currently uses a different, more generic app than the vinMoov app (at least it did when I wrote it). I'm sure you have improved the vinMoov app a lot.

Wow .

Thanks for the explanation, Calamity. I'm always really impressed with your work. I'm finally starting to play with some of these concepts, and now I feel I am on the fringe trying to get information.

I still have lots of work to do on JMonkeyEngine, but I'm sure I'll be asking you a lot of questions in the near future.

I'm interested in mesh simplification, so that the gazillion-point cloud you currently have in JMonkey becomes larger polygons, perhaps identifying the beer in less time ;)
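Proper mesh simplification (e.g. collapsing edges of a triangle mesh) is more involved; a much simpler first step for shrinking a point cloud is voxel-grid downsampling, sketched here as a swapped-in technique: every point inside the same cubic cell is replaced by the cell's centroid. The voxel size is a free parameter you would tune.

```python
from collections import defaultdict
from math import floor
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: Sequence[Point], voxel: float) -> List[Point]:
    """Replace all points in the same cube of side `voxel` by their centroid."""
    cells: Dict[Tuple[int, int, int], List[Point]] = defaultdict(list)
    for p in points:
        key = (floor(p[0] / voxel), floor(p[1] / voxel), floor(p[2] / voxel))
        cells[key].append(p)
    # dicts keep insertion order, so cells come out in the order they were first seen
    return [
        tuple(sum(c[i] for c in members) / len(members) for i in range(3))
        for members in cells.values()
    ]
```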

Always nice to see what you're doing.

I think that mesh simplification is the way to go. The OpenCV filter should help a lot with that. I wish I'd had those tools when I was working on this.

Let me know if you need any info on how to use the depth map to get the points as coordinate values; I think that will still be useful.

Now you can sample points with the mouse. I've identified the "unknown" value of 65287. It's all the area in green. The green band on the right is an artifact of the camera's offset from the projector.

WOOHOO ! 30 fps !

Using the parallelizing feature in javacv. I'm very happy with this, because it shows that full sampling of all points in a Kinect frame can be done fast enough to maintain 30 fps. :)
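The javacv parallel helper itself isn't reproduced here; this sketch only shows the data-parallel pattern behind it: split the frame into row bands, process each band independently, then combine the partial results. It skips the 65287 "unknown" value identified above. Note that in CPython, threads won't actually speed up this pure-Python loop because of the GIL; the structure is the point, not the timing.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def sample_rows(depth: List[List[int]], start: int, end: int, unknown: int) -> Tuple[int, int]:
    """Count valid samples and sum their depth for rows [start, end)."""
    count = total = 0
    for row in depth[start:end]:
        for v in row:
            if v != unknown:
                count += 1
                total += v
    return count, total


def parallel_mean_depth(depth: List[List[int]], workers: int = 4, unknown: int = 65287) -> float:
    """Mean of all valid depth samples, computed over row bands in parallel."""
    if not depth:
        return 0.0
    rows = len(depth)
    chunk = (rows + workers - 1) // workers  # ceiling division so every row is covered
    ranges = [(s, min(s + chunk, rows)) for s in range(0, rows, chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = list(pool.map(lambda r: sample_rows(depth, r[0], r[1], unknown), ranges))
    count = sum(c for c, _ in parts)
    total = sum(t for _, t in parts)
    return total / count if count else 0.0
```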

It seems likely that Canny could help in a reduced-mesh filter with Kinect data...