Pioneers are the guys with the arrows in their backs. GroG can probably tell you all about that.

If you count the time I drew a face on a box and taped it to a toy car, I've been building robots for 45 years. Problem was, for most of that time, the electronics and mechanical components were expensive and didn't do all that much. Also, finding kindred spirits was difficult. I capered like a drunk monk the day I got the mutant serial port of a Vic 20 to control the speed of a toy motor. REAL computer control of a motor! All I needed was another Vic 20 for differential control!

Arduinos and motor controller shields sure have made motion easy. It's nothing to plug a servo and some sort of ultrasonic or infrared sensor into an Arduino, add a few lines of code and have them working together.

But we were doing that in the 80s. Parallax and Basic Stamps have been around for a long time. And once upon a time, Polaroid had a nice ultrasonic development kit that went into a few robots. Stuff cost more, and was (arguably) harder to use, but it was there. When you come down to it--for the longest time the average hobby robot wasn't doing much more than hobby robots had been doing since the 80s.

Then, some really cool things happened in the field of robot vision. Hobbyists noticed OpenCV and figured out how to use it. And Lady Ada risked the wrath of God and put up a bounty for the secrets of Kinect.

For the first time, hobby robots could SEE. REALLY see. And understand what they saw.

When I started looking into the Microsoft Kinect, I found a similar device called the Xtion Pro Live: smaller, lighter, simpler power requirements--and that's where I decided to put my money. But when I finally got one, I quickly figured out that I had no idea what to do with it. I finally found SimpleOpenNI, and after a little work--my computer (and therefore, a computer controlled robot) could see! Recognize human figures. Sense depth visually. Who needed ultrasonic sensors?

But. . .It still wasn't exactly easy. You had to be pretty good with C code. Well, SimpleOpenNI wrapped OpenNI in Processing, but--Hey wait a minute--What's this MyRobotLab stuff?

Through MyRobotLab, GroG makes OpenCV and OpenNI as easy as clicking buttons. Well, ok there's a little more to it than that. You have to do some Jython (Python) coding to get anything interactive happening. And, like much of the rest of MyRobotLab, it's still a work in progress. But progress was being made.

Uh. . .well, it worked if you had a Kinect. And it worked best under Windows. Java runs on Linux and Windows, so it SHOULD work under Linux.

Heh. Devil is in the details.

I had GroG's interest. But GroG didn't have an Xtion, and Ubuntu is sometimes trickier than Windows. And GroG is only one man. With a family. And a real job. And there are other components of MyRobotLab that need attention. Not everyone has a Kinect (and fewer still have an Xtion). And OpenCV worked--until the last video driver update. (Thanks Mark!) Oh I know GroG will get back to it. But. . .*sigh* after all this--I STILL had a blind robot.

What to do. . .

Well. . .SimpleOpenNI runs under Processing--And it WORKS. And Processing runs under Java--Just like MyRobotLab. So, OpenNI should work, right?

Heh. Pesky details.

But, with a little fumbling and experimenting, I managed to install a missing freeglut3 file, and get the OpenNI examples to run through the command line. Then, when I compared library files between OpenNI and MyRobotLab I noticed they were different. Hmm. . .how different? Copy, paste and YES dammit--replace.

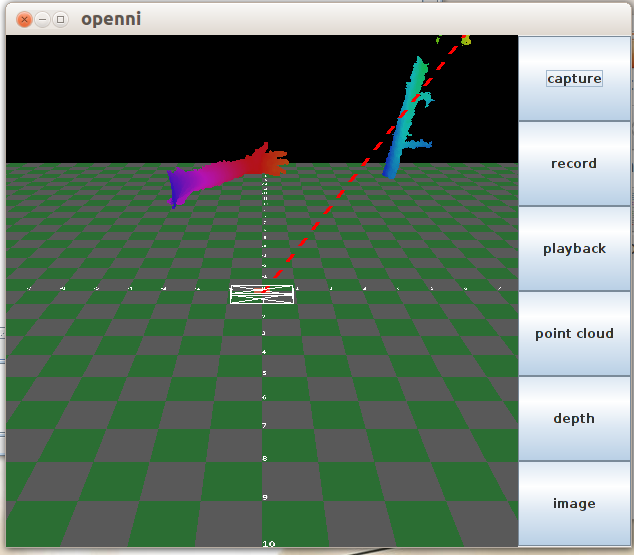

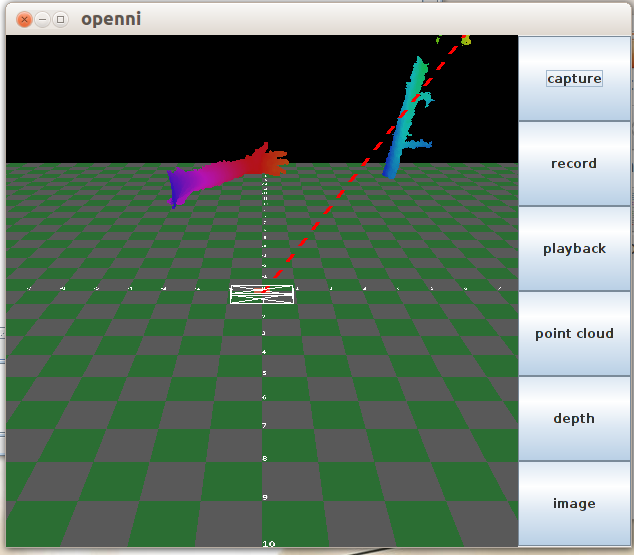

This is what I got with the capture button.

That's my arm. In MyRobotLab. My robot can see my arm in MyRobotLab. MY ROBOT CAN SEE MY ARM IN MYROBOTLAB!

Uh. Sorry about that. Caps lock got stuck.

It's NOT a solution. This is only one OpenNI function working, and it wasn't in real time. I couldn't see the image until I hit "stop capture."

But it's progress. Up until a few hours ago, I didn't know if MyRobotLab would EVER talk to MyXtionProLive. Now I have an arm pointing the way.

Here are the exact steps I took to do it. NOTE--These instructions are specific to 32 bit Ubuntu 12.04, and an Asus Xtion Pro Live. You shouldn't have to do any of this for Windows and a Kinect, and. . .something different if you have Linux and a Kinect. Maybe it's already working for you.

Download the unstable (most recent) OpenNI, middleware (NITE) and hardware binaries from

http://openni.org/Downloads/OpenNIModules.aspx

Unzip each module into a folder. (They should unzip into separate folders.)

Navigate to each module's folder and, in a terminal, type "sudo ./install.sh" (no quotes). The installers should run.

In a terminal, cd to the /OpenNI-Bin-Dev-(etc)/Samples/Bin/x##-Release folder (the exact path will depend on where you unzipped, and on which version and platform, i.e. 64 or 32 bit, you downloaded).
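If you've lost track of where the samples ended up, a few lines of Python can hunt for the folder. This is only a sketch: `find_release_dirs` is a made-up helper name, and the glob pattern just mirrors the path layout described above, so adjust it if your download unpacked differently.

```python
from pathlib import Path


def find_release_dirs(root):
    """Return OpenNI sample Release folders found directly under root.

    The pattern is an assumption based on the folder layout described in
    this post (OpenNI-Bin-Dev-.../Samples/Bin/x##-Release).
    """
    pattern = "OpenNI-Bin-Dev-*/Samples/Bin/x*-Release"
    return sorted(p for p in Path(root).glob(pattern) if p.is_dir())


if __name__ == "__main__":
    # Look in the home directory; pass a different folder if you unzipped elsewhere.
    for path in find_release_dirs(Path.home()):
        print(path)
```

Whatever it prints is the folder to cd into.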

Once you are in the Release folder, type ./NiViewer. You should see a splitscreen of a relatively normal image, alongside a depth image.

I didn't get that. I got an error message. "error while loading shared libraries: libglut.so.3: cannot open shared object file: No such file or directory" I solved that by installing freeglut3 from the Ubuntu Software Manager.
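A quick way to check for this kind of missing library before (or after) installing anything is to ask Python whether it can locate it. The sketch below uses `ctypes.util.find_library` from the standard library; `missing_libraries` is a hypothetical helper name, and `"glut"` is the short name that corresponds to `libglut.so.*`. Treat it as a rough check only, since the lookup Python does is not guaranteed to match what the program loader does at run time.

```python
import ctypes.util


def missing_libraries(names):
    """Return the short library names that could not be located."""
    return [name for name in names if ctypes.util.find_library(name) is None]


if __name__ == "__main__":
    # "glut" stands for libglut.so.*, the library NiViewer complained about.
    missing = missing_libraries(["glut"])
    if missing:
        print("Not found:", ", ".join(missing))
    else:
        print("All libraries found.")
```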

If you can see the image as described--you've got OpenNI properly installed. Now to get it to work with MyRobotLab.

Run MyRobotLab and install the OpenNI service. Close MyRobotLab after it restarts.

Copy all the files from /OpenNI-Bin-Dev-###/lib, and paste them into your MyRobotLab's lib folder. (If it's a bleeding edge download, it will be "intermediate.#####/lib.") Once you paste the files, you'll be informed that they already exist and asked if you really want to replace them. Yes you do. (dammit)
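If overwriting files by hand makes you nervous, the same copy can be scripted so the old files are kept somewhere safe. This is a cautious variation on the step above, not exactly what I did: it skips files that are already identical and backs up anything it replaces. `replace_libs` and its three folder arguments are hypothetical names; point them at your own OpenNI lib folder, MyRobotLab lib folder, and a backup folder.

```python
import filecmp
import shutil
from pathlib import Path


def replace_libs(src_dir, dst_dir, backup_dir):
    """Copy every file in src_dir into dst_dir, backing up files it overwrites.

    Returns the names of the files that were new or differed from the
    copy already in dst_dir.
    """
    src, dst, backup = Path(src_dir), Path(dst_dir), Path(backup_dir)
    backup.mkdir(parents=True, exist_ok=True)
    changed = []
    for item in sorted(src.iterdir()):
        if not item.is_file():
            continue  # this sketch ignores subfolders
        target = dst / item.name
        if target.exists():
            # Compare contents, not just size and timestamp.
            if filecmp.cmp(item, target, shallow=False):
                continue  # already identical, nothing to do
            shutil.copy2(target, backup / item.name)  # keep the old version
        shutil.copy2(item, target)
        changed.append(item.name)
    return changed
```

The returned list answers the "how different?" question, and if MyRobotLab misbehaves afterwards, copying the backup folder's contents back into lib undoes the change.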

Start MyRobotLab, start the OpenNI service. You'll probably have to detach the service to see anything--which should be a green and blue checkerboard.

Position your Xtion sensor at a stationary target, and click capture. Then click stop capture.

If all goes well, you should see. . .some depth specific interpretation of your target. If you see anything else, click help, and about, and shoot GroG some no-worky love.

Bravo !

You've done it ! I see your arm and can't wait till Houston shakes it !

Thanks for putting all the pieces together and all the inspirational documentation !

Got to go back to the salt mines, so this will be brief. Can you attach or post the results from the following command ?

I'd like to see what drivers it's using. Also, could you post a screen-cap from NiViewer? What does it do in Processing (perhaps another screen-cap)? I'd like to see how close the views are (MRL vs NiViewer vs Processing)

Now, about riding faster into the future (and avoiding those arrows) - The 3D data is a precursor to SLAM - but SLAM needs the additional information of heading & relative position. Is that your priority now? Or are you more interested in the gesture recognition?

YeeeHaaa !

Here's lsmod. . .

    Module                    Size  Used by
    nls_utf8                 12493  1
    udf                      84576  1
    crc_itu_t                12627  1 udf
    vesafb                   13516  1
    rfcomm                   38139  0
    parport_pc               32114  0
    ppdev                    12849  0
    bnep                     17830  2
    bluetooth               158438  10 rfcomm,bnep
    snd_hda_codec_hdmi       31775  4
    nvidia                10257747  40
    mxm_wmi                  12859  0
    snd_hda_codec_realtek   174313  1
    snd_hda_intel            32765  5
    snd_hda_codec           109562  3 snd_hda_codec_hdmi,snd_hda_codec_realtek,snd_hda_intel
    snd_hwdep                13276  1 snd_hda_codec
    ftdi_sio                 35859  0
    snd_pcm                  80845  3 snd_hda_codec_hdmi,snd_hda_intel,snd_hda_codec
    snd_seq_midi             13132  0
    snd_rawmidi              25424  1 snd_seq_midi
    snd_seq_midi_event       14475  1 snd_seq_midi
    usbserial                37173  1 ftdi_sio
    asus_atk0110             17742  0
    wmi                      18744  1 mxm_wmi
    snd_seq                  51567  2 snd_seq_midi,snd_seq_midi_event
    snd_timer                28931  2 snd_pcm,snd_seq
    snd_seq_device           14172  3 snd_seq_midi,snd_rawmidi,snd_seq
    snd                      62064  20 snd_hda_codec_hdmi,snd_hda_codec_realtek,snd_hda_intel,snd_hda_codec,snd_hwdep,snd_pcm,snd_rawmidi,snd_seq,snd_timer,snd_seq_device
    mac_hid                  13077  0
    usbhid                   41906  0
    hid                      77367  1 usbhid
    i7core_edac              23382  0
    soundcore                14635  1 snd
    snd_page_alloc           14108  2 snd_hda_intel,snd_pcm
    edac_core                46858  1 i7core_edac
    psmouse                  86486  0
    serio_raw                13027  0
    lp                       17455  0
    parport                  40930  3 parport_pc,ppdev,lp
    uas                      17828  0
    usb_storage              39646  0
    r8169                    56321  0
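A listing like this is easier to dig through once it's in a data structure. The sketch below is one way to do that, assuming the usual `lsmod` column layout of module name, size, use count, and an optional comma-separated list of users; `parse_lsmod` is a hypothetical helper, and the sample text is a few lines lifted from the listing above.

```python
def parse_lsmod(text):
    """Parse lsmod output into {module: (size, use_count, [users])}."""
    modules = {}
    for line in text.splitlines():
        fields = line.split()
        # Skip blank lines and the "Module Size Used by" header.
        if len(fields) < 3 or fields[0] == "Module":
            continue
        name, size, used = fields[0], int(fields[1]), int(fields[2])
        users = fields[3].split(",") if len(fields) > 3 else []
        modules[name] = (size, used, users)
    return modules


sample = """Module Size Used by
udf 84576 1
crc_itu_t 12627 1 udf
usbserial 37173 1 ftdi_sio
ftdi_sio 35859 0
"""

mods = parse_lsmod(sample)
print(mods["crc_itu_t"])  # (12627, 1, ['udf'])
```

From there it's a one-liner to list, say, every module whose name starts with `usb`.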

Great Thanks !

Now what's more important, SLAM or gesture recognition?

Decisions decisions. . .

SLAM, or Simultaneous Localization and Mapping, is a very cool thing. With this ability, after a robot has made its way around its environment, it has a map and it's aware of permanent obstacles. You can tell it to go to a specific location on its map, watch for changes in its environment, or patrol a series of waypoints.

That being said. . .

I assume that gesture recognition would likely involve skeleton tracking. The thing I like about Kinect skeletons is that a skeleton is a simple form that is easily recognized as HUMAN. I think that it's a very good thing for a robot to have the ability to quickly pick out people in a room. Not to mention, poses and arm pointing are easy ways to give commands to a robot. That's why I prefer gesture recognition over SLAM, for now.

But, of COURSE MyRobotLab is an evolving project. I figure someday, we'll get SLAM in there too.

More screenshots

This is a screenshot of NiViewer run from a command line right out of the OpenNI samples directory.

Yeah that's me with the mad scientist hair.

The original capture was a full screen 1920 x 1080. I quartered it for this post. I've also seen where the two side by side images fill the whole screen without the black void underneath. I'm not sure how that happened.

THIS one is from SimpleOpenNI. Specifically, the DepthImage example.

I uploaded it full size and ended up shrinking it for the post. I don't remember the dimensions of the window it runs in, but it doesn't take up the full screen like the NiViewer sample program.

SimpleOpenNI is a Processing wrapper for OpenNI. Basically, after installing OpenNI, you install Processing, and drop the SimpleOpenNI folder into the libraries folder of your Processing sketchbook. There are versions for Windows, Mac and Linux.

SimpleOpenNI seems to be getting a lot of attention because it's a fairly complete subset of OpenNI, and it's probably the easiest way to get involved with Kinect/Xtion based vision processing. It's what I'd be using if I didn't have GroG slinging code for me.

Your Hair Looks Like Mine !

I always attributed it to testing too many leads with my tongue :D