UPDATE @ October 2, 2013

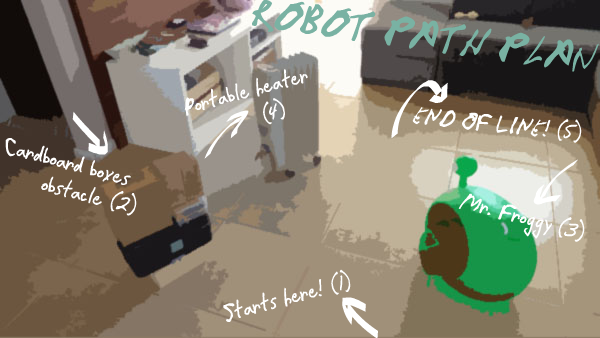

To continue our attempt to light the way for our robots, GroG suggested that I make a video of a simple path with some obstacles. So, below is the video (it is the same as the attached file, but the attached one is at a slightly lower resolution, 320x240).

For those of you who want to help us, just download the path_robot_vision.avi file and use it as the input of OpenCV: select "file" instead of "camera" and insert the file address (file:C:\........). On the Python screen, just write opencv.setMinDelay(30) and execute it (you can adjust the value to whatever you think works better). This call prevents the video from running too fast! Then hit the capture button! You should see the video below on the OpenCV screen.

Then you can add the GoodFeaturesToTrack filter and you will see the optical flow values floating on the obstacles.

Ok... we are on the way! Follow the plan!!! :)

FIRST POST @ September 30, 2013

While working on my multi-task robot, I was thinking about the tasks I want it to be able to do, and I made a little list:

- Remote controlled with XBOX 360 controller (worky);

- Face tracking (worky);

- Speech recognition & TTS (worky, but needs some adjustments);

- Obstacle avoidance with single camera and OpenCV (???).

- Raspberry Pi as the main brain running MRL with all the above services (not tried yet).

Excellent Post Mech

Great references - I've never seen the EVO-HTC one .. it is very good.

Ok, here is what I would suggest next :

We need data !!! - if the data is relevant and accessible then multiple people can work on this problem at once.

So find a good simple area and record, through your robot's eyes and movements, the path it would take ! Make it easy - a few good obstacles and a clear simple path.

Attach it to the post as an .avi file (use compression). That way we are all working on the same data. Then we can start building filters and algorithms which solve the problem, and we can compare and contrast our results :)

Great start !

Yay ! we have data !

Great movie !

Now we'll be able to EVOLVE the solution !

Here is how we might begin: for all this data, the correct path is an unknown .. and there is a BIGGER unknown, the computer not knowing what an obstacle is.

To teach a computer about obstacles.. we need to start with all the information which does not change.

The information which does not change is:

Right now I can see the Good Features filter has several bugs with the gui ... which I will fix first .. but the idea will be to get rough estimates of distances based on how the points move... This will be accomplished with LKOpticalTrack, after GoodFeatures (GF) has found good features to track..
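Here is a rough sketch of how moving points could give distance estimates. It assumes the robot drives straight forward by a known distance between two frames, a simple pinhole camera, and that pixel positions are measured from the focus of expansion (assumed to sit at the image center). The function name and the sample numbers are made up for illustration; this is not MRL or OpenCV code.

```python
def depth_from_expansion(r_before, r_after, forward_travel):
    """Estimate the depth of a tracked point at the first frame.

    r_before, r_after: distance (pixels) of the point from the focus of
        expansion in the first and second frame.
    forward_travel: how far the camera moved straight ahead between the
        frames (the result uses the same length unit).

    Pinhole model: r = f * X / Z, so r_after / r_before = Z / (Z - d),
    which gives Z = d * r_after / (r_after - r_before).
    """
    growth = r_after - r_before
    if growth <= 0:
        # Point did not move outward: very far away, or tracking noise.
        return float("inf")
    return forward_travel * r_after / growth


# Hypothetical tracked points: (r_before, r_after) in pixels.
# The robot is assumed to have moved 0.10 m forward between frames.
tracks = {"near box": (40.0, 50.0), "far wall": (40.0, 41.0)}
depths = {name: depth_from_expansion(r0, r1, 0.10)
          for name, (r0, r1) in tracks.items()}
for name, z in depths.items():
    print(f"{name}: about {z:.2f} m")
```

Points that spread out quickly between frames are close, points that barely move are far. Points very near the image center move so little that their estimates would be noisy, so they are probably best ignored.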

Other static info

Later down the road, the bot will also need to know how big it is in order to find a path through obstacles, unless it's going to rely on bump sensors to tell it that it won't fit through the gap it found between obstacles.

In order to judge the size of the gap it needs to also figure out if the obstacles bounding the gap are the same distance away. If they're not, the gap may be wider than it looks. It would be cool for the code to be able to estimate the gap size before seeing it at a perpendicular angle. That way it can plan the path better if there are three obstacles forming 2 gaps. Pick the path that looks like it leads to the bigger gap.
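As a sketch of the gap idea: if we somehow have a distance and a bearing for each obstacle edge (both are assumed inputs here, and getting them from one camera is the hard part), the real width of the gap follows from the law of cosines. `gap_width` and `pick_gap` are hypothetical helper names, and the scene numbers are invented.

```python
import math


def gap_width(dist_a, bearing_a_deg, dist_b, bearing_b_deg):
    """Straight-line distance between two obstacle edges.

    Each edge is given as a distance from the camera and a bearing in
    degrees (0 = straight ahead, positive = to the right).
    """
    angle = math.radians(bearing_b_deg - bearing_a_deg)
    # Law of cosines on the triangle camera / edge A / edge B.
    return math.sqrt(dist_a**2 + dist_b**2
                     - 2 * dist_a * dist_b * math.cos(angle))


def pick_gap(gaps, robot_width, margin=0.05):
    """Return the name of the widest gap the robot fits through, or None."""
    fitting = {name: w for name, w in gaps.items()
               if w >= robot_width + margin}
    return max(fitting, key=fitting.get) if fitting else None


# Hypothetical scene: three obstacle edges forming two gaps
# (distance in metres, bearing in degrees).
left, middle, right = (1.0, -30.0), (1.5, 0.0), (1.0, 25.0)
gaps = {
    "left gap": gap_width(*left, *middle),
    "right gap": gap_width(*middle, *right),
}
best = pick_gap(gaps, robot_width=0.30)
print(gaps, best)
```

With these made-up numbers the left gap comes out wider (about 0.81 m versus 0.73 m). Because the distances to the two edges enter the formula separately, a gap between obstacles at different depths is wider than the same angular gap between obstacles at equal depth.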

These are problems for after it learns to detect obstacles, but they are worth keeping in mind ahead of time.

Ya .. all good points..

Great points KMC...

It seems trivial, but it would be very useful if obstacle detection began by putting in the X,Y,Z measurements of your robot, for all the reasons KMC has mentioned.

Additionally, the sensor (camera) location in that X,Y,Z cube too .. and the field of view of the camera...

The more information you know before you start moving - the better obstacle avoidance you can do.
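A minimal sketch of what that "known before moving" information could look like in code. The class and field names are hypothetical and the numbers are placeholders, not measurements of any real robot.

```python
import math
from dataclasses import dataclass


@dataclass
class RobotModel:
    # All lengths in metres.
    width: float            # X: side to side
    length: float           # Y: front to back
    height: float           # Z: floor to top
    camera_height: float    # camera position above the floor
    camera_hfov_deg: float  # horizontal field of view of the camera

    def visible_width_at(self, distance):
        """Width of the scene the camera covers at a given distance."""
        half_angle = math.radians(self.camera_hfov_deg) / 2
        return 2 * distance * math.tan(half_angle)

    def fits(self, gap_width, clearance_height, margin=0.05):
        """True if the body fits through an opening with a safety margin."""
        return (gap_width >= self.width + margin
                and clearance_height >= self.height + margin)


# Placeholder values for illustration only.
bot = RobotModel(width=0.30, length=0.40, height=0.25,
                 camera_height=0.20, camera_hfov_deg=60.0)
print(bot.visible_width_at(1.0))
print(bot.fits(gap_width=0.40, clearance_height=0.50))
```

The same structure could later feed a 3D model of the robot, since it already holds the bounding box and the camera placement.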

People have all this info about themselves .. since they have been living with themselves all their lives... imagine if you were suddenly 1 foot taller .. how many times you would bonk your head :D

Also, this information will lead us to "model" the robot in 3D space - which will serve as the simulator while the robot "thinks" about the optimal path.

"imagine if you were suddenly 1 foot taller"

That is part of why kids are so clumsy. They develop some muscle memory for a task based on one set of bone lengths and balance points, and then they hit a growth spurt and the training is off and needs to be recalibrated.

Another interesting example was when I went bowling with someone who used to be in a league but had stopped before gaining a lot of weight. When his muscle memory kicked in, it didn't know about his new center of gravity and he wound up on the floor. Luckily he was able to laugh it off.

Robot Video 2

Ya it's blurry - cameras are lighter, so they are affected by the vibration of the power train & treads ?

This is not good for GoodFeaturesToTrack - if you look at the frame below

the values represent the ratio of frames in which the same pixel was repeatedly selected as a GoodFeatures point. The bigger the number (the biggest being 1.0), the better and more stable the point...
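To make the ratio concrete, here is one way such a stability value could be computed. It is only a sketch of the idea, not the actual filter code: `feature_stability` is a hypothetical name and the detections are invented.

```python
from collections import Counter


def feature_stability(frames):
    """Fraction of frames in which each pixel was picked as a feature.

    frames: list of per-frame collections of (x, y) feature points.
    Returns {(x, y): ratio}; 1.0 means the pixel was picked in every frame.
    """
    counts = Counter()
    for points in frames:
        # set() so a pixel counts at most once per frame.
        counts.update(set(points))
    total = len(frames)
    return {pt: n / total for pt, n in counts.items()} if total else {}


# Hypothetical detections over 4 frames; the second point jitters.
frames = [
    [(10, 20), (50, 60)],
    [(10, 20), (51, 60)],
    [(10, 20), (50, 60)],
    [(10, 20)],
]
stability = feature_stability(frames)
stable = [pt for pt, ratio in stability.items() if ratio >= 0.75]
print(stability, stable)
```

A vibrating camera behaves like the jittering point here: the same physical corner lands on slightly different pixels from frame to frame, so its count is split and no single pixel gets a high ratio.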

Ya vibration makes tracking difficult ..

But !

This is FloorFinder, which usually requires a blur - it spreads across pixels of the same value, and when the images are sharp it is often reduced to only a few pixels. But with a blur, it will spread further.. for the most part .. it got the floor correctly (there is a part at the beginning where the floor finder runs up a white box)

This filter (with tuning) would be helpful for finding possible paths.
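The spreading behaviour, and why a blur helps, can be sketched with a plain flood fill. This is an assumption about how such a filter might work, not the FloorFinder source: the seed is assumed to be a floor pixel at the bottom center, neighbours are compared with each other rather than with the seed, and the tiny "image" is invented.

```python
from collections import deque


def find_floor(image, seed, tolerance):
    """Flood fill from a seed pixel across neighbours of similar value.

    image: 2D list of grayscale values.
    seed: (row, col) assumed to be floor.
    tolerance: max allowed difference between neighbouring pixels.
    Returns the set of (row, col) pixels reached.
    """
    rows, cols = len(image), len(image[0])
    floor = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in floor
                    and abs(image[nr][nc] - image[r][c]) <= tolerance):
                floor.add((nr, nc))
                queue.append((nr, nc))
    return floor


def box_blur(image):
    """3x3 mean blur; border pixels average whatever neighbours exist."""
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            window = [image[rr][cc]
                      for rr in range(max(0, r - 1), min(rows, r + 2))
                      for cc in range(max(0, c - 1), min(cols, c + 2))]
            out_row.append(sum(window) / len(window))
        out.append(out_row)
    return out


# Invented frame: bright obstacle on top, sharply textured floor below.
image = [
    [200, 200, 200, 200, 200],
    [200, 200, 200, 200, 200],
    [50, 80, 50, 80, 50],
    [80, 50, 80, 50, 80],
    [50, 80, 50, 80, 50],
]
seed = (4, 2)
sharp_floor = find_floor(image, seed, tolerance=10)
blurred_floor = find_floor(box_blur(image), seed, tolerance=10)
print(len(sharp_floor), len(blurred_floor))
```

On the sharp frame the fill is stuck on the seed pixel because the floor texture jumps by more than the tolerance. After the blur, the texture evens out and the fill covers much more of the floor, while still stopping short of the bright obstacle.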