Recently I listened to a podcast about P-Zombies, Zimboes and Artificial Intelligence (the-dilemma-of-machine-consciousness-and-p-zombies).

The part I was interested in was how a "Zimboe" was defined. A Zimboe is the lowest common denominator for consciousness, and the primary requirements for a Zimboe are:

For consciousness :

- You must have some mind & emotional state

- You must be able to observe, reflect or examine this mind/emotional state

There were many more "tests" and definitions, but these 2 rules seemed relatively easy to work with.

I created the beginning of an Emoji service some time back, because I thought it would be fun to have a pictorial view of a robot's emotional state. Now I'm interested in combining it with what I've learned from the podcast.

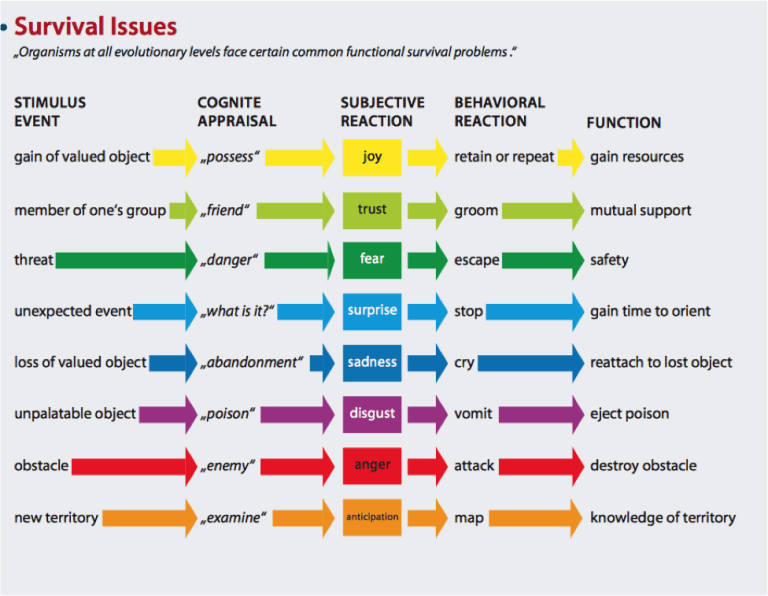

In order to start, I figured I'd search for a simple graph of emotional states, and Robert Plutchik's name quickly came up.

Here is his graph

Cool ! ...

So my next task is to create some representation of this within the Emoji service. We can start by mapping this graph to emoji characters.

| emoji | state | unicode | description |
|---|---|---|---|
| 😐 | neutral | 1f610 | Neutral face |
| 🤣 | ecstasy | 1f923 | Rolling on the Floor Laughing |
| 😂 | joy | 1f602 | Face with Tears of Joy |
| 😌 | serenity | 1f60c | Relieved Face |
| 🤩 | admiration | 1f929 | Star Struck |
| 🙂 | trust | 1f642 | Slightly smiling face |
| 🙂 | acceptance | 1f642 | Slightly smiling face |
| 😱 | terror | 1f631 | Face screaming in fear |
| 😨 | fear | 1f628 | Fearful face |
| 😧 | apprehension | 1f627 | Anguished face |
| 🤯 | amazement | 1f92f | Head blown - exploding head |
| 🤭 | surprise | 1f92d | Face with hand over mouth |
| 🤨 | distraction | 1f928 | Face with raised eyebrow |
| 😭 | grief | 1f62d | Loudly crying face |
| 😢 | sadness | 1f622 | Crying face |
| 😔 | pensiveness | 1f614 | Pensive face |
| 😝 | loathing | 1f61d | Squinting face with tongue |
| 😣 | disgust | 1f623 | Persevering face |
| 🙄 | boredom | 1f644 | Face with rolling eyes |
| 😠 | rage | 1f620 | Angry face |
| 😡 | anger | 1f621 | Pouting face |
| ☹ | annoyance | 2639 | Frowning face |
| 🤔 | vigilance | 1f914 | Thinking face |
| 🤔 | anticipation | 1f914 | Thinking face |
| 🤔 | interest | 1f914 | Thinking face |
| 🤮 | vomiting | 1f92e | Face vomiting - Core Dump ! |
| 🤢 | sick | 1f922 | Nauseated face |
| 🤒 | ill | 1f912 | Face with thermometer |
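The mapping in the table can be sketched as a simple lookup. This is only an illustration, not the Emoji service's actual code: the names `EMOJI_CODES` and `emoji_for` are hypothetical, and only a handful of rows from the table are included.

```python
# Hypothetical lookup: state name -> Unicode code point (hex), copied from the table.
EMOJI_CODES = {
    "neutral": "1f610",
    "joy": "1f602",
    "fear": "1f628",
    "annoyance": "2639",
    "vomiting": "1f92e",
}


def emoji_for(state: str) -> str:
    """Return the emoji character for a state, falling back to neutral."""
    code = EMOJI_CODES.get(state, EMOJI_CODES["neutral"])
    # The table stores code points as hex strings, so parse with base 16.
    return chr(int(code, 16))


print(emoji_for("joy"))
```

Storing the code point rather than the character keeps the table readable in editors and terminals that cannot render emoji.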

The next task would be to create methods in the Emoji service which could evaluate or accept changes in state, and a timer which would "age" things. In the most simplistic scenario, it could listen to its own logging and, depending on the type of log entries currently being written, decide on a "Mind/Emotional State".

E.g. ERROR log entries push the state into apprehension, fear, terror, while clean logs might increase the serenity, joy, ecstasy level.
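A minimal sketch of that idea, using Python's standard `logging` module rather than the MRL logging the service would really listen to. It assumes a single simplified axis running from terror to ecstasy; the class name `MoodHandler` and the one-step-per-record rule are made up for illustration.

```python
import logging

# Assumption: one simplified axis, most fearful on the left, happiest on the right.
SCALE = ["terror", "fear", "apprehension", "neutral", "serenity", "joy", "ecstasy"]


class MoodHandler(logging.Handler):
    """Logging handler that nudges a mood index for every record it sees."""

    def __init__(self):
        super().__init__()
        self.index = SCALE.index("neutral")

    def emit(self, record):
        if record.levelno >= logging.ERROR:
            step = -1          # errors push toward terror
        elif record.levelno <= logging.INFO:
            step = 1           # clean entries push toward ecstasy
        else:
            step = 0           # warnings leave the mood alone
        # Clamp so the index always stays on the scale.
        self.index = max(0, min(len(SCALE) - 1, self.index + step))

    @property
    def state(self):
        return SCALE[self.index]


log = logging.getLogger("emoji-demo")
log.setLevel(logging.INFO)
log.propagate = False
mood = MoodHandler()
log.addHandler(mood)

log.error("servo timeout")
log.error("servo timeout")
print(mood.state)  # fear
```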

Other inputs could be wired to drive different levels of the emotional graph.

But all this "input" is less than half the system. It should "publish" its state so that it potentially influences other services. A fun example might be the way it changes the statistics of certain AIML responses in ProgramAB or Voice choices or sound files while synthesizing speech.

For example, when power levels are low, it becomes fearful and publishes this info to ProgramAB and the AbstractSpeechSynthesis. You may now get "different" responses than you typically expect. Additionally, the Emoji service will simply display which emotional state is active. Isn't this nice? People can be so much more difficult ;) And then you can ask, "Why are you afraid?" The robot evaluates/reflects on how its emotional state moved to its current position, and replies: "Because I'm feeling close to death ... Where is an outlet?"
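The publish-and-reflect idea could look roughly like this. It is a sketch under assumptions: `EmotionPublisher`, `subscribe`, `change` and `why` are hypothetical names, and plain callbacks stand in for whatever MRL's publish/subscribe wiring would provide to ProgramAB or speech synthesis.

```python
from typing import Callable, List, Tuple


class EmotionPublisher:
    """Holds the current state, notifies listeners, and remembers why it changed."""

    def __init__(self):
        self.state = "neutral"
        self.history: List[Tuple[str, str]] = []          # (cause, new_state)
        self.listeners: List[Callable[[str], None]] = []

    def subscribe(self, listener: Callable[[str], None]) -> None:
        self.listeners.append(listener)

    def change(self, new_state: str, cause: str) -> None:
        self.state = new_state
        self.history.append((cause, new_state))
        for listener in self.listeners:
            listener(new_state)                           # "publish" the new state

    def why(self) -> str:
        """Reflect on the most recent transition."""
        if not self.history:
            return "I feel neutral."
        cause, state = self.history[-1]
        return f"I am feeling {state} because {cause}."


emo = EmotionPublisher()
received = []
emo.subscribe(received.append)        # stand-in for a chatbot or speech service
emo.change("fear", "power levels are low")
print(emo.why())
```

Keeping the history is what covers the second Zimboe rule: the service can examine how it arrived at its current state instead of only reporting it.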

For us this graph works better because now we have 4 axes our internal state can drift around on.

I'm going to implement an FSM (finite state machine) whose internal emotional state can be described on this graph as it moves around, affected by events which transition between different emotions.

For finite state machines the following describes their core :

State(S) x Event(E) -> Actions(A), State(S')

These relations are interpreted as follows: if we are in state S and the event E occurs, we should perform action(s) A and make a transition to the state S'.

The states are well defined. Now I just need to create the Events & Transitions.

e.g.

State(Neutral) x Event(bad) -> Actions("publish I'm annoyed"), State("annoyed")
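The relation State x Event -> Actions, State' can be sketched as a plain lookup table. Only the first entry comes from the example above; the other transitions, the event name "clear" as used here, and the function name `fire` are assumptions for illustration. This does not use any FSM library's API.

```python
# (state, event) -> (action, next_state)
TRANSITIONS = {
    ("neutral", "bad"): ("publish I'm annoyed", "annoyed"),   # from the example above
    ("annoyed", "bad"): ("publish I'm angry", "anger"),       # hypothetical
    ("annoyed", "clear"): ("publish I'm calm", "neutral"),    # hypothetical
}


def fire(state: str, event: str):
    """Return (action, next_state); unknown pairs keep the state and do nothing."""
    return TRANSITIONS.get((state, event), (None, state))


state = "neutral"
action, state = fire(state, "bad")
print(action, "->", state)
```

Because the table is just data, the same `fire` function can drive any state diagram, not only the emotional one.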

Found what I believe is a great FSM library - very simple, very concise here https://github.com/j-easy/easy-states

With it I've made all the above states; now it's time to start describing the transitions and events.

I've started a diagram here which is editable - https://docs.google.com/drawings/d/1GBiKV9V-IQZjn6V1JFV6GGpf6pGpEUr_a6Jb_rdJK10/edit

At the moment I have this :

[Image: state diagram]

It's just showing how you can get to a vomiting state when you encounter 3 errors in a small amount of time.

This could be achievable easily by subscribing to errors. Also the Emoji service will have a timer which fires a "clear" event at some interval .. and that in turn has the possibility of moving the state back to "neutral"
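A minimal sketch of the "3 errors in a small amount of time" rule plus the "clear" event. The class name `ErrorWatcher`, the 10-second window and the direct jump to "vomiting" are assumptions; the real diagram may pass through intermediate states. Timestamps are passed in explicitly so the behaviour is easy to test without a real timer.

```python
from collections import deque


class ErrorWatcher:
    """Moves to 'vomiting' when `limit` errors arrive within `window` seconds."""

    def __init__(self, limit: int = 3, window: float = 10.0):
        self.limit = limit
        self.window = window
        self.stamps = deque()      # timestamps of recent errors
        self.state = "neutral"

    def on_error(self, now: float) -> str:
        self.stamps.append(now)
        # Forget errors that have aged out of the window.
        while self.stamps and now - self.stamps[0] > self.window:
            self.stamps.popleft()
        if len(self.stamps) >= self.limit:
            self.state = "vomiting"
        return self.state

    def on_clear(self) -> str:
        """What a periodic 'clear' timer event would call."""
        self.stamps.clear()
        self.state = "neutral"
        return self.state


watcher = ErrorWatcher()
for t in (0.0, 1.0, 2.0):
    watcher.on_error(t)
print(watcher.state)  # vomiting
```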



Hello Grog,

I really like this concept!

How far along are you with setting it up in MRL?

It would be fantastic to use this in conjunction with programAB.


It's working now.

The part that I'm very happy about is the FSM is written in a very general way - and can be configured to manage "any" state diagram. The emotional thingy is just a single example.

The part I'm a little fuzzy on is what "Events" are and how they are characterized.

We can be sure that when an "anger" Event arrives our state machine will travel in the expected direction, but how/when/where does an anger event originate? I guess one source could be ProgramAB using OOB "emotional" event tags. Maybe this is what you're thinking about?

Heh, however, I completely got distracted with Servos at the moment ;)


Yes, I think the user talking to the robot will be the first source of events that make the robot change state.

Because InMoov currently has no facial gestures other than moving the eyes and the jaw, expressing emotions is more complex. So I guess for now they should mainly be expressed via language or body language (gestures).

Kwatters might have an idea of how we could currently classify AIML responses according to states without having to rebuild a complete chatbot.

I was looking into this AIML which has some (bot name="feelings"), this was certainly used with emoticons via a screen.

https://github.com/MyRobotLab/pyrobotlab/blob/develop/home/kwatters/harry/bots/harry/aiml/emotion.aiml#L26

If we would add something like which would allow programAB to only select the corresponding state responses, it could be a way: