It Worky(ish) ...

This is Work-E doing a very short run towards the door, because there is little room in my office. The OpenCV service can now save "frames" of Kinect data, which I found extremely useful. Great for testing without having to run the "real" robot.

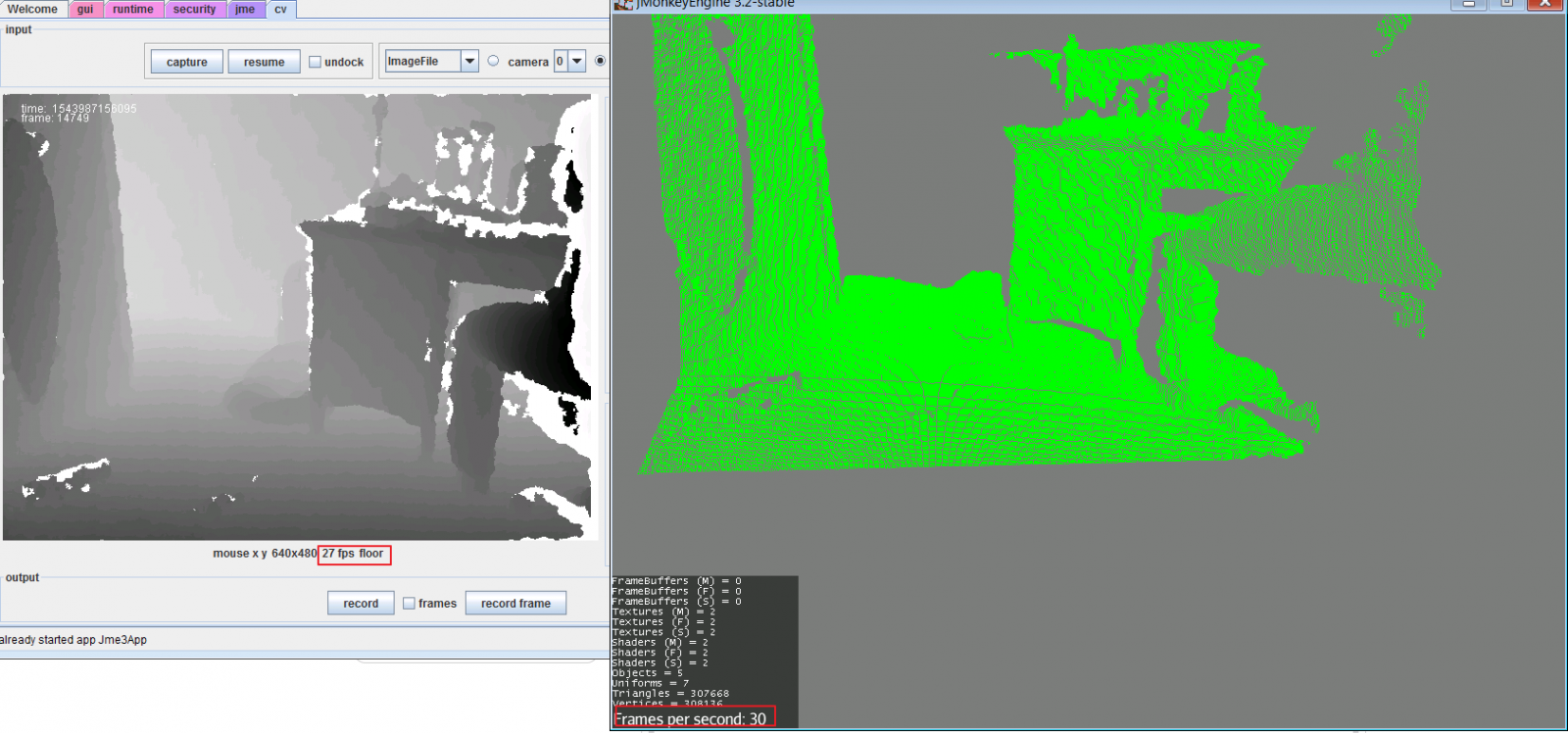

OpenCV service can publish PointClouds - and JMonkeyService listens for them. I believe I found some useful links on updating the point cloud mesh in JMonkey as quickly as possible.

reference : https://hub.jmonkeyengine.org/t/updating-mesh-vertices/25088/7
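One general way to keep per-frame updates cheap is to allocate the vertex buffer once and overwrite it every frame instead of building a new mesh. The sketch below uses only `java.nio`; the class name `PointCloudBuffer` and its methods are made up for illustration and are not part of the OpenCV service or JMonkeyService. In jMonkeyEngine the refilled buffer would then be handed back to the mesh's position buffer (I believe `VertexBuffer.updateData` is the call for that, but check the thread linked above).

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

/** Hypothetical holder for a reusable, preallocated xyz vertex buffer. */
final class PointCloudBuffer {
    private final FloatBuffer positions;
    private final int capacityPoints;

    PointCloudBuffer(int maxPoints) {
        capacityPoints = maxPoints;
        // Direct buffer in native byte order: allocated once, reused every frame.
        positions = ByteBuffer.allocateDirect(maxPoints * 3 * Float.BYTES)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer();
    }

    /**
     * Overwrites the buffer with packed x,y,z values.
     * Points beyond the preallocated capacity are dropped.
     * Returns the number of points actually written.
     */
    int update(float[] xyz) {
        int points = Math.min(xyz.length / 3, capacityPoints);
        positions.clear();                 // position = 0, limit = capacity
        positions.put(xyz, 0, points * 3); // copy this frame's points
        positions.flip();                  // limit = floats written, position = 0
        return points;
    }

    /** The same buffer instance every frame; its limit marks the valid data. */
    FloatBuffer buffer() {
        return positions;
    }
}
```

Usage would be one `new PointCloudBuffer(640 * 480)` at startup, then `update(frameXyz)` for each published point cloud.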

The fps seems quite satisfactory.

So what's next?

- lots of cleanup/polishing

- the 3D mesh in JMonkey still suffers from a trigonometric distortion: the polar coordinates of the Kinect are not being correctly converted into real-world xyz Cartesian coordinates.

- add color like Mats did :)

- right now there is only one viewport/pointcloud buffer. As the camera turns, I want to leave culled and strategically meshed buffers behind. Less detail, less memory, but begin to stitch the world together.

- looking at some of the SLAM strategies, I know one of the challenges is to "re-connect" - when the camera moves around randomly and does a 360 ....

- turning around and realizing you're back where you started actually takes "recognition" or a very precise gyro/accelerometer/compass ... but we want to recognize key features and MAP .. (the "M" of SLAM)

- Get Yolo to start publishing bounding boxes and labels of identified objects in JMonkey 3D land, so we can find the beer bottle in the room...
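For the distortion item in the list above, one possible cause is the depth-to-xyz step itself. A common approach is pinhole back-projection: if the sensor reports depth along the optical axis, then x and y scale with that depth and with the pixel's offset from the image center. The sketch below assumes that model. The intrinsics (`FX`, `FY`, `CX`, `CY`) are placeholder values, not calibrated numbers, and the class and method names are made up for illustration. If the values are actually radial range (distance along the ray), `rangeToZ` shows the extra conversion.

```java
import java.util.Arrays;

/** Sketch: back-project depth pixels into camera-frame XYZ using a pinhole model. */
final class DepthToXyz {
    // Assumed intrinsics for a 640x480 depth image. Placeholders only:
    // replace them with values from calibrating your own sensor.
    static final float FX = 580f, FY = 580f; // focal lengths in pixels
    static final float CX = 320f, CY = 240f; // principal point in pixels

    /** Pixel (u, v) with depth z (metres along the optical axis) to {x, y, z}. */
    static float[] project(int u, int v, float z) {
        float x = (u - CX) * z / FX;
        float y = (CY - v) * z / FY; // image rows grow downward, so flip for +y up
        return new float[] { x, y, z };
    }

    /** If the sensor reports radial range instead, convert it to axial depth first. */
    static float rangeToZ(int u, int v, float range) {
        float nx = (u - CX) / FX;
        float ny = (v - CY) / FY;
        // The ray through the pixel is (nx, ny, 1); divide the range by its length.
        return range / (float) Math.sqrt(nx * nx + ny * ny + 1f);
    }

    /** Row-major depth frame in millimetres to packed x,y,z floats in metres. */
    static float[] toPointCloud(short[] depthMm, int width, int height) {
        float[] tmp = new float[width * height * 3];
        int n = 0;
        for (int v = 0; v < height; v++) {
            for (int u = 0; u < width; u++) {
                int mm = depthMm[v * width + u] & 0xFFFF; // treat as unsigned 16-bit
                if (mm == 0) {
                    continue; // 0 is assumed to mean "no reading"
                }
                float[] p = project(u, v, mm / 1000f);
                tmp[n++] = p[0];
                tmp[n++] = p[1];
                tmp[n++] = p[2];
            }
        }
        return Arrays.copyOf(tmp, n); // keep only the valid points
    }
}
```

Treating axial depth as if it were range (or the reverse) tends to bend flat walls into curves, which is one distortion worth ruling out.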

This is near where Calamity is I think... - so I'm starting to catch up. I saw a bunch of collision references in his IntegratedMovement service which is exciting.

So what should calculate collisions? Preferably, I like systems where graphics are not mandatory to do the calculations. So much to play with ... so little time..

Gonna start needing to organize all this stuff with a search engine ....


Great work Grog, that looks much better than my implementation. It seems much faster, but I was also doing other transformations that I think slow down the computation in what I implemented. Your work seems very promising.

For the collision detection, whatever computes the fastest will be the best. The complexity I found when working on mine is that you don't want to know if there is a collision, you want to know if there 'will be' a collision. You don't want Work-E to stop after he hits your furniture, but before he chips it. So you need to find a way to predict the collision.

The simplest way to do it is just to stop when it gets close to 'something', but that also means it will never get close to anything. That's not what I want to do with the inMoov model, as ultimately I want it to be able to grab something (I'm still not there).

The strategy I use is to ask, considering the movements currently going on, will a collision happen in 0.1s, 0.2s ... up to 1s or 2s? That makes a huge load of computation, which is the reason I did not use the point cloud directly but instead a basic geometric shape that best explains the point cloud. While far from perfect, the result is surprisingly good. This is also why I added the velocity parameter to the DHLink class (I have seen some comments asking why it's there).
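The look-ahead idea described above can be sketched roughly as follows. This is not the IntegratedMovement code: it assumes spheres as the "basic geometric shape" and constant linear velocity over the horizon, and the names `MovingSphere` and `firstCollisionTime` are made up for illustration.

```java
import java.util.OptionalDouble;

/** Sketch: step forward in time and report the first predicted overlap. */
final class CollisionLookahead {

    /** Hypothetical primitive: a sphere moving at constant velocity (metres, m/s). */
    static final class MovingSphere {
        final double x, y, z, vx, vy, vz, radius;

        MovingSphere(double x, double y, double z,
                     double vx, double vy, double vz, double radius) {
            this.x = x; this.y = y; this.z = z;
            this.vx = vx; this.vy = vy; this.vz = vz;
            this.radius = radius;
        }

        /** Predicted center position t seconds from now. */
        double[] positionAt(double t) {
            return new double[] { x + vx * t, y + vy * t, z + vz * t };
        }
    }

    /** True if the two spheres touch or overlap t seconds from now. */
    static boolean overlapAt(MovingSphere a, MovingSphere b, double t) {
        double[] pa = a.positionAt(t);
        double[] pb = b.positionAt(t);
        double dx = pa[0] - pb[0], dy = pa[1] - pb[1], dz = pa[2] - pb[2];
        double r = a.radius + b.radius;
        return dx * dx + dy * dy + dz * dz <= r * r; // compare squared distances
    }

    /** Checks t = 0, step, 2*step ... horizon; returns the first colliding time. */
    static OptionalDouble firstCollisionTime(MovingSphere a, MovingSphere b,
                                             double step, double horizon) {
        int steps = (int) Math.round(horizon / step);
        for (int i = 0; i <= steps; i++) {
            double t = i * step;
            if (overlapAt(a, b, t)) {
                return OptionalDouble.of(t);
            }
        }
        return OptionalDouble.empty(); // nothing predicted within the horizon
    }
}
```

Something like `firstCollisionTime(gripper, table, 0.1, 2.0)` returns the earliest sampled time at which the shapes overlap, so the robot can slow down or stop before contact instead of after. A fast object can pass through a thin obstacle between samples, so the step size has to match the speeds involved.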

I'm very excited about what you're currently doing.


Thanks for the words of encouragement Calamity. I'm having fun and learning some math I should have learned long ago ;)

Right now I'm trying to figure out the correct kinect spherical transformation to do for accurate JMonkey 3d (x,y,z) .. Perhaps you have it in your Mesh3D.

Currently I'm looking into how Apache Math does it ... and they find the Jacobian first ... so much stuff to learn.


Looking at my code, I found out that I have a lot of cleaning to do. I tried many things but in the end did not use some parts of it.

To get the 3D (x,y,z), it looks like I use OpenNI to do the transformation (data found in OpenNiData.depthMapRW).