It's been a goal of mine (ours?) for a long time to have a proper telepresent InMoov. I believe Lloyd is that. There is a new branch of MyRobotLab called "lloyd", based off the develop branch. This is where I'll continue work on my customizations without horribly breaking things that are already working.

Let's talk about Lloyd. Lloyd is named after the bartender from the movie The Shining.

As it turns out, this is the same actor who played Tyrell in Blade Runner.

Lloyd has a brother named Harry, but I'm not really sure what that means when it comes to robots. It gets especially blurry seeing as they have swapped arms and hands over the years. Harry is named for a slightly more family-friendly character from the movie Dumb and Dumber...

But anyway, I digress... What does this all have to do with Lloyd, or Harry, or whatever you call it? This is an evolution of the InMoov robot, so let's talk about that.

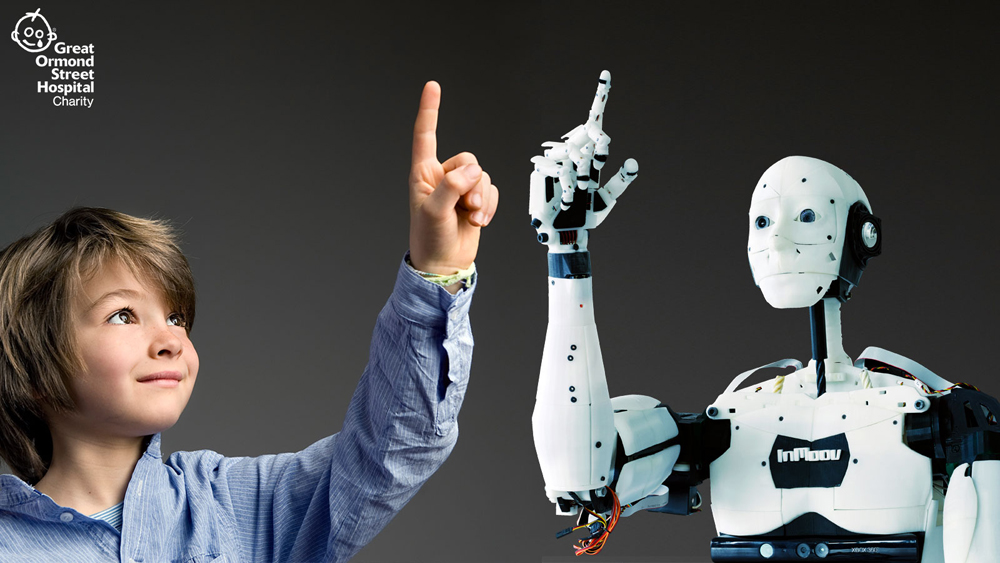

In a way, this work both inspired and was inspired by the RobotsForGood project. It's a great project that was (is?) hosted at WeVolver. The idea is that sick kids in a hospital could put on an Oculus Rift and drive around an InMoov at the zoo right from their own hospital beds. It's a great cause, and I'm happy that I helped out with it in some way.

I had the chance to meet the WeVolver guys at the NYC Maker Faire. This was also when I met Gael and Greg in person for the first time. It was really something else, an incredibly tiring weekend, but so much fun. That experience has helped inspire me to continue all of the work here with the MyRobotLab project.

So... where does this leave us? Oh right, robots.. telepresent robots. WeVolver (Richard Huskies) was speaking at the RedHat Summit (a conference held in San Francisco) about the RobotsForGood project. They invited me out, with my InMoov, to help with the presentation. I was very happy to help out, but it definitely meant that, on stage, in front of a couple thousand people, the robot needed to work. And.. Hooray! It was worky!

I was able to sit in the audience, watching the presentation, while remotely controlling the robot's movements. The two cameras in the robot's head were streamed live to the audience on the "big screen".

There I was, like the mighty Oz, able to control the robot's movements from the audience and, on cue, have the robot wave to say hello, all while streaming stereoscopic vision from its eyes to the big screen. It was a big day and I felt very happy with how MyRobotLab performed. The image quality of the cameras was horrible, but I didn't really care. It worked.

Let's fast forward a few years, to now. Yes, then became now. It seems like it happened too soon.

Anyway..

So, now we're coming up on the Nixie release of MyRobotLab, and I'm preparing for both the Boston Maker Faire (Oct 6-7) and the Activate Conference in Montreal (Oct 17). The lloyd branch is where I am doing most of the work to prepare for the showing at the Boston Maker Faire, as well as for the presentation I will be giving at the Activate Conference.

So, my hope is to take the presentation to the next level compared to the RobotsForGood presentation. Previously, at the RedHat Summit, the robot was controlled using the WebGui; however, that was just a "quick and dirty" approach to validate the proof of concept.

The approach is as follows:

Lloyd will consist of an InMoov robot with two Raspberry Pis.

One Raspberry Pi is responsible for serving the MJPEG video streams, one for the camera in each eye. We won't ask this Raspberry Pi 3 to do anything more than that. As it turns out, high-resolution video streaming is a pretty demanding task for a Raspberry Pi, so it's best not to ask too much of it.
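Splitting an MJPEG feed back into individual pictures is simple enough to sketch. The Python below is an illustration, not MyRobotLab's video code: it assumes the stream arrives as raw bytes (for example from an HTTP multipart response) and cuts out everything between each JPEG start-of-image marker (`FF D8`) and the following end-of-image marker (`FF D9`), keeping any partial frame for the next read.

```python
# Sketch only: recover complete JPEG frames from a chunk of MJPEG bytes.
SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def split_jpeg_frames(buffer):
    """Return (frames, leftover).

    frames   -- complete JPEG byte strings found in buffer, in order
    leftover -- the tail holding the start of an unfinished frame (or b"")
    """
    frames = []
    pos = 0
    while True:
        start = buffer.find(SOI, pos)
        if start < 0:
            # No further frame start: whatever remains is multipart noise.
            return frames, b""
        end = buffer.find(EOI, start + 2)
        if end < 0:
            # Frame not finished yet: keep it and append the next read to it.
            return frames, buffer[start:]
        frames.append(buffer[start:end + 2])
        pos = end + 2


chunk = (b"--frame\r\nContent-Type: image/jpeg\r\n\r\n"
         b"\xff\xd8AAA\xff\xd9\r\n--frame\r\n\r\n\xff\xd8BB")
frames, leftover = split_jpeg_frames(chunk)
print(len(frames), leftover)
```

A more robust reader would use each part's `Content-Length` header when the server provides one, because a JPEG carrying an embedded thumbnail can contain an extra `FF D9` before the real end of the image.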

A second Raspberry Pi will be installed in the InMoov to run MyRobotLab. This instance of MyRobotLab will connect to the two Arduinos that control all of the servos in the InMoov. It will do nothing more than control the Arduinos and servos.

The third part of this equation is my laptop, which is where I will run a second instance of MyRobotLab with the OculusRift service. This can currently attach to the video streams from Harry's head, which means I can now stream the stereo video to the Oculus Rift and see out of the robot's head.

Each video stream runs through an OpenCV instance that applies a few filters to the video feed before it is ultimately displayed in the Oculus. The filters perform an affine transform to translate and rotate the image to achieve stereo calibration and convergence. This means that when you view the video through the Oculus, you have depth perception and see in 3D. Hooray!
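The convergence step boils down to one 2x3 affine matrix per eye. As a hedged sketch (the angles and pixel offsets are placeholders, not Lloyd's calibration values), this builds a matrix that rotates about the image center and then shifts the image. It is the same 2x3 form that OpenCV's `cv2.warpAffine(src, M, (width, height))` accepts.

```python
import math


def stereo_affine(angle_deg, tx, ty, cx, cy):
    """2x3 affine matrix: rotate by angle_deg about (cx, cy), then shift by (tx, ty).

    Maps p to R (p - c) + c + t, written out as [[a, b, e], [c, d, f]].
    """
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    return [
        [cos_a, -sin_a, cx - cos_a * cx + sin_a * cy + tx],
        [sin_a, cos_a, cy - sin_a * cx - cos_a * cy + ty],
    ]


def apply_affine(m, x, y):
    """Map a single pixel coordinate through the 2x3 matrix m."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


# Hypothetical 640x480 eyes: nudge the left image right and the right image left
# to move the convergence point, with a small roll correction on one camera.
left_m = stereo_affine(0.0, 12, 0, 320, 240)
right_m = stereo_affine(1.5, -12, 0, 320, 240)
print(apply_affine(left_m, 100, 50))
```

Shifting the two eyes' images in opposite directions moves the point where the views converge, and the small rotation corrects for a camera that isn't mounted perfectly level. OpenCV's `cv2.getRotationMatrix2D` can build the rotation part as well; check its angle sign convention before mixing it with this sketch.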

Additionally, the Oculus Rift provides head-tracking information that can (and will) be relayed to the Raspberry Pi running on Harry to drive the head servos.

This means that as you turn your head and look around, the InMoov robot tracks your movement: when you turn and look to the left, the InMoov's head turns and looks left too. The result is that you see what is to the left of the InMoov through its own eyes, which provides a real sense of presence.
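The mapping from headset orientation to head servos can be as simple as an offset plus a clamp. In this sketch the servo names follow the usual InMoov `rothead`/`neck` naming, but the neutral positions and limits are hypothetical values, and the yaw/pitch inputs are assumed to be degrees with 0 meaning "looking straight ahead".

```python
# Hypothetical calibration values; measure the real ones on your own InMoov.
ROTHEAD_NEUTRAL, ROTHEAD_MIN, ROTHEAD_MAX = 90.0, 30.0, 150.0
NECK_NEUTRAL, NECK_MIN, NECK_MAX = 90.0, 60.0, 120.0


def clamp(value, low, high):
    """Limit value to the closed range [low, high]."""
    return max(low, min(high, value))


def head_pose_to_servos(yaw_deg, pitch_deg, invert_yaw=False):
    """Convert headset yaw/pitch in degrees into (rothead, neck) servo positions.

    Each angle is added to the servo's neutral position and clamped to its
    safe range so the robot never strains against a mechanical stop.
    """
    if invert_yaw:
        yaw_deg = -yaw_deg  # headset and servo disagree on which way is positive
    rothead = clamp(ROTHEAD_NEUTRAL + yaw_deg, ROTHEAD_MIN, ROTHEAD_MAX)
    neck = clamp(NECK_NEUTRAL + pitch_deg, NECK_MIN, NECK_MAX)
    return rothead, neck


print(head_pose_to_servos(-30, 10))
```

Clamping matters more than it looks: the headset will happily report angles the robot's neck cannot reach, and the servo should stop at its limit instead.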

One neat thing that has evolved over the past few years is the availability of good open source options for object recognition and tracking. Because we're using the OpenCV service to power the OculusRift, we can apply filters that do real-time classification using technologies like VGG16 and YOLO, and provide a heads-up display that shows real-time information about the objects you are seeing through the headset.
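Before anything is drawn, the raw detections need to be trimmed to what fits on a heads-up display. The `Detection` record below is a hypothetical structure, not the output format of MyRobotLab's YOLO filter; the sketch keeps confident detections, strongest first, and computes where each caption should go, leaving the actual drawing to something like OpenCV's `cv2.putText`.

```python
from typing import NamedTuple, Tuple


class Detection(NamedTuple):
    """Hypothetical detector output: a class label, a confidence and a box."""
    label: str
    confidence: float               # 0.0 to 1.0
    box: Tuple[int, int, int, int]  # x, y, width, height in pixels


def hud_labels(detections, min_confidence=0.5, max_items=5):
    """Keep confident detections, strongest first, and return
    (caption, x, y) tuples placing each caption just above its box."""
    kept = sorted((d for d in detections if d.confidence >= min_confidence),
                  key=lambda d: d.confidence, reverse=True)[:max_items]
    captions = []
    for d in kept:
        x, y, _, _ = d.box
        # Clamp to the top edge so captions for boxes near the border stay visible.
        captions.append((f"{d.label} {d.confidence:.0%}", x, max(0, y - 5)))
    return captions


frame_detections = [Detection("cup", 0.75, (10, 20, 50, 60)),
                    Detection("person", 0.25, (0, 0, 100, 200)),
                    Detection("dog", 0.5, (200, 3, 40, 40))]
print(hud_labels(frame_detections))
```

Capping the number of captions keeps the display readable when a busy scene produces dozens of low-value boxes.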

Ok.. so this brings me to search. It's one thing to have a bounding box around the object you're seeing, but it's an entirely different thing to be able to display, in real time, information about that object pulled in from a data source such as Wikipedia.

So, freshly added is a Solr filter for OpenCV that can run a search and display information about the search result as an overlay that you can see in the Oculus.
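A lookup like that can be an ordinary request to Solr's `/select` handler. This is not the code of the new OpenCV Solr filter. It is a sketch that assumes a local Solr on the default port 8983, a hypothetical core named `wikipedia`, and hypothetical `title`/`description` fields. It builds the query URL for a recognized label and turns Solr's JSON response into a short caption for the overlay.

```python
import json
from urllib.parse import urlencode

SOLR_BASE = "http://localhost:8983/solr"  # assumption: local Solr, default port
CORE = "wikipedia"                        # hypothetical core of Wikipedia articles


def solr_select_url(label, rows=1):
    """Build a /select query URL that searches the (hypothetical) title field."""
    params = {"q": f'title:"{label}"', "rows": rows, "wt": "json"}
    return f"{SOLR_BASE}/{CORE}/select?{urlencode(params)}"


def overlay_text(response_body, field="description", max_chars=120):
    """Pull a short caption from the first hit of a Solr JSON response."""
    docs = json.loads(response_body)["response"]["docs"]
    if not docs:
        return ""
    text = docs[0].get(field, "")
    if isinstance(text, list):  # multi-valued Solr fields come back as lists
        text = " ".join(text)
    return text if len(text) <= max_chars else text[:max_chars - 3] + "..."


print(solr_select_url("cup"))
```

Fetching the URL (for example with `urllib.request.urlopen`) is left out so the sketch runs without a Solr server. Labels containing quotes or other query syntax would need escaping before going into `q`.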

Yes, that's Augmented Reality...

Ok.. that's not enough though.. let's make it cooler... Some of the next goals are to integrate the Oculus Touch controllers and to use the InverseKinematics service to mimic the hand positions relayed back from the controllers, driving the left and right hands of the InMoov.
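MyRobotLab's InverseKinematics service does its own solving, so the following is not its algorithm. To show the basic idea of turning a tracked hand position into joint angles, it swaps in the textbook closed-form solution for a two-segment arm moving in a plane; the segment lengths and target coordinates are placeholders.

```python
import math


def two_link_ik(x, y, l1, l2, mirror=False):
    """Return (shoulder, elbow) angles in radians that put the tip of a planar
    two-segment arm (lengths l1, l2) at (x, y), or None if out of reach.

    mirror=True picks the other elbow solution for the same target.
    """
    cos_elbow = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)  # law of cosines
    if cos_elbow < -1.0 or cos_elbow > 1.0:
        return None  # target lies outside the arm's reachable ring
    elbow = math.acos(cos_elbow)
    if mirror:
        elbow = -elbow
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow),
                                             l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def forward(shoulder, elbow, l1, l2):
    """Forward kinematics: where the tip ends up for the given joint angles."""
    return (l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow),
            l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow))


angles = two_link_ik(0.5, 1.2, 1.0, 0.8)
print([math.degrees(a) for a in angles])
```

A real InMoov arm has more joints than this, and the controller position would first have to be scaled from the operator's reach into the robot's workspace; the forward-kinematics helper is a handy check that the computed angles really put the hand where it was asked to go.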

That's right.. a robot with an intelligent heads-up display, streamed in near real time to a remote computer with an Oculus Rift, that you can drive around and whose movements you control....

My fingers are getting tired from typing.. Now that I'm starting to write down some details about all of this functionality, I feel like I'm leaving out so much stuff!

More to come!

Wow Kevin, really looking forward to seeing how this progresses. I will follow your work, because I want to set up something similar, not with an Oculus but with simpler Raspberry Pi cameras, and I am going to work on native stereoscopic vision with Raspberry Pis and the needed hardware.

But I would love to see whether your hardware setup could be adapted to Raspberry Pis with Raspicam V2s.

Nice history, nice goal. I look forward to your work, Wizard of Oz!

Wow what a post!

Using InMoov for telepresence is definitely really cool. Currently one of my InMoovs is in an exhibit in the Netherlands (for two and a half months), and I can control it from France whenever I want. Currently I use TeamViewer, which is an easy platform for Windows.

What is nice is that you can do telepresence using OpenCV, listen to what people say to the robot, and define in Python whatever script you want to launch. You can also make the robot say whatever you want by writing "say" before the sentence in the chatbot tab. The more I use it for telepresence, the more functions I discover.

Some time ago, Alessandro made it possible to use a self-made Oculus Rift to control InMoov via MRL, and I would sure like to be able to play with that soon.

So any development you do on telepresence options will be super great!

Keep us updated!!

Now with better AI

Thanks Gael! This was a big weekend for the AI stuff in MRL. Lloyd is evolving to be both a telepresent and an artificially intelligent robot at the same time. These two applications don't need to be at odds with each other.

The latest advance (on the lloyd branch) is that we can save training images into the robot's memory, which is powered by the Solr service. We can then create and train a "visual cortex" for the robot based on those memories. This uses the DL4J service to apply "transfer learning" to an existing VGG16 model.

This means we reconfigure and retrain the existing VGG16 model to classify new things. This turns out to be much, much more efficient than trying to train a network like that from scratch. The result is that a new model can be trained in about 10 minutes (possibly faster with GPU access).
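The core trick of transfer learning is that the pretrained network stays frozen and only a new final layer is trained on its outputs. The real pipeline here is Java and DL4J; this standalone Python sketch only illustrates the idea, training a softmax layer by gradient descent on made-up three-element feature vectors (VGG16's own fully connected layers produce 4096 values per image).

```python
import math


def softmax(scores):
    """Turn raw class scores into probabilities (shifted by the max for stability)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scores_for(weights, bias, x):
    """Linear layer: one score per class for feature vector x."""
    return [sum(w * xi for w, xi in zip(wk, x)) + bk for wk, bk in zip(weights, bias)]


def train_head(features, labels, num_classes, lr=0.5, epochs=200):
    """Train a new softmax output layer on features from a frozen network.

    features: equal-length lists of floats (the frozen network's outputs)
    labels:   class indices in range(num_classes)
    """
    dim, n = len(features[0]), len(features)
    weights = [[0.0] * dim for _ in range(num_classes)]
    bias = [0.0] * num_classes
    for _ in range(epochs):
        grad_w = [[0.0] * dim for _ in range(num_classes)]
        grad_b = [0.0] * num_classes
        for x, y in zip(features, labels):
            probs = softmax(scores_for(weights, bias, x))
            for k in range(num_classes):
                # Gradient of the cross-entropy loss w.r.t. the score of class k.
                err = probs[k] - (1.0 if k == y else 0.0)
                grad_b[k] += err
                for j in range(dim):
                    grad_w[k][j] += err * x[j]
        # One batch gradient-descent step, averaged over the training set.
        for k in range(num_classes):
            bias[k] -= lr * grad_b[k] / n
            for j in range(dim):
                weights[k][j] -= lr * grad_w[k][j] / n
    return weights, bias


def predict(weights, bias, x):
    """Index of the highest-scoring class."""
    scores = scores_for(weights, bias, x)
    return max(range(len(scores)), key=scores.__getitem__)


# Made-up "frozen features" for three new objects the robot is being taught.
features = [[1.0, 0.1, 0.0], [0.9, 0.0, 0.1],
            [0.0, 1.0, 0.1], [0.1, 0.9, 0.0],
            [0.0, 0.1, 1.0], [0.1, 0.0, 0.9]]
labels = [0, 0, 1, 1, 2, 2]
weights, bias = train_head(features, labels, num_classes=3)
print(predict(weights, bias, [0.8, 0.1, 0.1]))
```

Because the frozen layers already turn pixels into useful features, only the weights of the new layer have to be learned, which is why retraining is so much faster than training the whole network from scratch.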

So, with Lloyd now, we can have a conversation like the following

I feel that this interactive approach to teaching robots will be pretty successful. We are now able to teach our robots like we teach our children.

On the telepresence side, this is just one more piece of information that can be available on the heads-up display in the Oculus Rift.

more to come!