High Road - Kinect mounted high, pointed down (glview)

Low Road - Kinect mounted low, pointed straight (glview)

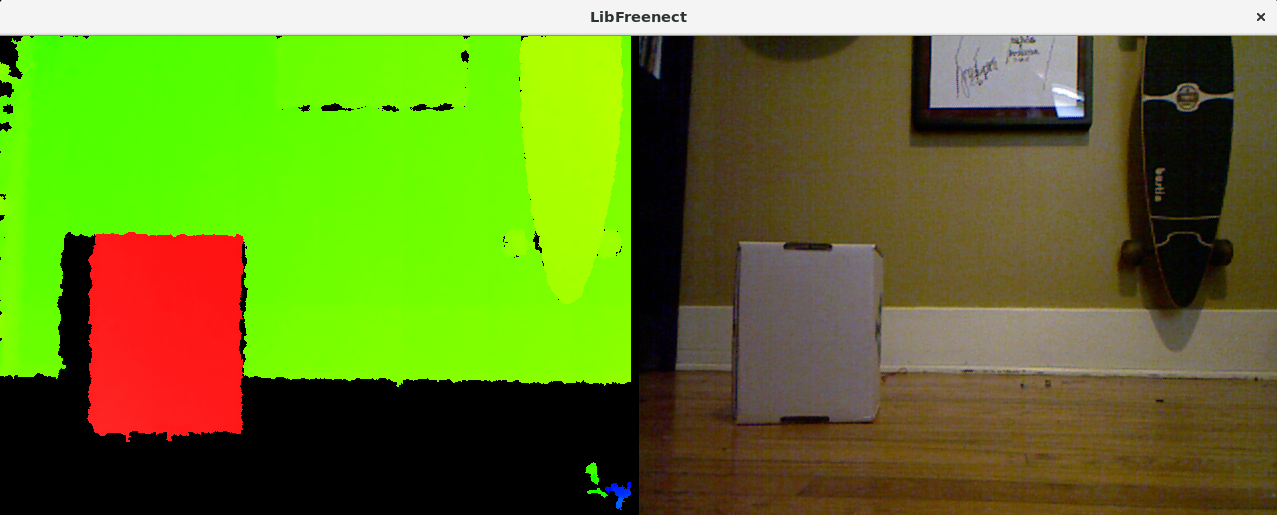

Mounted low and box pushed closer, since it blended with the wall. GLView is nice in that it has "black" where there is "unknown" depth, while mrl dutifully paints it as "green" depth. I'll update this soon. GLView is also nice in that you can change the angle of the kinect using keys. A big challenge would be to do the same thing using JavaCV's FrameGrabber ? .. heh, that would be a funny update for JavaCV


Ahoy ! - there be my tush, desk, stool - but most importantly you can see a lovely smooth color gradient starting from the bottom of the frame and gradually going up. Now, I'll be working on the depth navigation filter to determine if "something" interferes with the smooth rainbow. If it does, then "smooth green fields of floor" do not exist going forward, and it becomes an obstacle to avoid.

Oh, and in this frame you can clearly see the band of 12 pixels on the right edge. This is an artifact (blind spot) of how the kinect's structured light sensor implementation works.


FIXED !! WAHOOO ! - now we can see "all" the data - the gradation as it cycles through the color spectrum. I finally decoded what needed to be done to display it. One part was not to forget the size of the pixel values, and the last one was to swap bytes in the depth (msb/lsb were previously in the wrong place).
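A minimal sketch of the byte swap described above. It assumes the raw buffer holds two bytes per pixel with the least significant byte first; the class and method names (`DepthBytes`, `toDepth`, `decode`) are hypothetical, and if the real frame uses the opposite byte order the two arguments simply trade places.

```java
final class DepthBytes {

    /** Combines two raw bytes into one unsigned 16 bit depth value. */
    static int toDepth(byte lsb, byte msb) {
        // Mask with 0xFF so Java's signed bytes do not sign-extend.
        return ((msb & 0xFF) << 8) | (lsb & 0xFF);
    }

    /** Decodes a raw buffer (assumed LSB first, 2 bytes per pixel) into depth values. */
    static int[] decode(byte[] raw) {
        int[] depth = new int[raw.length / 2];
        for (int i = 0; i < depth.length; i++) {
            depth[i] = toDepth(raw[2 * i], raw[2 * i + 1]);
        }
        return depth;
    }

    public static void main(String[] args) {
        byte[] raw = {(byte) 0x34, (byte) 0x12, (byte) 0xFF, (byte) 0x07};
        int[] depth = decode(raw);
        System.out.println(depth[0] + " " + depth[1]); // prints "4660 2047"
    }
}
```

Forgetting the `& 0xFF` mask is an easy mistake here, since any byte above 0x7F is negative in Java and would corrupt the combined value.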


Update - no missing depth data .. Yay ! - The problem of the recycling gradient is a Java display issue. A BufferedImage is created with the "correct" information telling it that it's a TYPE_USHORT_GRAY (unsigned short, single channel, 16 bit depth) image, but it's displayed as an 8 bit grayscale.

My idea is to convert it to an HSV value where H (hue) is an index which can represent depth. I'm pretty sure this is what some of the other common viewers are doing (such as the freenect-glview). Above you can see my first attempt at it, which iterates through all the bytes of the IplImage.
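One way the depth-to-hue idea could look in plain Java, as a sketch rather than the actual filter. `java.awt.Color.HSBtoRGB` is the standard JDK call; everything else is an assumption for illustration: the class name `DepthToHue`, a maximum raw depth of 2047, treating out-of-range values as "unknown" (painted black, like GLView does), and using only 80% of the hue circle so the nearest and farthest depths do not both come out red.

```java
import java.awt.Color;
import java.awt.image.BufferedImage;

final class DepthToHue {

    // Assumed upper bound of the raw depth values; adjust to the real data.
    static final int MAX_DEPTH = 2047;

    /** Maps one depth value to a packed 0xRRGGBB color. */
    static int depthToRgb(int depth) {
        if (depth <= 0 || depth > MAX_DEPTH) {
            return 0x000000; // black for "unknown" depth (assumed convention)
        }
        // Hue runs from 0.0 (red, near) to 0.8 (violet, far).
        float hue = (depth / (float) MAX_DEPTH) * 0.8f;
        return Color.HSBtoRGB(hue, 1.0f, 1.0f) & 0xFFFFFF;
    }

    /** Renders a row-major depth array as a 3 channel, 8 bit per channel image. */
    static BufferedImage render(int[] depth, int width, int height) {
        BufferedImage img = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                img.setRGB(x, y, depthToRgb(depth[y * width + x]));
            }
        }
        return img;
    }
}
```

Because the hue is computed from the full depth value instead of its low byte, the color changes only once across the whole range instead of cycling.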

You may be thinking "why not just fix the display vs iterate through all the pixel values and go through the work of translating it"? The reason primarily is, I'm interested in Work-E navigating around, and I'll need the depth values of the image anyway. An extremely simple way to avoid "stuff in the way" is to check values at the bottom and if there is something which is above the ground plane ... avoid it.
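The "check values at the bottom" idea could be sketched roughly like this. It is only one possible reading of it, under loud assumptions: a per-row floor depth (`floorDepthPerRow`) has been recorded beforehand from a frame of empty floor, anything noticeably closer than that is taken to stick up above the ground plane, and readings of 0 or less mean "unknown". All names and parameters here are hypothetical.

```java
final class FloorCheck {

    /**
     * Returns true if any pixel in the bottom `rows` rows is closer to the
     * sensor than the calibrated floor depth for its row by more than `tolerance`.
     */
    static boolean obstacleAhead(int[] depth, int width, int height,
                                 int[] floorDepthPerRow, int rows, int tolerance) {
        for (int y = height - rows; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int d = depth[y * width + x];
                if (d <= 0) {
                    continue; // skip unknown readings (assumed convention)
                }
                if (floorDepthPerRow[y] - d > tolerance) {
                    return true; // something sits between the sensor and the floor
                }
            }
        }
        return false;
    }
}
```

A call such as `FloorCheck.obstacleAhead(frame, 640, 480, floor, 60, 20)` would scan the bottom 60 rows of a 640x480 frame; the frame size, row count and tolerance are placeholder values that would need tuning on real data.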

Current problem is making the gray 1 channel 16 bit depth correctly translate into a rgb hsv 3 channel 8 bit image :P ... Don't have it yet .. :)


Here's a smooth floor looking from Work-E's eye - it should be a smooth gradient, but the gradient cycles at least 3 times. I remember looking at this a long time ago .. I think perhaps the data frame of the kinect framegrabber in javacv/opencv might be missing data ?

I'll look into this soon because I want a smooth change of depth for Work-E to understand what is close and what is far away ... hopefully it's just an artifact of the display. Maybe if we have a gradient of colors too we can get "full depth"


It's not just the floor that looks wrong; the chair(?) in the top center is also wrong, like it's too close for the depth range, but the wheels are fine?

My gut tells me that it does not use the right data for the depth map; maybe it uses one of the pixel colors to compute your depth image.

I did not notice that kind of artifact using OpenNI when I played with it for IntegratedMovement


Hi Calamity,

Great to see & hear from you ;)

Hope you are doing well.

I found the root of the problem.

The kinect depth is 1 channel with 16 bit unsigned array of values.

Java's BufferedImage (the base of display) cannot "display" it correctly. Instead it displays it as a single channel 8 bit array of values. That is why you see the horizontal gradients: it's because it's only displaying the least significant 8 bits :(
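A small illustration of why keeping only the least significant 8 bits makes the gradient cycle, next to a proportional scaling that does not. This is a sketch with hypothetical names (`LowByteWrap`, `lowByte`, `scaled`) and an assumed maximum depth passed in by the caller; it is not a description of what BufferedImage does internally.

```java
final class LowByteWrap {

    /** What you get if only the least significant 8 bits are shown. */
    static int lowByte(int depth16) {
        return depth16 & 0xFF;
    }

    /** Proportional scaling of the full range down to 0..255 (maxDepth is an assumption). */
    static int scaled(int depth16, int maxDepth) {
        return depth16 * 255 / maxDepth;
    }

    public static void main(String[] args) {
        int[] samples = {0, 255, 256, 511, 512};
        for (int d : samples) {
            // lowByte restarts at 0 every 256 steps; scaled keeps increasing.
            System.out.println(d + " -> low byte " + lowByte(d) + ", scaled " + scaled(d, 2047));
        }
    }
}
```

With the low byte, depths 255 and 256 land at opposite ends of the gray scale even though they are neighbors, which is what shows up as repeated bands on a smooth floor.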

Now I have to "convert" it to something which displays appropriately. BufferedImage always "wants" to be RGB 3 channel 8 bits per channel.

So I'll do some HSV conversion from the 16 bit values to appropriate 24 bit RGB .. at least that is my current plan :)


Hi Grog

I'm doing fine. A lot of things have happened and I don't have as much time to walk this playground as before. But I'm still looking at what's happening here from time to time

Glad to see that you are making progress, the visualisation of the depth map looks great.

I'm curious to see how you are planning to use the kinect data to navigate. I tried many approaches in IntegratedMovement before settling on a way that is probably imperfect, but that gives me fairly good results for the need I had.

Keep up your good work, it's always awesome

I like the high Road

Hello Grog,

Looking at the two images at the top, I would suggest the high road approach is the better option.

Not only do you get the object in the view, but should there be a void, such as those present with stairs, you will see that as well, and that is definitely something to be avoided by a wheeled bot.