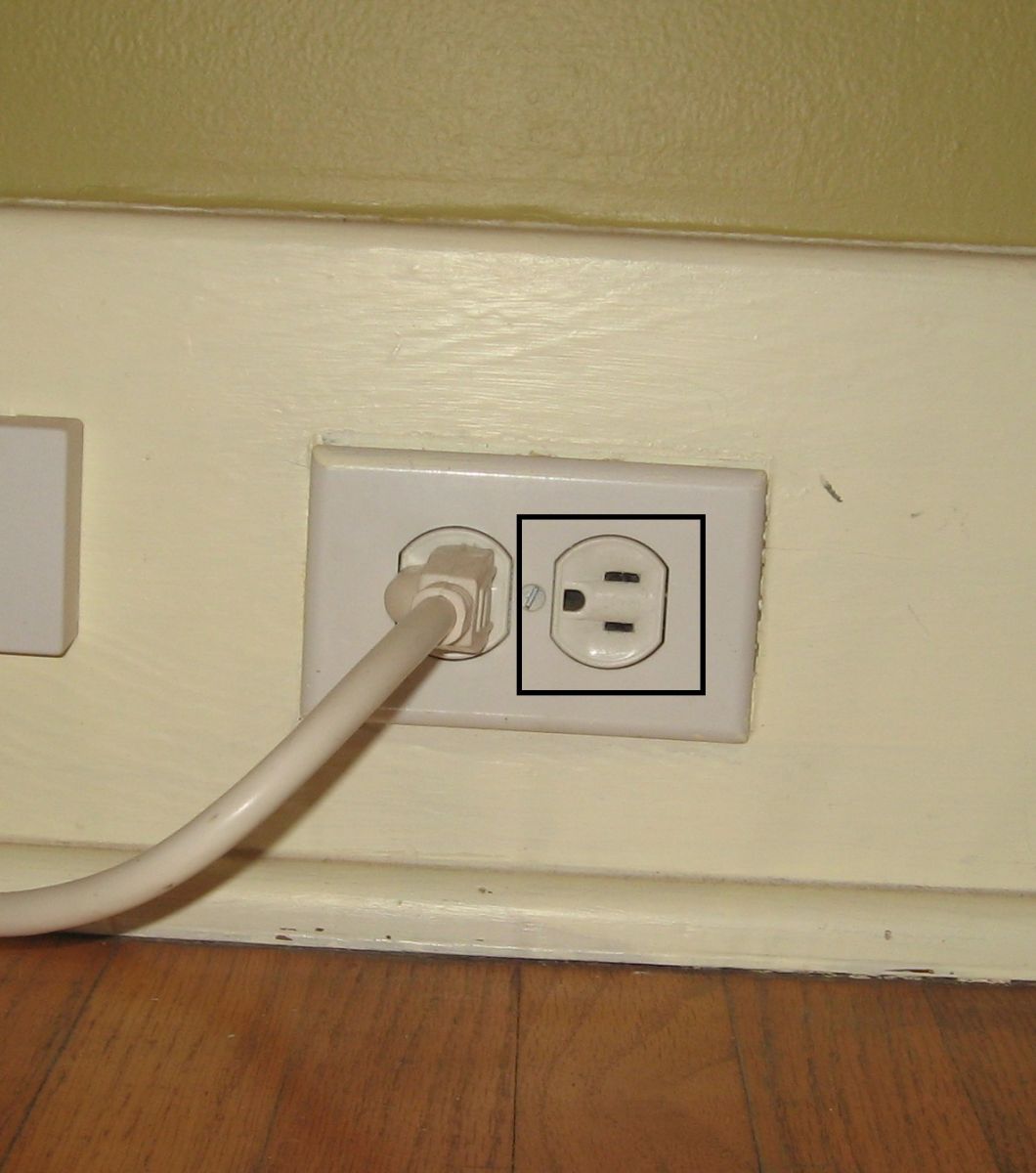

I have been thinking for a long time about how to harness the power of the internet into programming robots. I can imagine a robot here which I give some form of task. Let's say (and this would be relevant) finding a wall socket and plugging itself in. The task of successfully identifying a wall socket, moving the robot to a correct location, and inserting some sort of plug would seem monumental. There is a metric shit tonne (thanks CTC!) of environmental problems or things which could go wrong. Rather trying to think of all the possibilities, we would do better to "grow" the program out of the environment. Let's start with the first step "Identify a Wall Socket". What sensors can we use to find a wall socket? It might be possible to use a gaussmeter or hall effect meter. A map of the room might help too. But the way I am initially going to try to locate the wall socket is the same way people do, by using vision.

Haar Training starts by collecting a large number of "positive" and "negative" images. Positive images are images in which the object exists. Negative images are simply images which the object does not exist. During part of the training the objects in the positive images need to be manually selected by a bounding box. This is one of the most labor intensive parts of training. When I read this, I instantly thought of a fantastic project which involved Michael Jackson's white glove. What if the concept was used to create a library of Haar Classifiers? A site dedicated to train our new robot overlords !

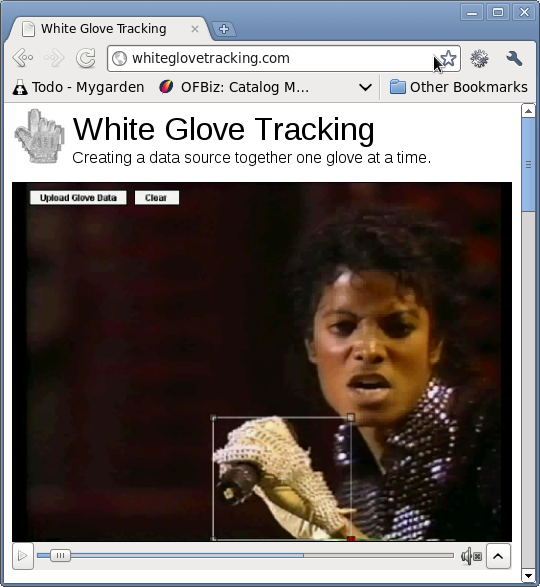

The White Glove Tracking Project

In addition I have thought what if a robot was able to distinguish an object from its surroundings. What if it had the capability to query in an attempt to identify that object?

For example Imagine the following scenario:

- Robot is driving around inside of house

- It finds "something" with blob detection or some other segmentation algorithm

- It sends a message to my phone with a picture attached to it "what is this?"

- I reply a text statement "lamp"

- It categorizes that object to be used as a Future Haar Vision Classifier. If robots could do this, the "localization" might actually improve its future identification of things in your house.

For anyone who has had a child this is what happens for a couple of years. The toddler runs around and asks anyone near by "This?" and points to some person, place or thing. Now imagine that same concept used to train a robot, but distributed over the internet so there is not just one or two parents, but thousands or hundreds of thousands. How quick / accurate would the training be? How useful would the data and libraries be? I don't know... but it would be interesting to try this experiment, No?

Genetic Programming - a Slight Deviation

- First, define a set of all possibilities.

- Define a rule which determines "how well" a solution did

- Run the system - kill off all the losers

- If the solution can be segmented, mix and match pieces of the solution (mating) to make a bigger badder-ass solution

- Add a little randomness (mutation) - mutation is necessary for successful evolution and the X-men !

- Repeat until satisfied

So, you may think. Damn! that's a huge amount of effort just to get my servo to move from A to B. Yeah, it would be - but a one-dimensional Servo positioning problem is not "hard". The thing I'm thinking about is "walking". Human locomotion involves at least 8 joints, hundreds of muscles, inner ear, the sense of touch, and the "where is my foot?" sense. I forgot what this is called, but the name is buried in a RadioLab episode.

Imagine sitting down and trying to figure out all of those commands - Like "I am going to move my knee 3 degrees now", then "I am going to flex my butt slightly more" ... blah blah blah.

Nope, no one does that (although there apparently is a disease you can get where you have to do this)...

What happens, is when your a little shmoo, you start kicking and crawling, and grabbing at stuff.... then you balance yourself ... then BAM ... you fall on the coffee table. FAIL!

Try again... shaky legs, wobble, take first step BAM FAIL!

Well maybe we do a form of genetic learning taking the best of partially successful attempts and glom them together in our brain to be skilled walkers.

Most robot walkers really suck ! They have giant feet and small legs. You could tear off a leg and turn the power off and they would still be standing (try that on a person). So, this is an attempt to make a better walker... it will still probably suck, but I won't know until I try it.

My design will have very small feet (maybe just rubber tipped dowels) and 4 - 6 Servos (cause that is how many I have), and accelerometer and a couple of touch sensors. Here is my first rough sketch.

References

- Introduction to Genetic Algorithms by Marek Obitko

- Cool Applet by Marek - Tree encoding / Genetic Programming

- Wonderful example of an online interactive evolution game

- Brilliant Clock Simulation by ck007 on YouTube

- Riks Genetic Algorithm experiment

- Riks experiment goes online

- Genetic Algorithms

- Genetic Programming

I think there is an "Arty" component of Genetic Programming. The hard part is not turning the crank as with simple or defined algorithms. The hard part is defining the problem and encapsulating the success into something measurable.

I would be interested in assimilating the code into MyRobotLab framework. So I went looking around I found several implementations of Genetic Programming in Java.

This is what I've seen so far.

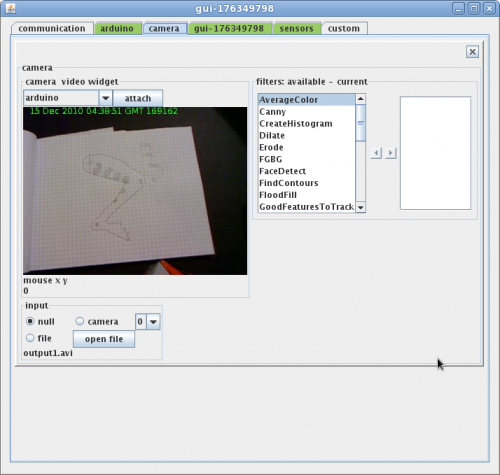

The experiments will run in stages. The first stage is to set some super simple experiments up with MyRobotLab, since I just got UDP video streaming to work. The video should be helpful in future for distributed Haar Training as mentioned previously.

More to come....

2010.12.14

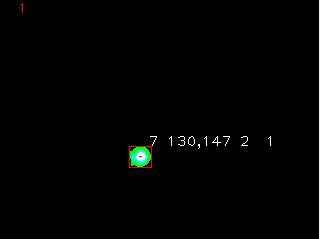

http://frogleg.myrobotlab.org/ - starting to set up the experiment. I'd be curious if anyone gets to the video. If you do please let me know with a screenshot & lag time (I put a clock on the video). The filters are functional if you want to mess around. Don't know if I left out anything which blows stuff up... we'll see. Link to the Applet is at the bottom. Right now it is using UDP exclusively, this was an idea in order to make the video work for longer than 10 seconds. But I may need to go back and send only video on UDP and all other control messages on TCP.

For the record - this is what it looks like on my box.

You should see the clock update, and you should be able to change the OpenCV filters.

Lot's of shoulds.

2010.12.14.Later....

Something is goofy ... this was working (the client/server part) on different machines but now its not so ... don't waste time... I'll try to figure it out.

2010.12.18

As I mentioned before the challenge might not be the "programming" or framework of the system but defining the problem:

So here is my first stab at that definition.

Define the Problem

Find the Function that will send 2 Servos the correct data sequence to move the Frogs Foot in a predefined smooth "Walking Path"

The error will be calculated from a predetermined path which was manually created. The comparison if done visually will be done frame by frame. Within each frame the control path will be compared to the actual path and the proximity between will be considered the "fitness".

There needs to be 2 output variables - So I guess that would be expressed as TWO functions, one for each servo

- JGAP - binary example works!, when I downloaded it noticed there are quite a few dependencies missing. JFreeChart and other third party dependencies. The minimum change example does work. Have begun stepping through some of the code in the eclipse debugger.

- N-Genes - began looking at this. Unfortunately in the tutorial, the pathing for the tutorial was not correct. I started correcting files and the command line syntax until I got frustrated enough to give up with this package.

- GROOVY - Java genetic programming looks like there is no active development - the dated entry I saw was from 2000. The "Home Page" doesn't even exist anymore. Found files here

- GenPro - looks a little more active - having a little trouble getting the examples to work again. Saw a celsius to farenhiet formula generator - looked interesting as an example where the outcome is to derive a formula !

- Genetic Tree Programming Visualizer - here is a package apparently not for Genetic Programming itself but for visualizing the data - interesting

- Java World Article - Interesting

-

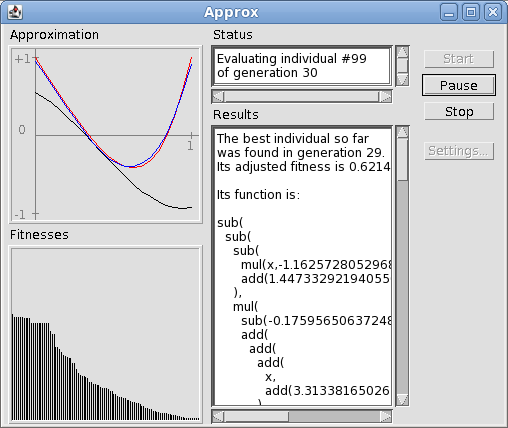

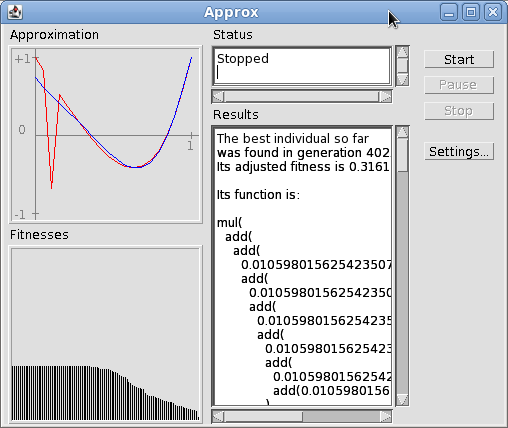

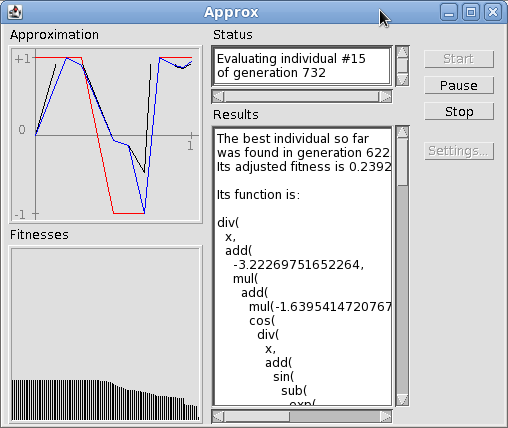

I have found Hansueli Gerber's great and simple demonstration of GP in the form of a Cool Applet - creating a function to follow a curve - wow, this seems very close to what I would like to implement. Woot ! Source is here

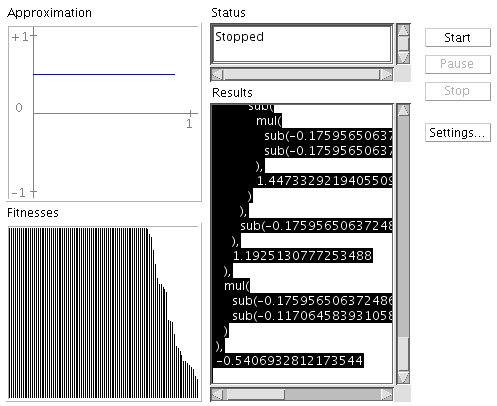

http://alphard.ethz.ch/gerber/approx/technical.htm Very well done "working" example of genetic programming - with an ouput of a function. The code is old - cleaned up a few small errors for Java 1.5/1.6 - Much of the Applet functions are deprecated. The code was neatly constructed with good separation between the GUI and the GP.

The program does very well in generating a function to match the current (default) curve.

I through in a large sharp spike to see what would happen. After 500 iterations it failed to match the spike. I'm not really surprised as I believe it was constructed with the idea of following more smooth curves. And the goal which I am interested in is a smooth curve. The reason I did a spike is that was the easiest data I could tweak artificially.

If anyone knows how to create a 10 point data set and make some funky (sinusoidal?) waves I would appreciate the input. Hmm... wonder what a circle would produce.

Or Theo's data set - Hey Rik do you have plot values of your legs we could test as input values of the fitness curve !?!?

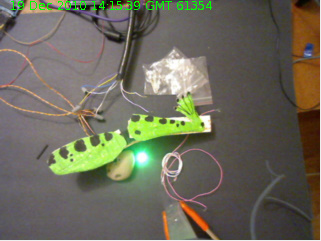

2010.12.19 - Easy Filter - Frog Hunting at Night

I found the appropriate "green" LED and applied the following filters. Have not mounted LED to FrogLeg yet. As Gareth had mentioned I could possibly be doing this with a wii remote, but I have not purchased the PC bluetooth dongle. Getting a robust tracking was pretty easy with the lights off.

- PyramidDown - to scale the image from 640x480 to 320x240 - its easier to work with and the frame-rate is more smooth

- Threshold - this will filter out reflections and make a nice round target

- FindContours - find the contours (should be only 1) and get center - print out the coordinates

Now, I just need to save the coordinate positions off, scale them < 1, and put them in the applet...

Captured Froggy - is really a test of the motion capture. I used my hand to generate the motion to test the basic process. Also to test the export format of the data being captured was correct and in the range of data expected by the GP Applet.

Solving For Froggy - the red line represents the path of the LED with my hand. It came out relatively well. There was a bit of loop and other garbage at the end which I trimmed off. So it did not take long (131 iterations) for the GP Applet to solve for a nice curve - certainly nicer than my jiggly hand movements (too much coffee!)

What the graph and the formula represent is a simple X Y plot.

At the moment this would equate to a linear actuator Y and time X. As time proceeds a simple formula could be used to move the linear actuator up and down to follow the curve.

The Arduino'ish code snippet might begin to look like this:

int X; int Y;

for (X = 0; X < paceMax; ++X)

{

Y = "the generated formula" (X)

analogWrite(legPin, Y);

delay(delayAmount);

}

Next Steps:

So I think the next steps would be to actually mount the LED to froggy's foot. There is no linear actuator but 2 servos (Polar coordinates hopefully avoided by the whole GP thing). Another task would be to incorporate the GP into MyRobotLab... always more steps for froggy foot.... hop hop hop...

Trying to assimilate Frog GP into MyRobotLab... you will be assimilated... Riddipp... Riddipp...

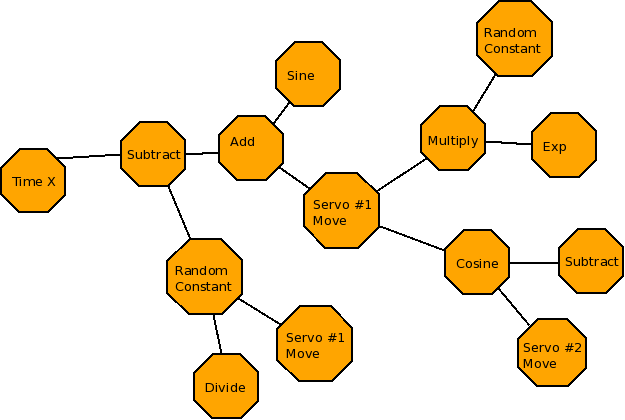

5 Legged Horse

Here is an example of GP finding a solution but not necessarily the optimal one. A 137 node formula was used to derive the graph Y = 0.5

The Simplest Case

Here the input data was a single point (0.5, 0.5).

The Genetic Program of fitness 1.0 (perfect) was created in the first generation.

As you can see the program evaluated to Y = (X - X) + X, which is Y = X .... Yay !

2010.12.20 - GP Code Assimilated

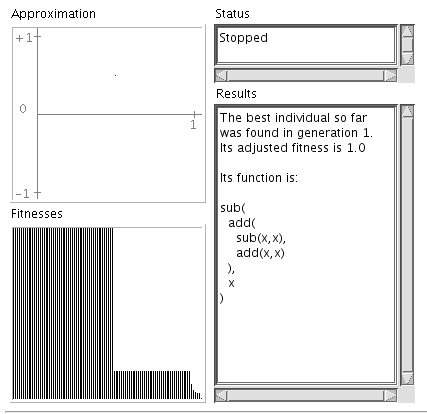

Some of this stuff is now starting to congeal. First in order to generate a program you need a pool of possible nodes.

This will be the pool of my proposed nodes for the first simple tests. It is what Gerber's Applet had with the addition of (Move Servo #1 (value) & Move Servo #2 (value)).

The other work which will need to occur is to get the feedback of the LED position to interact with the Fitness Function / evaluation.

Pool Of Possible Nodes - to create a Genetic Program

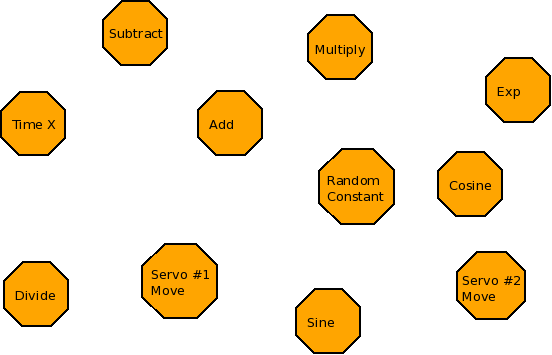

Possible Solution

This possible solution would get evaluated with the feedback for the fitness function coming from the LED position

2010.12.26 - Feedback enabled

It doesn't look like the camera capture is following the leg in the video, but that has to do with frames dropping in the screen capture program.

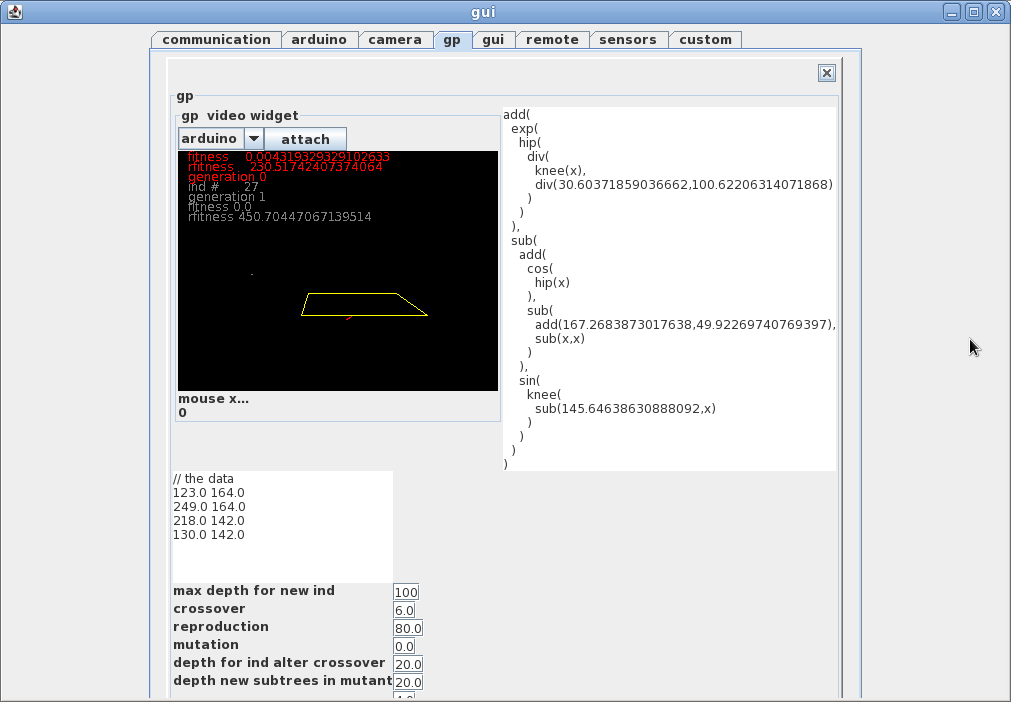

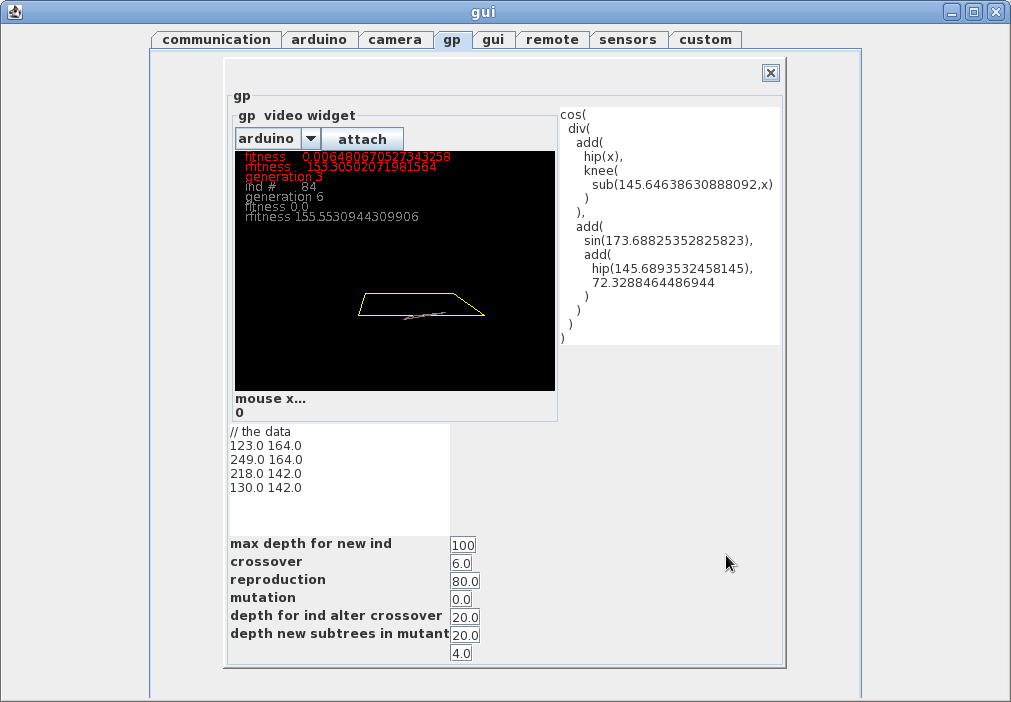

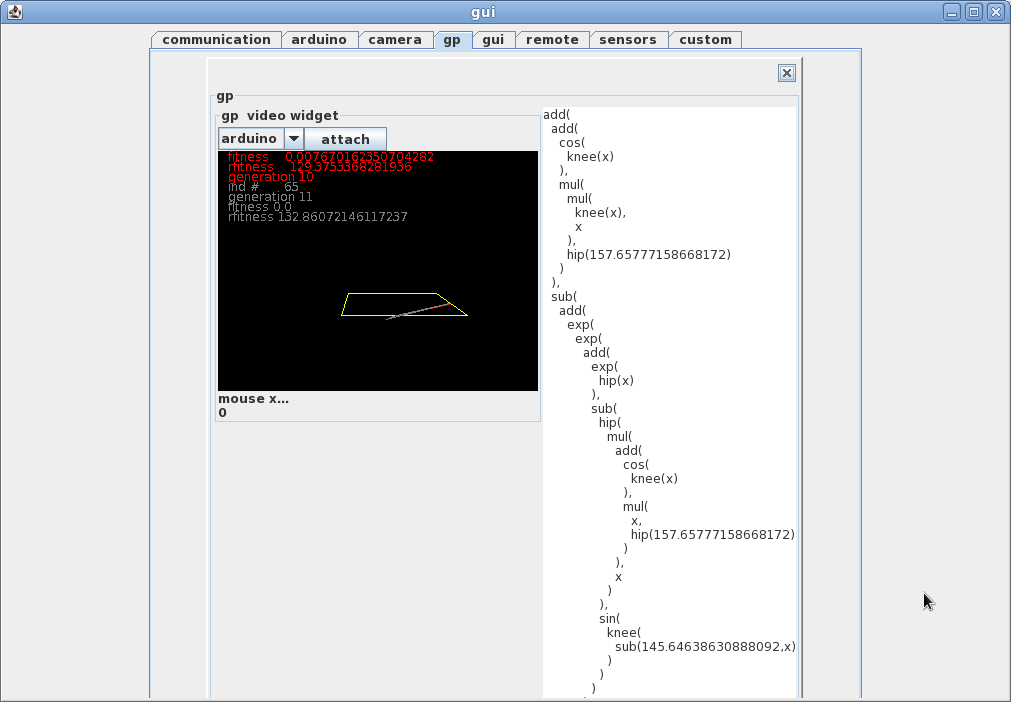

Here is the result after 43 generations. It follows the grandfather program (from generation 36) pretty closely. I can see mutants appear occasionally. I think they "look better" than grandfather#36. There is some "adjusting" on fitness values which now may not be appropriate. In addition the programs trees have grown to about 150 nodes, with ~120 calls to the servo in evaluating 1 program. The calls are not being processed because the ~120 calls to the servo make no relevant changes. They basically overrun the servo. I was thinking in modifying the program tree building functions so that there could be only 1 call to each servo (knee & hip). The actual values can be modified 120+ times, but it will only be 1 call to each servo.

Telefox and Rik mentioned the amount of "useless" material in DNA, but I think there is plenty diversity for the values of the servos versus the actual call to the servos. For example moveKnee( (x + 10) - 10) would still be possible or moveHip ((x + 0) - 1) + 1) , moveHip(x / x * x ) ... etc So, there is plenty of room to create garbage.

2010.12.27 - A few goofy things

I came to the realization that the fitness factor is evaluating the points in exact order. But if the generated program created a movement which "matched" exactly but was rotated. For example, if the generated program created a movement where step 1 was at point 2, and step 2 was at point 3, step 4 was at point 1 - it would throw the solution out, even though as a cyclical movement it is perfect. Since there are 4 possible rotations, does this mean I'm throwing out 3/4 of the possible solutions?

Somethins Screwy

Something is just plain wrong in the fitness evaluation - I believe I have goofed something up - I hand calculated some of the "Best of Breed" Programs and can see ones being rejected that are better values. Humbug, time to debug.

I thought I was doing this

- move servos

- wait until image is stabilized by constantly comparing video feed

- when image is stabilized continue processing

I think its still a good routine - the part I miss calculated was the fact that even though the program commands the servos to move the delay between when they are actually moving and when the servos get the command is enough time to snap 2 stable pictures.

I will add a delay before checking the video stream, to at least get the servos "moving"

2010.12.28 - Found goofy thing behind keyboard

There was a nagging feeling I had previously regarding setting the servos to an initial state. It turned out the nagging feeling was correct. I stepped through the code, when a "Best" was selected which had a single knee move. That would mean the program was completely dependent on some previous individual setting the hip ! Ooops!

2010.12.29 - Summary

Genetic Programming is very appealing because of its organic design.

Unfortunately, I needed to slow the video capture / genetic processing loop down to 1 second per iteration. This was necessary to capture accurate positions of the LED. There are 4 points of evaluation in each Individual fitness test. It is also necessary to set an initial state, for each individual. This comes to 5 seconds per each evaluation. So if we would like to do 999 generations at 100 individuals each with 5 seconds per evaluation, the time involved would be nearly 6 days ! I don't have the patience. Although it was fun to walk away from the computer, and have it busily "crunching" possible solutions. But, the servo noise was quite annoying.

It is important with genetic programming the evaluation of each individual program happens very quickly, otherwise the time needed to create something useful becomes way too long.

Genetic programs working in simulations can work considerably faster, but at a possible cost of not modeling the real environment satisfactorily. Also, creating or interfacing with a simulator may not be worth the effort in some situations. Telefox, shared some links on a project which has done genetic programming/simulator integration. The Starfish robot uses a simulator to run through possible walking modes - here is an example.

The following images are the results of letting the program and feedback system process for 1/2 a day. Not spectacular, but not bad either. I now would like to try something with accelerometer feedback. The feedback system of the accelerometer should be considerably faster than the video system. Balancing should be challenging. The amount of time balanced should be part of the fitness program. I've been kicking around a few ideas but nothing concrete, and now i want to spend some time on WiiDar (wii LIDAR) since Santa gave me a blue-tooth dongle.

I would welcome feedback, or anyone wanting to experiment with a similar setup.

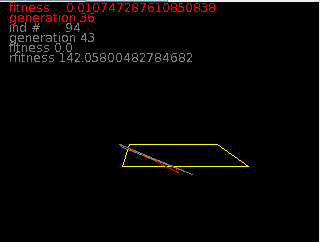

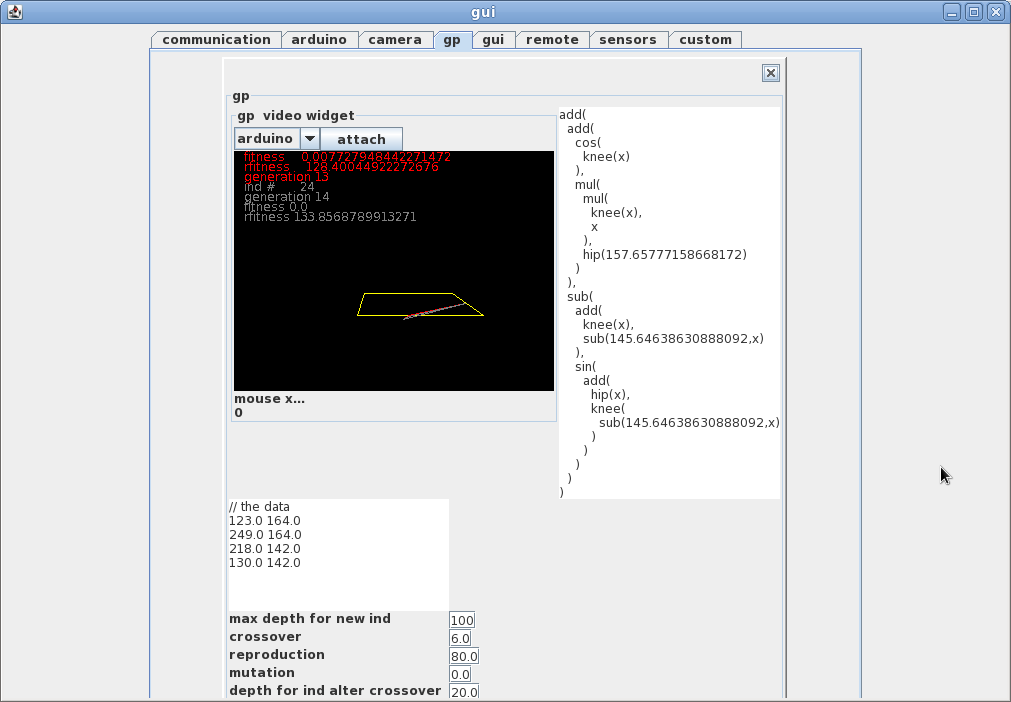

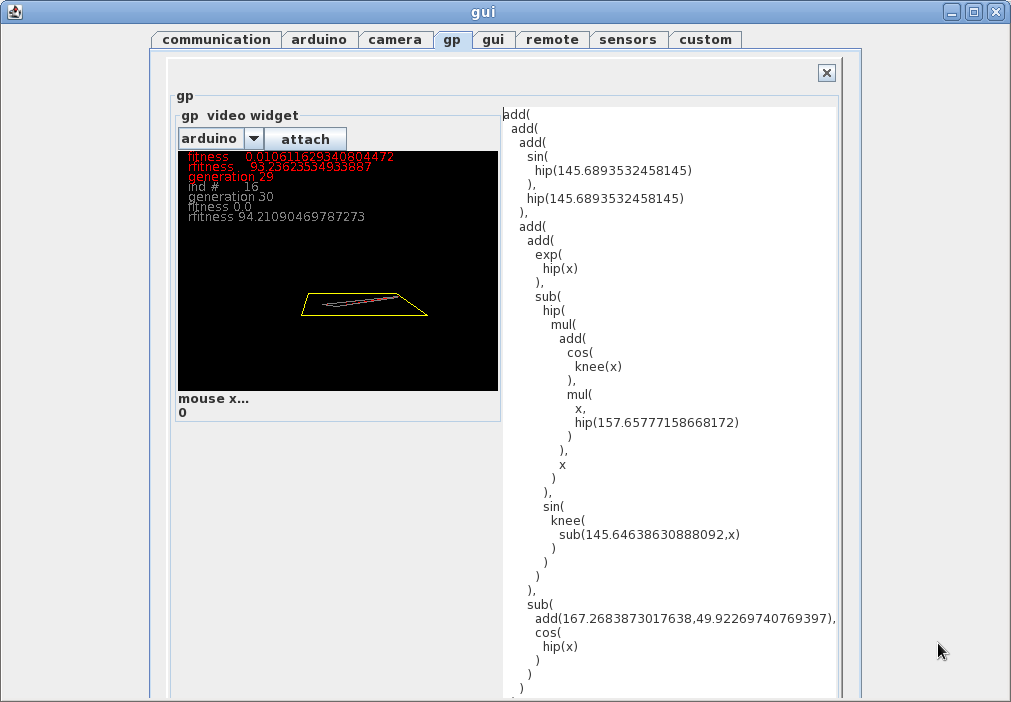

Series of screenshots from 30 generations of the GP trying to find a program

who's optimal solution was the yellow trapezoid. The red letters & red path represent the

current best. The grey text and grey path represent the current individual program

being evaluated.