.png)

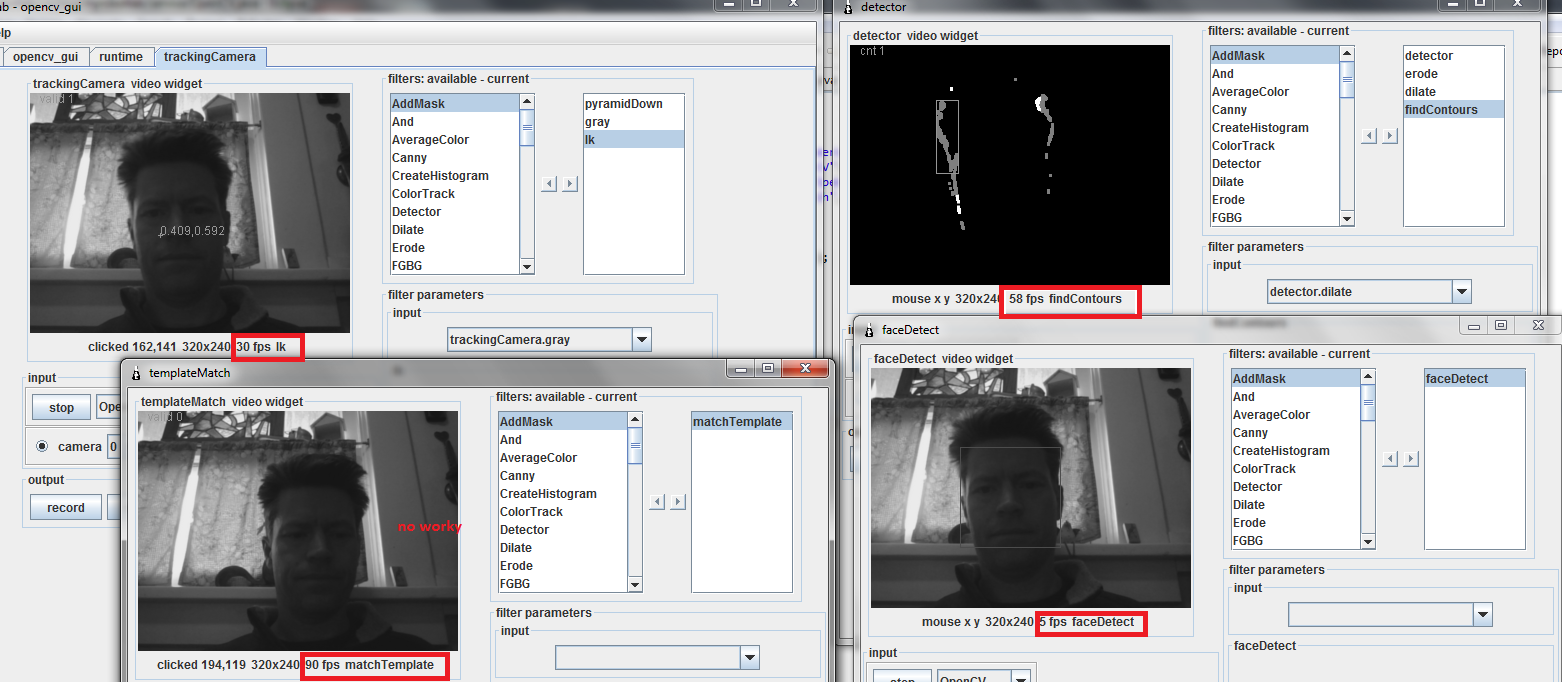

Here is a first attempt to do what the diagram shows.

Things of interest:

- face detect is very, very slow - so it's great to hang it off of the trackingCamera stack - as it will never re-process the same frame

- the detect stack varies greatly - filterContours within it takes an amount of time proportional to the number of contours it needs to outline - this filter has a lot of potential for tweaking - some variables include the minimum or maximum number of pixels to count as a blob. Also, Alessandruino and I were talking about finding the average location of all blobs found, which would represent the "center" of activity

- templateMatch is WAY too quick - its fps is 90 - while the camera feed is only 30 fps - this means it is processing each frame about three times, so roughly 60 of those 90 passes per second are repeats :P

- template matching is very quick, but I'm interested in saving a large number of templates in memory for more robust matching - this should possibly slow it down to a reasonable rate

- I noticed when I stopped the capture, I could not start it again - this needs to be fixed

- simple non-blocking references to video sources were an easy implementation, but perhaps not the best... I'm still considering blocking pipelines, so that only "new" frames are processed

- overall it worked ok - lots of potential - but there needs to be a centralized system (Cortex) which tweaks, starts, stops, and changes these stacks depending on need

- I posted the code on the web page (vs checking it in) because I consider it only a "test", and it likely will not work as the system evolves

- there needs to be a new filter which takes a bounding box or contours and creates "cut-out" templates to be used later

- there is a bug in displaying pipeline details back in the gui when the pipeline is set up in code

- match template needs to accept an ArrayList or Map of images to match; its result should be an integer match value assigned to each image

- capture and stopCapture need to work as expected
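
The blob-size cutoff and "center of activity" ideas from the list above could be sketched roughly like this. It is plain Java with no OpenCV dependency: the class and method names (`BlobCenter`, `filterBySize`, `centerOfActivity`) are hypothetical, blobs are stood in for by `java.awt.Rectangle` bounding boxes, and bounding-box area is used as an approximation of a blob's pixel count. None of this is the actual filter code.

```java
import java.awt.Rectangle;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of the posted code: illustrates the idea only.
class BlobCenter {

    /** Keep only blobs whose bounding-box area lies in [minPixels, maxPixels]. */
    static List<Rectangle> filterBySize(List<Rectangle> blobs, int minPixels, int maxPixels) {
        List<Rectangle> kept = new ArrayList<>();
        for (Rectangle b : blobs) {
            int area = b.width * b.height; // assumption: box area approximates pixel count
            if (area >= minPixels && area <= maxPixels) {
                kept.add(b);
            }
        }
        return kept;
    }

    /** Average of the blob centers as {x, y}; returns null when there are no blobs. */
    static double[] centerOfActivity(List<Rectangle> blobs) {
        if (blobs.isEmpty()) {
            return null;
        }
        double sumX = 0;
        double sumY = 0;
        for (Rectangle b : blobs) {
            sumX += b.getCenterX();
            sumY += b.getCenterY();
        }
        return new double[] { sumX / blobs.size(), sumY / blobs.size() };
    }
}
```

Filtering first and averaging second keeps tiny noise blobs from dragging the "center" around. Weighting each center by blob area would be another option, but that is a design choice this sketch does not make.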

LoggingFactory.getInstance().configure();
LoggingFactory.getInstance().setLevel(Level.WARN);

OpenCV trackingCamera = (OpenCV) Runtime.createAndStart("trackingCamera", "OpenCV");
OpenCV detector = (OpenCV) Runtime.createAndStart("detector", "OpenCV");
OpenCV faceDetect = (OpenCV) Runtime.createAndStart("faceDetect", "OpenCV");
OpenCV templateMatch = (OpenCV) Runtime.createAndStart("templateMatch", "OpenCV");

// filters begin -----------------
trackingCamera.addFilter(new OpenCVFilterPyramidDown("pyramidDown"));
trackingCamera.addFilter(new OpenCVFilterGray("gray"));
trackingCamera.addFilter(new OpenCVFilterLKOpticalTrack("lk"));
detector.addFilter(new OpenCVFilterDetector("detector"));
detector.addFilter(new OpenCVFilterErode("erode"));
detector.addFilter(new OpenCVFilterDilate("dilate"));
detector.addFilter(new OpenCVFilterFindContours("findContours"));
faceDetect.addFilter(new OpenCVFilterFaceDetect("faceDetect"));
templateMatch.addFilter(new OpenCVFilterMatchTemplate("matchTemplate"));
// filters end -----------------

GUIService gui = new GUIService("opencv_gui");
gui.startService();
gui.display();

// setup pipelines
faceDetect.setInpurtSource("pipeline");
faceDetect.setPipeline("trackingCamera.gray");
detector.setInpurtSource("pipeline");
detector.setPipeline("trackingCamera.gray");
templateMatch.setInpurtSource("pipeline");
templateMatch.setPipeline("faceDetect.faceDetect");

trackingCamera.capture();
faceDetect.capture();
detector.capture();
templateMatch.capture();

trackingCamera.broadcastState();
faceDetect.broadcastState();
detector.broadcastState();
templateMatch.broadcastState();
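
On the repeated-frame problem (templateMatch running at 90 fps against a 30 fps feed): one lighter-weight alternative to a fully blocking pipeline would be a small gate that lets a consumer skip frames it has already seen. The sketch below is only an illustration. `NewFrameGate` and `shouldProcess` are hypothetical names, and it assumes each frame carries a monotonically increasing index, which the code above does not show.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical helper, not part of the posted code.
class NewFrameGate {
    // Index of the newest frame already handed to the consumer; -1 means none yet.
    private final AtomicLong lastSeen = new AtomicLong(-1);

    /**
     * Returns true only when frameIndex is newer than every frame accepted so far.
     * Safe to call from several threads: the compare-and-set loop lets exactly
     * one caller win for a given frame index.
     */
    boolean shouldProcess(long frameIndex) {
        long prev = lastSeen.get();
        while (frameIndex > prev) {
            if (lastSeen.compareAndSet(prev, frameIndex)) {
                return true;
            }
            prev = lastSeen.get(); // another thread advanced the index; re-check
        }
        return false;
    }
}
```

A consumer stack would call `shouldProcess` with the source frame's index before running its filters and simply return when it gets `false`. The stack still polls, so this does not give the CPU back the way a blocking queue would, but it stops the same frame from being matched several times.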