Lucas and Kanade developed an algorithm that tracks objects through a video stream. It requires fewer resources and usually responds faster than many other forms of tracking.

Pros:

- low resource usage

- quick response time

- can track many points at once

Cons:

- requires, or at least prefers, high-contrast corners rather than plain backgrounds

- initial tracking points must be set manually, or by some other algorithm

- will not "re-acquire" a corner if the corner moves out of the field of view
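To see why corners matter, here is a minimal sketch of a single Lucas-Kanade step on one small grayscale window, in plain Python. It is the textbook least-squares formulation, not the pyramidal implementation inside OpenCV, and the name `lk_flow` and the toy patches are made up for illustration. A flat patch or a straight edge gives a singular 2 x 2 system, so no motion can be recovered; a corner-like patch gives a solvable one.

```python
def lk_flow(prev, curr, eps=1e-6):
    """Estimate one (dx, dy) shift for a small window, or None if untrackable.

    prev and curr are lists of rows of grayscale values (same size).
    """
    h, w = len(prev), len(prev[0])
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0  # horizontal gradient
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0  # vertical gradient
            it = float(curr[y][x] - prev[y][x])           # change between frames
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    # Solve [sxx sxy; sxy syy] * [dx; dy] = [-sxt; -syt]
    det = sxx * syy - sxy * sxy
    if abs(det) < eps:
        return None  # flat area or straight edge: motion is ambiguous
    dx = (-syy * sxt + sxy * syt) / det
    dy = (sxy * sxt - sxx * syt) / det
    return dx, dy


size = 7
flat = [[50.0] * size for _ in range(size)]                       # plain background
edge = [[10.0 * x for x in range(size)] for _ in range(size)]     # gradient in x only
corner = [[float(x * y) for x in range(size)] for y in range(size)]
# the same corner-like patch, shifted by (0.2, 0.1) pixels
moved = [[(x - 0.2) * (y - 0.1) for x in range(size)] for y in range(size)]

flat_result = lk_flow(flat, flat)      # None: nothing to lock on to
edge_result = lk_flow(edge, edge)      # None: only one gradient direction
corner_result = lk_flow(corner, moved) # close to the true shift of (0.2, 0.1)
```

The estimate for the corner patch is approximate because the method linearizes the image around each pixel, which is also why it works best for small movements between frames.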

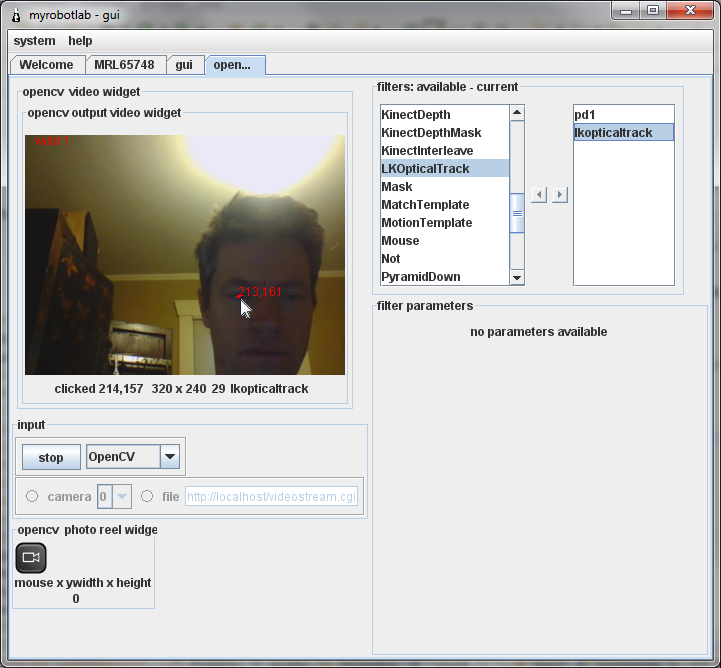

The OpenCV service incorporates this algorithm as the LKOpticalTrack filter. Start MRL and load an OpenCV service. I usually add a PyramidDown filter first, so the display is more responsive. After PyramidDown, load LKOpticalTrack and name it something creative like lkopticaltrack :)

Highlight the filter, then pick something you want to track in the video and click on it with the mouse. A red dot should appear and follow whatever you selected until it moves out of the field of view.

This point is published as a message so other services can consume it, like the Jython service, which in turn could control a pan / tilt kit.
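As a rough idea of what the consuming side might do, here is a small sketch that turns a tracked point into pan / tilt nudges that steer the camera back toward the target. Everything here is an assumption for illustration: the function name `pan_tilt_step`, the 320 x 240 frame size, the deadband and the step size. It does not use any MRL API, and the sign of each step depends on how your servos are mounted.

```python
def pan_tilt_step(x, y, width=320, height=240, deadband=10, max_step=5):
    """Return (pan_delta, tilt_delta) in degrees for a tracked point (x, y).

    Hypothetical helper: a proportional nudge toward the frame center,
    with a deadband so the servos do not jitter when the point is centered.
    """
    def axis_step(value, size):
        half = size / 2.0
        error = value - half              # pixels away from the center
        if abs(error) <= deadband:
            return 0                      # close enough, do not move
        return int(round(max_step * error / half))

    return axis_step(x, width), axis_step(y, height)


centered = pan_tilt_step(160, 120)    # (0, 0): point is already in the middle
right_edge = pan_tilt_step(320, 120)  # (5, 0): full step on the pan axis only
top_left = pan_tilt_step(0, 0)        # (-5, -5): full step on both axes
```

A callback that receives the published point could add these deltas to the current servo positions. Keeping the steps small avoids overshooting, since the tracker only follows the point while it stays in view.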

Programmatic Control

# lkOpticalTrack.py

# to experiment with Lucas Kanade optical flow/tracking

# starts opencv and adds the filter and one tracking point

from jarray import zeros, array

import time

opencv = runtime.createAndStart("opencv","OpenCV")

# scale the view down - faster since updating the screen is

# relatively slow

opencv.addFilter("pyramidDown1","PyramidDown")

# add our LKOpticalTrack filter

opencv.addFilter("lkOpticalTrack1","LKOpticalTrack")

# begin capturing

opencv.capture()

# set focus on the lkOpticalTrack filter

opencv.setDisplayFilter("lkOpticalTrack1")

# rest a second or 2 for video to stabilize

time.sleep(2)

# set a point in the middle of a 320 X 240 view screen

opencv.invokeFilterMethod("lkOpticalTrack1","samplePoint", 160, 120)

#opencv.stopCapture()
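The script above hard-codes 160, 120 as the middle of a 320 x 240 view. If your camera or filter chain produces a different size, a small helper can work out the center instead. This is only a sketch: `view_center` is a made-up name, and it assumes each PyramidDown filter halves both dimensions (rounding up) and that the tracking point is given in the coordinates of the scaled-down frame.

```python
def view_center(width, height, pyramid_levels=0):
    """Return the (x, y) center of a frame after some pyramid-down steps.

    Hypothetical helper; assumes each step halves width and height,
    rounding up for odd sizes.
    """
    for _ in range(pyramid_levels):
        width = (width + 1) // 2
        height = (height + 1) // 2
    return width // 2, height // 2


same_as_script = view_center(320, 240)     # (160, 120), as used above
from_640x480 = view_center(640, 480, 1)    # (160, 120) after one PyramidDown
```

The result could then be passed as the two coordinates in the samplePoint call instead of the fixed numbers.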