Overheated! (It's hot today, and that doesn't help!)

Started again: Remote Oscope in progress, simultaneously logging to ThingSpeak


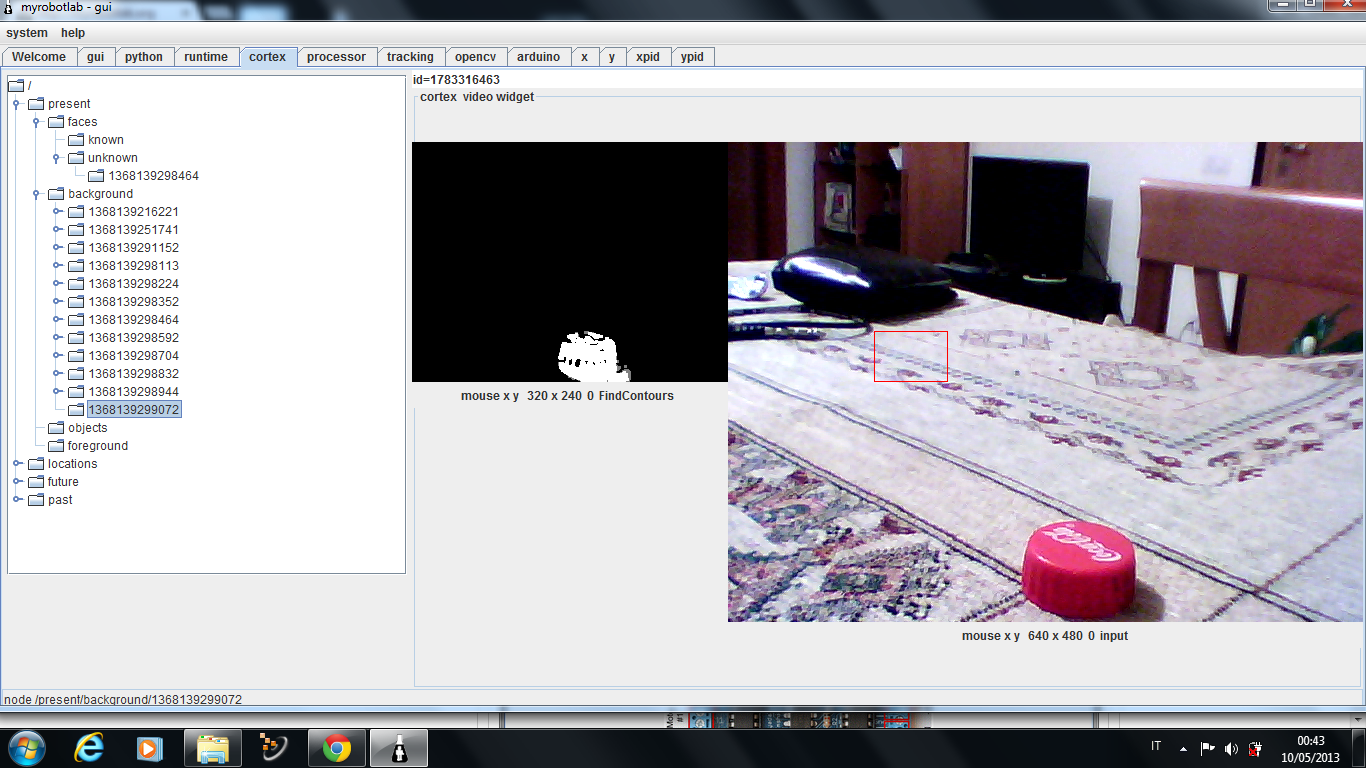

Here is my first Cortex experience. OpenCV was searching for a foreground object, then I introduced a Coke cap. Once the image had been stable for 3 seconds, the picture was sent to Cortex.

The picture is wrongly saved in Background instead of foreground-object, but this is a bug that GroG mentions on his Cortex service page, and it will be fixed soon.

You can also see the red square bug.
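The "stable for 3 seconds" trigger can be sketched as a small timer. This is only an illustration of the idea, not how the OpenCV or Cortex services implement it: the class name `StabilityTimer`, its `update` method and the `is_stable` flag are all hypothetical, and the per-frame stability decision is assumed to come from somewhere else.

```python
class StabilityTimer(object):
    """Hypothetical helper: reports True once the scene has stayed stable long enough."""

    def __init__(self, hold_seconds=3.0):
        self.hold_seconds = hold_seconds
        self.stable_since = None  # time at which the current stable run started

    def update(self, is_stable, now):
        """Feed one frame's stability flag and a timestamp in seconds."""
        if not is_stable:
            # any movement resets the run
            self.stable_since = None
            return False
        if self.stable_since is None:
            self.stable_since = now
        return (now - self.stable_since) >= self.hold_seconds


timer = StabilityTimer(hold_seconds=3.0)
for t, stable in [(0.0, True), (1.5, True), (3.0, True)]:
    if timer.update(stable, t):
        print("stable long enough at t=%.1f, picture would be sent" % t)
```

Passing the timestamp in explicitly keeps the sketch easy to test without a camera or a real clock.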

Kind of nifty it could decode the avi movie though :)

    from java.lang import String
    from org.myrobotlab.service import Sphinx
    from org.myrobotlab.service import Runtime

    # create ear and mouth
    ear = runtime.start("ear","Sphinx")
    mouth = runtime.start("mouth","MarySpeech")

    # start listening for the words we are interested in
    ear.addComfirmations("yes","correct","ya")
    ear.addNegations("no","wrong","nope","nah")
    #ear.startListening("hello world|happy monkey|go forward|stop|yes|correct|ya|no|wrong|nope|nah")
    ear.startListening("hello world|happy monkey|go forward|stop")

    # route the phrase "hello world" to the python method helloWorld below
    ear.addCommand("hello world", "python", "helloWorld")

    # set up a message route from the ear --to--> python method "heard"
    # ear.addListener("recognized", python.name, "heard");

    # this method is invoked when something is
    # recognized by the ear - in this case we
    # have the mouth "talk back" the word it recognized
    #def heard(phrase):
    #    mouth.speak("you said " + phrase)
    #    print "heard ", phrase

    # prevent infinite loop - this will suppress the
    # recognition when speaking - default behavior
    # when attaching an ear to a mouth :)
    ear.attach(mouth)

    # the name must match the one given to ear.addCommand above
    def helloWorld(phrase):
        print "This is hello world in python."
        print phrase

    !!org.myrobotlab.service.config.ServiceConfig
    listeners: null
    peers: null
    type: Sphinx

Sphinx is a speech recognition service (Speech To Text)

A WebServer service - allows control through AJAX and web services

The WebServer service serves the content of the MRL folder at: http://localhost:19191/

localhost is 127.0.0.1 and the default port is 19191.
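As a quick check that something is answering on that address, here is a minimal sketch using only the Python standard library. The helper names `webserver_url` and `is_listening` are made up for this example and are not part of MRL; the defaults simply repeat the host and port quoted above.

```python
import socket


def webserver_url(host="localhost", port=19191):
    """Build the URL the WebServer service is expected to answer on."""
    return "http://%s:%d/" % (host, port)


def is_listening(host="127.0.0.1", port=19191, timeout=1.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        conn = socket.create_connection((host, port), timeout)
    except socket.error:
        # refused, timed out or unreachable
        return False
    conn.close()
    return True


print(webserver_url())
print(is_listening())
```

A successful connection only shows that some process is listening on the port; it does not prove that process is the MRL WebServer.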


    from time import sleep

    audiocapture = runtime.start("audiocapture","AudioCapture")

    # start capturing audio
    audiocapture.captureAudio()
    # record for 5 seconds
    sleep(5)
    # stop recording audio
    audiocapture.stopAudioCapture()
    # play the recorded audio
    audiocapture.playAudio()
    sleep(5)

    # set the recording audio format
    # sample rate: 8000, 11025, 16000, 22050 or 44100
    sampleRate = 16000
    # 8 bit or 16 bit
    sampleSizeInBits = 16
    # 1 or 2 channels
    channels = 1
    # samples are signed or unsigned
    bitSigned = True
    # bigEndian or littleEndian
    bigEndian = False
    # apply the audio format
    audiocapture.setAudioFormat(sampleRate, sampleSizeInBits, channels, bitSigned, bigEndian)

    # start capturing audio
    audiocapture.captureAudio()
    # record for 5 seconds
    sleep(5)
    # stop recording audio
    audiocapture.stopAudioCapture()
    # play the recorded audio
    audiocapture.playAudio()
    # save the last capture
    audiocapture.saveAudioFile("mycapture.wav")

    !!org.myrobotlab.service.config.ServiceConfig
    listeners: null
    peers: null
    type: AudioCapture

A simple service to record using a microphone. The recording line can be configured with a variety of settings.
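To get a feel for what the format settings mean, the amount of raw audio data in a recording can be worked out from the sample rate, sample size and channel count. This is a small sketch of that arithmetic, assuming uncompressed PCM; `pcm_byte_count` is a hypothetical helper, not an AudioCapture method, and the figure excludes any file header.

```python
def pcm_byte_count(sample_rate, sample_size_bits, channels, seconds):
    """Raw PCM payload size in bytes for a recording of the given length."""
    bytes_per_frame = (sample_size_bits // 8) * channels
    return sample_rate * bytes_per_frame * seconds


# 16000 Hz, 16 bit, mono, 5 seconds (the values used in the script above)
print(pcm_byte_count(16000, 16, 1, 5))   # 160000

# 44100 Hz, 16 bit, stereo, 5 seconds
print(pcm_byte_count(44100, 16, 2, 5))   # 882000
```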

    #########################################
    # Speech.py
    # description: used as a general template
    # categories: general
    # more info @: http://myrobotlab.org/service/Speech
    #########################################

    # start all speech services ( to test them )
    # local :
    marySpeech = runtime.start("marySpeech", "MarySpeech")
    localSpeech = runtime.start("localSpeech", "LocalSpeech")
    mimicSpeech = runtime.start("mimicSpeech", "MimicSpeech")

    # api needed
    polly = runtime.start("polly", "Polly")
    voiceRss = runtime.start("voiceRss", "VoiceRss")
    indianTts = runtime.start("indianTts", "IndianTts")

The Speech service is a speech synthesis service. It takes strings of characters and attempts to turn them into audible speech. There are two parts to the Speech service: a front end and a back end. The front end simply accepts a string, while the back end can be switched between FreeTTS and Google.

FreeTTS is an open source speech synthesis system.
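The front end / back end split can be pictured with a small sketch. It is not the Speech service's actual code: the class `SpeechFrontEnd` and the two back-end functions are hypothetical stand-ins that return a tagged string instead of producing audio, just to show how the back end can be swapped while the front end keeps accepting plain strings.

```python
def freetts_backend(text):
    # stand-in for a FreeTTS-based synthesizer (no real audio here)
    return "[FreeTTS] " + text


def google_backend(text):
    # stand-in for a Google-based synthesizer (no real audio here)
    return "[Google] " + text


class SpeechFrontEnd(object):
    """Hypothetical front end: accepts a string and hands it to the current back end."""

    def __init__(self, backend):
        self.backend = backend

    def set_backend(self, backend):
        self.backend = backend

    def speak(self, text):
        return self.backend(text)


mouth = SpeechFrontEnd(freetts_backend)
print(mouth.speak("hello world"))

mouth.set_backend(google_backend)
print(mouth.speak("hello world"))
```

The calling code only ever uses `speak`, so changing the synthesizer does not require touching it.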


Template matching with video feed going over Video Streamer service.

My gamepad is LOCKED!

Trying to keep up with Alessandruino :P

Can I use the OpenCV library to create a face login project in Java with a webcam? Could you give me an example program?

What can I use to get a 5 volt output from a 12 volt input?