Update 2013.05.

Here is the effect of fluorescent lighting. I suspect the waves are caused by the constructive/destructive interference of the 60 Hz cycles.
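To put a rough number on that suspicion, here is a minimal sketch assuming a magnetic-ballast fluorescent tube on 60 Hz mains, whose light output peaks twice per mains cycle (120 Hz). When a camera samples that flicker at its frame rate, the flicker folds down to a slow apparent ("beat") frequency, which could show up as brightness that slowly pulses or drifts. The frame rates below are hypothetical examples, not values measured on the $6 camera.

```python
def aliased_frequency(signal_hz: float, frame_rate_hz: float) -> float:
    """Apparent frequency of a periodic signal sampled at frame_rate_hz.

    The result is folded into the range [0, frame_rate_hz / 2] (Nyquist).
    """
    remainder = signal_hz % frame_rate_hz
    return min(remainder, frame_rate_hz - remainder)


MAINS_HZ = 60.0
FLICKER_HZ = 2 * MAINS_HZ  # assumption: light output peaks twice per mains cycle

for fps in (30.0, 25.0, 29.0):  # hypothetical camera frame rates
    print(f"{fps:5.1f} fps -> apparent flicker {aliased_frequency(FLICKER_HZ, fps):.1f} Hz")
```

At exactly 30 fps the flicker aliases to 0 Hz (no visible pulsing), while a camera that really delivers 25 or 29 fps would see a 5 Hz or 4 Hz beat. A cheap camera whose frame rate wanders would make the waves wander too.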

I need an easy-to-work-with test system in order to further develop the Cortex service. Right now, I've found the super cheap $6 USB camera helpful. I need a camera to point at still scenes, so the one in the laptop is not very easy to work with (I would have to move out of the way :P). Some things I noticed about $6 cameras: they have horrible focus, the driver quits occasionally, and the frame rate is not all that good, but the worst thing is that their auto-balance is very bad. When the light changes, cameras try to auto-balance. Cameras which do this well change the brightness of a pixel in a consistent way, but some cameras don't do this well. You can see some of the issues in this video.

Alessandruino pointed out a great site, http://en.akinator.com, an expert system based on the 20 questions game. We were theorizing about how an expert system could be created with visual data as input (assisted by human intervention).
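At its core, a 20-questions expert system is a tree of yes/no questions that grows whenever it guesses wrong. Below is a minimal sketch of that classic "guess the animal" learning loop, assuming a human supplies the answers and the new distinguishing question, as suggested above. In a visual version, the questions might be about features such as color or size. The `Node`, `play`, and `learn` names are hypothetical and are not part of any MyRobotLab service.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Node:
    """Either a question (with yes/no children) or a leaf holding a guess."""
    text: str
    yes: Optional["Node"] = None
    no: Optional["Node"] = None

    def is_leaf(self) -> bool:
        return self.yes is None and self.no is None


def play(root: Node, ask: Callable[[str], bool]) -> Node:
    """Walk the tree using ask(question) -> bool and return the leaf (guess) reached."""
    node = root
    while not node.is_leaf():
        node = node.yes if ask(node.text) else node.no
    return node


def learn(leaf: Node, answer: str, question: str, answer_is_yes: bool) -> None:
    """Turn a wrong guess into a question separating it from the right answer.

    answer_is_yes says whether the new question is answered "yes" for `answer`.
    """
    old_guess = Node(leaf.text)
    new_guess = Node(answer)
    leaf.text = question
    leaf.yes, leaf.no = (new_guess, old_guess) if answer_is_yes else (old_guess, new_guess)


# Usage: start knowing only "cup", get corrected once, then guess correctly.
root = Node("cup")
wrong = play(root, ask=lambda q: True)  # no questions yet, so it guesses "cup"
learn(wrong, "ball", "Does it roll?", answer_is_yes=True)
print(play(root, ask=lambda q: q == "Does it roll?").text)  # ball
```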

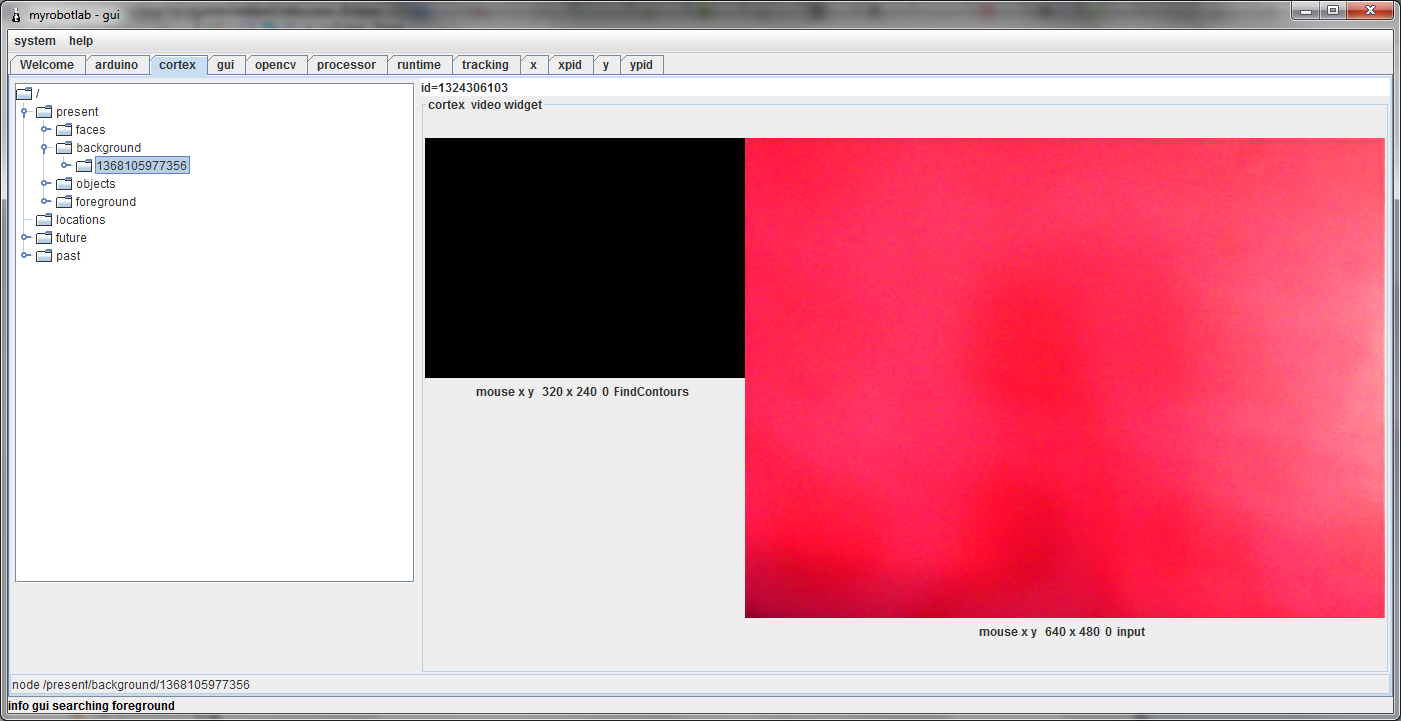

And here is the current state of Cortex with the cheapo $6 camera.

Step 1

Cortex starts


The strategy is to first stabilize the background. It does this by adding a set of filters. The really important one is Background Subtraction, which "learns" the background from a short history of previous frames. The other important one is Find Contours, which searches for groups of pixels (objects). When it has "learned" the background, it switches the Background filter from "learning background" to "searching foreground"; you can see this in the status message at the bottom. Now it's ready for a new object.
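The switch from "learning background" to "searching foreground" can be illustrated with a much simpler model than the real one. The sketch below swaps in a running-average background in place of OpenCV's MOG2 Gaussian-mixture subtractor. It assumes frames are flattened lists of grayscale values, and `learn_frames`, `alpha`, and `threshold` are hypothetical tuning parameters.

```python
from typing import List

Frame = List[float]  # a flattened grayscale frame, one value per pixel


class RunningAverageBackground:
    """Toy background model: learn for `learn_frames` frames, then report foreground.

    A simpler stand-in for a MOG2-style subtractor, used only to show the
    "learning background" -> "searching foreground" switch.
    """

    def __init__(self, learn_frames: int = 10, alpha: float = 0.1, threshold: float = 30.0):
        self.learn_frames = learn_frames  # hypothetical length of the learning history
        self.alpha = alpha                # how quickly new frames blend into the background
        self.threshold = threshold        # per-pixel difference that counts as foreground
        self.background: Frame = []
        self.seen = 0

    @property
    def status(self) -> str:
        return "learning background" if self.seen < self.learn_frames else "searching foreground"

    def apply(self, frame: Frame) -> List[int]:
        """Return a mask (1 = foreground) for this frame and update the model."""
        if not self.background:
            self.background = list(frame)
        learning = self.seen < self.learn_frames
        self.seen += 1
        if learning:
            # Blend the frame into the background; nothing counts as foreground yet.
            self.background = [(1 - self.alpha) * b + self.alpha * p
                               for b, p in zip(self.background, frame)]
            return [0] * len(frame)
        # Background is frozen once learned: any large difference is foreground.
        return [1 if abs(p - b) > self.threshold else 0
                for p, b in zip(frame, self.background)]


model = RunningAverageBackground(learn_frames=3)
for _ in range(3):
    model.apply([10.0, 10.0, 10.0, 10.0])
print(model.status)                              # searching foreground
print(model.apply([10.0, 200.0, 200.0, 10.0]))   # [0, 1, 1, 0]
```

Freezing the background after learning matches the "consider it all background" state, but it will not adapt to slow lighting changes. A real subtractor keeps updating its model while it searches.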

Step 2

When the background stabilized, the Tracking service sent Cortex a message of image data. It said "This is a valid background". It could send other information too, like "This is the coordinate based on the pan/tilt servo values" or "This is the current time": information which will help Cortex categorize the image data properly.

Here we can see the memory of Cortex. Right now it has placed a valid background into its tree of memory. The location it has chosen is /present/background/timestamp. Once the image has been placed there, Cortex can run its own OpenCV service on the images in memory.
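A path-addressed memory tree like this can be sketched with nested dictionaries. The following is a minimal sketch assuming the final path segment is a millisecond timestamp and the stored value is a small dictionary of metadata (source service, pan/tilt). The `Memory` class, its methods, and the metadata keys are hypothetical, not Cortex's actual API.

```python
import time
from typing import Any, Dict, Optional


class Memory:
    """Path-addressed tree, e.g. put("/present/background/<timestamp>", data)."""

    def __init__(self) -> None:
        self.root: Dict[str, Any] = {}

    def put(self, path: str, data: Any) -> None:
        *parents, leaf = [p for p in path.split("/") if p]
        node = self.root
        for name in parents:
            node = node.setdefault(name, {})  # create intermediate branches as needed
        node[leaf] = data

    def get(self, path: str) -> Optional[Any]:
        node: Any = self.root
        for name in (p for p in path.split("/") if p):
            if not isinstance(node, dict) or name not in node:
                return None  # path does not exist
            node = node[name]
        return node


memory = Memory()
ts = int(time.time() * 1000)  # milliseconds since the epoch
# Hypothetical metadata the Tracking service might send along with the image.
memory.put(f"/present/background/{ts}", {"source": "Tracking", "pan": 90, "tilt": 90})
print(memory.get(f"/present/background/{ts}")["source"])  # Tracking
```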

Step 3

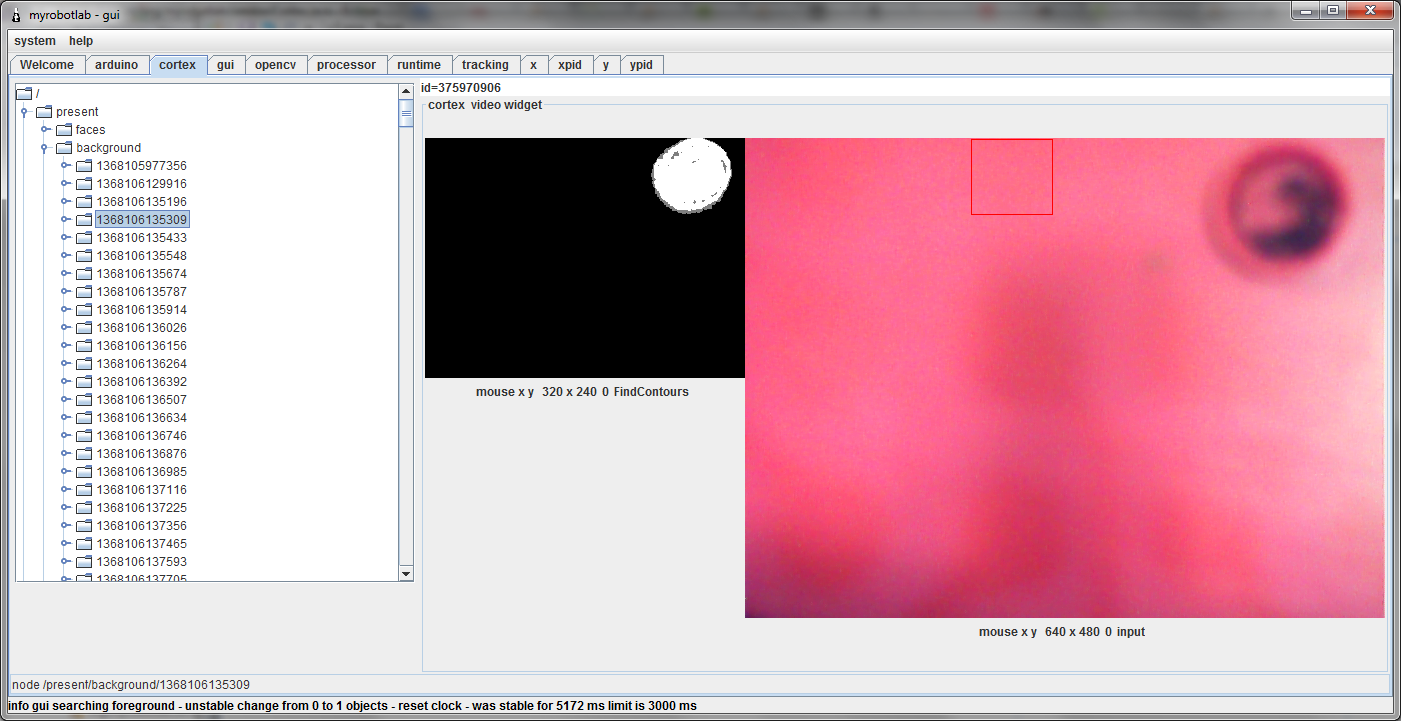

A new object enters the scene and is stabilized by the Tracking service. This means the Tracking service determines whether the scene has a valid object. When you put your hand in the scene or push an object into it, things get pretty crazy: the light of the whole background can change and a bazillion invalid objects are found. But if things are right and you leave a new object in the scene, and it's stable (there is only one new object and it persists over a certain amount of time), it is sent to Cortex.
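The "stable" condition above (exactly one new object that persists for a certain amount of time) could be expressed as a small check over recent frames. The following is a minimal sketch assuming each frame yields a list of `(x, y, w, h)` bounding boxes from Find Contours. `required_frames` and `max_shift` are hypothetical tuning parameters.

```python
from collections import deque
from typing import Deque, List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)


class StabilityDetector:
    """Report an object as stable once it is the only one and stays put."""

    def __init__(self, required_frames: int = 5, max_shift: int = 4) -> None:
        self.required_frames = required_frames  # how long it must persist
        self.max_shift = max_shift              # allowed jitter in pixels
        self.history: Deque[Box] = deque(maxlen=required_frames)

    def update(self, boxes: List[Box]) -> bool:
        if len(boxes) != 1:
            # Zero or many objects (a hand in the scene, lighting jumps): start over.
            self.history.clear()
            return False
        self.history.append(boxes[0])
        if len(self.history) < self.required_frames:
            return False
        first = self.history[0]
        return all(max(abs(a - b) for a, b in zip(box, first)) <= self.max_shift
                   for box in self.history)


detector = StabilityDetector(required_frames=3)
frames = [[(0, 0, 5, 5), (9, 9, 5, 5)], [(40, 30, 20, 20)], [(41, 30, 20, 20)], [(40, 31, 20, 20)]]
print([detector.update(f) for f in frames])  # [False, False, False, True]
```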

Step 4

In this picture you can see it has identified a new object and is sending it to Cortex (actually, it's sending many frames). Cortex is incorrectly categorizing it as background (BUG), but the idea is to categorize it as a new object to identify. There are some other problems too. You can see the square of the bounding box for the object, but it's not the right scale for the image on the right (it's correct for the mask on the left), so more bugs :P Heh, it also doesn't stop sending a bazillion foreground images of the same thing...
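A box that is correct for the mask but wrong for the full-size image is what you would expect if the contours were found on a PyramidDown-reduced image. Each PyramidDown step roughly halves the width and height, so coordinates need scaling back up before they are drawn on the original. The following is a minimal sketch assuming that is the cause. The `scale_box` helper is hypothetical.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)


def scale_box(box: Box, pyramid_levels: int) -> Box:
    """Map a box found on a PyramidDown-reduced image back to full resolution.

    Each PyramidDown level roughly halves width and height, so coordinates are
    multiplied by 2 ** pyramid_levels. For odd image sizes the reduced size is
    rounded up, so the result can be off by a pixel or so.
    """
    factor = 2 ** pyramid_levels
    x, y, w, h = box
    return (x * factor, y * factor, w * factor, h * factor)


print(scale_box((40, 30, 20, 15), pyramid_levels=1))  # (80, 60, 40, 30)
```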

Time to make a bug "hit list"

- Foreground incorrectly identified as Background in memory structure

- Too many foreground images published to Cortex - if it does not move or change dimension significantly Cortex should only need a "few"

- DATATYPE ISSUE! All images should stay in IplImage form. It will become mandatory that Cortex and Tracking remain on the same machine, since this image form is not serializable

- Pipeline name & type saved with Image

- If PyramidDown is in pipeline, display should account for it

- Have Cortex make a "final" processed image and put it in the unknown objects location. The processed image must have a reference to the data it was processed from
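One option for the "too many foreground images" item is to publish a new foreground image only when the object's box moves or changes size by more than some fraction of its previous size. The following is a minimal sketch assuming `(x, y, w, h)` boxes. The `ForegroundPublisher` class and the 10% `min_change` threshold are hypothetical.

```python
from typing import Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)


class ForegroundPublisher:
    """Forward a foreground box only if it changed significantly."""

    def __init__(self, min_change: float = 0.10) -> None:
        self.min_change = min_change  # fraction of the last published box's size
        self.last: Optional[Box] = None

    def should_publish(self, box: Box) -> bool:
        if self.last is None:
            self.last = box
            return True
        lx, ly, lw, lh = self.last
        x, y, w, h = box
        scale = max(lw, lh, 1)
        moved = max(abs(x - lx), abs(y - ly)) > self.min_change * scale
        resized = abs(w - lw) > self.min_change * lw or abs(h - lh) > self.min_change * lh
        if moved or resized:
            self.last = box  # compare future boxes against the last one actually sent
            return True
        return False


publisher = ForegroundPublisher()
boxes = [(40, 30, 20, 20), (41, 30, 20, 20), (40, 31, 21, 20), (70, 30, 20, 20)]
print([publisher.should_publish(b) for b in boxes])  # [True, False, False, True]
```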

Well, that's where it is at the moment... more soon...

Until this has stabilized, it will be very "Blog" like...

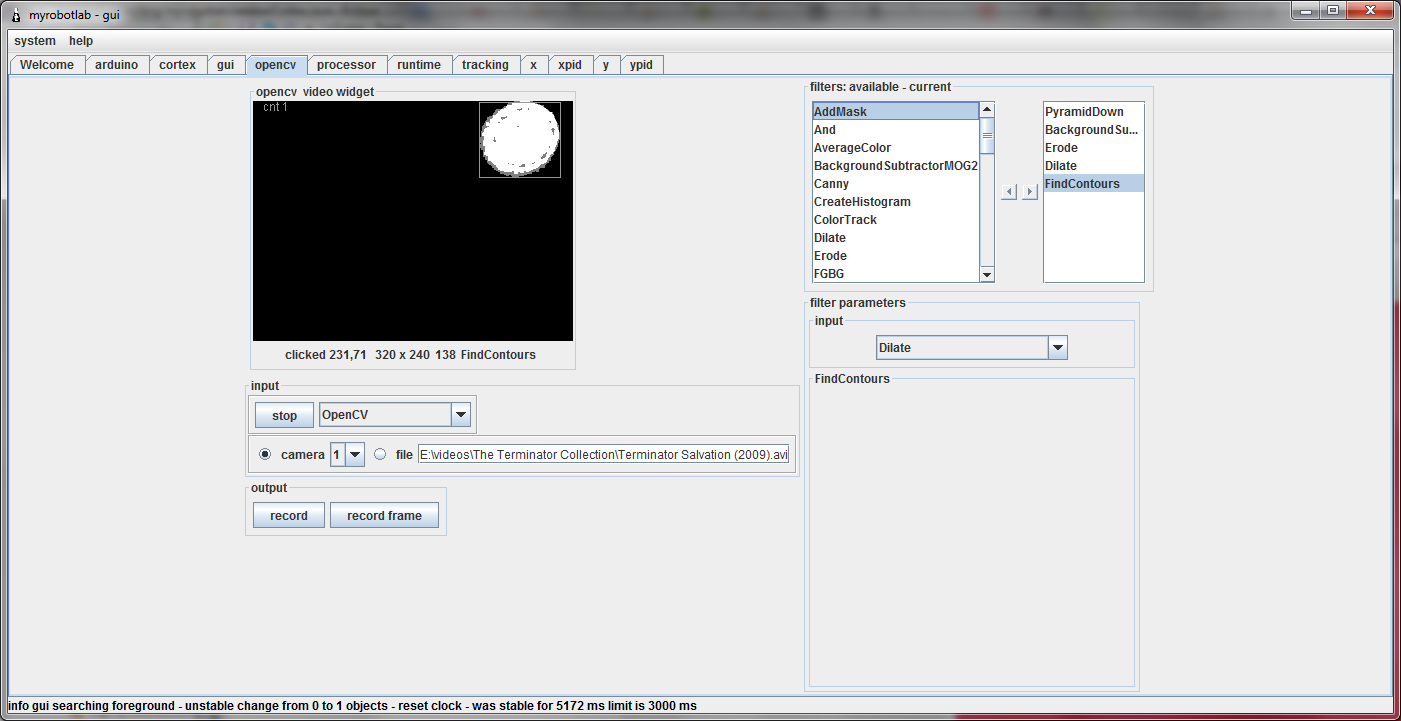

There was another huge update to the OpenCV service.

Previously, all filters were processed in a single list-like series. Now any filter in the list can take the output of any other filter as its input. This makes for a much more powerful processing system. Images and masks can be forked off, then recombined with any other image in the processing list.

Also, the data from the filters was previously saved in a haphazard way. Now it's very structured and efficient: all data from all filters can be bundled and sent out as a single message to any service.
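Here is a minimal sketch of that arrangement. It assumes each filter is a named function that declares which earlier output or outputs it reads, and that the bundled message is a dictionary keyed by filter name. The filter names, the `run_pipeline` helper, and the use of plain lists of RGB tuples instead of real OpenCV images are all illustrative assumptions.

```python
from typing import Any, Callable, Dict, List, Tuple

# (name, input names, function): a filter can read any earlier output by name.
Filter = Tuple[str, List[str], Callable[..., Any]]


def run_pipeline(frame: Any, filters: List[Filter]) -> Dict[str, Any]:
    """Run filters in order and return every filter's output bundled in one dict."""
    outputs: Dict[str, Any] = {"input": frame}
    for name, sources, fn in filters:
        outputs[name] = fn(*(outputs[s] for s in sources))
    return outputs


filters: List[Filter] = [
    ("gray", ["input"], lambda img: [sum(px) // 3 for px in img]),
    ("mask", ["gray"], lambda g: [1 if v > 100 else 0 for v in g]),
    # Fork: combine the mask with the original color input, not the gray image.
    ("addMask", ["input", "mask"],
     lambda img, m: [px if on else (0, 0, 0) for px, on in zip(img, m)]),
]

message = run_pipeline([(200, 180, 160), (10, 20, 30)], filters)
print(message["addMask"])  # [(200, 180, 160), (0, 0, 0)]
```

Because every output is kept under its own name, the whole dictionary can be sent to another service as one message.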

Very nice...

Above is a (simple & controlled) example of this. The following filters were used:

- input: just the raw input

- pyramidDown: reduces the size

- mog2: a background subtractor

- erode and dilate: remove noise

- find contours: finds blobs

- addMask (new filter): combines two images to get a colored, segmented object
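Erode followed by dilate is the classic morphological "opening". Erosion deletes foreground specks smaller than the neighbourhood, and dilation grows the surviving blobs back to roughly their original size. The following is a minimal pure-Python sketch on a tiny binary mask, assuming a 3x3 square neighbourhood. In the real pipeline these would be OpenCV's erode and dilate on a full-size mask. `add_mask` is a hypothetical stand-in for the addMask filter, shown here on a grayscale image.

```python
from typing import List

Mask = List[List[int]]   # binary mask, 1 = foreground
Image = List[List[int]]  # grayscale image, same size as the mask


def _morph(mask: Mask, keep_if_all: bool) -> Mask:
    """3x3 erosion (keep_if_all=True) or dilation (keep_if_all=False)."""
    rows, cols = len(mask), len(mask[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Neighbourhood clipped at the image border.
            neighbours = [mask[rr][cc]
                          for rr in range(max(r - 1, 0), min(r + 2, rows))
                          for cc in range(max(c - 1, 0), min(c + 2, cols))]
            out[r][c] = int(all(neighbours)) if keep_if_all else int(any(neighbours))
    return out


def erode(mask: Mask) -> Mask:
    return _morph(mask, keep_if_all=True)


def dilate(mask: Mask) -> Mask:
    return _morph(mask, keep_if_all=False)


def add_mask(image: Image, mask: Mask) -> Image:
    """Keep image pixels where the mask is 1 and black (0) elsewhere."""
    return [[px if m else 0 for px, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]


mask = [[0] * 7 for _ in range(7)]
for r in range(2, 5):
    for c in range(2, 5):
        mask[r][c] = 1  # a 3x3 "object"
mask[0][6] = 1          # a single noisy pixel

opened = dilate(erode(mask))
image = [[10 * r + c for c in range(7)] for r in range(7)]
segmented = add_mask(image, opened)
print(opened[0][6], opened[3][3], segmented[3][3])  # 0 1 33
```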

Woohoo !

Next steps? Send this data from OpenCV to the VisualCortex, so it can store it in memory and start building relationships to it, like the word ... "Cup" :D

This is the beginning of a service which will orchestrate several other services to "learn" about its environment. This is a development project, so many of the ideas are in "flux", but the general outline will initially follow this process flow. At the moment it is catering to a "grab a specific object" objective, but this can be augmented in the future.

- initial calibration - determine fps, latency, feedback control delta, field of view, resolution, etc - (save)

- load serialized memory - <from last or foreign sessions>

- move head to known location & heading

- if no data then learn the background - scene is now considered all background

- if data exists load it and compare previously known background with current scene

- if movement - capture data from movement - wait for stabilization

- isolate differences - make masks of the image - masks will segment the original frame into object segments - put into memory

- memorize differences and process images

- query memory

- if in memory and the % match is good - offer a guess (yes | no)

- if no data or no match - ask resources (question "what is it?" - query email, web, other) - attempt to establish a relationship

- load data and relationships into memory

- foreground becomes background

- prepare for next event
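The event-handling part of the flow above reads like a state machine. The following is a minimal sketch of that part, from learning the background through to preparing for the next event. The state names, keyword arguments, and the 0.8 "good match" threshold are hypothetical. Calibration, loading serialized memory, moving the head, and comparing against a previously saved background are left out.

```python
from enum import Enum, auto
from typing import Optional


class State(Enum):
    LEARN_BACKGROUND = auto()
    WAIT_FOR_MOVEMENT = auto()
    WAIT_FOR_STABLE = auto()
    QUERY_MEMORY = auto()
    OFFER_GUESS = auto()
    ASK_RESOURCES = auto()
    MERGE_INTO_BACKGROUND = auto()


GOOD_MATCH = 0.8  # hypothetical "% match is good" threshold


def next_state(state: State, *, background_learned: bool = False, movement: bool = False,
               stable: bool = False, match_score: Optional[float] = None) -> State:
    """One step of the process flow, driven by what was just observed."""
    if state is State.LEARN_BACKGROUND:
        return State.WAIT_FOR_MOVEMENT if background_learned else state
    if state is State.WAIT_FOR_MOVEMENT:
        return State.WAIT_FOR_STABLE if movement else state
    if state is State.WAIT_FOR_STABLE:
        # Isolate differences, make masks, memorize, then look the object up.
        return State.QUERY_MEMORY if stable else state
    if state is State.QUERY_MEMORY:
        if match_score is not None and match_score >= GOOD_MATCH:
            return State.OFFER_GUESS
        return State.ASK_RESOURCES  # no data or no good match
    if state in (State.OFFER_GUESS, State.ASK_RESOURCES):
        # Load data and relationships into memory; foreground becomes background.
        return State.MERGE_INTO_BACKGROUND
    # MERGE_INTO_BACKGROUND: prepare for the next event.
    return State.WAIT_FOR_MOVEMENT


s = State.LEARN_BACKGROUND
s = next_state(s, background_learned=True)  # WAIT_FOR_MOVEMENT
s = next_state(s, movement=True)            # WAIT_FOR_STABLE
s = next_state(s, stable=True)              # QUERY_MEMORY
s = next_state(s, match_score=0.3)          # ASK_RESOURCES
print(s.name)  # ASK_RESOURCES
```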

Heh, in the beginning ... there is this

It's the inside of a womb. :)) Actually, it's not... It is what happens when you look at a static background for a while: you lose interest in the scene and consider it all background. This is one of the states in our new Cortex service. It has learned the background and is now waiting for something to happen.


Trying different filters and cameras. This is a "very" cheap $6 camera. The image is out of focus, which is not typically a problem. The issue it has in isolating objects is that pixel luminosity tends to "not return to its original value", or even near it. I think this may be more an issue with the driver, which is trying to "auto-balance" contrast and is doing a very poor job of it.

The current combination of filters seems to work reasonably well. The Gray filter helped considerably. Threshold doesn't do anything useful, but it might if I put it in the correct location in the pipeline.
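One way to work around a driver that shifts overall brightness, as described above, is to rescale each frame's brightness to match the background before differencing, and to threshold the difference rather than the raw frame. The following is a minimal sketch assuming the auto-balance acts as a single global gain, which has not been measured on this camera. It converts to gray with the ITU-R BT.601 luma weights (0.299, 0.587, 0.114) and estimates the gain from the median pixel ratio, so a new object covering a minority of pixels does not skew the estimate much. The function names and the threshold of 25 are hypothetical.

```python
from statistics import median
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B)


def to_gray(frame: List[Pixel]) -> List[float]:
    """Grayscale using the ITU-R BT.601 luma weights."""
    return [0.299 * r + 0.587 * g + 0.114 * b for r, g, b in frame]


def compensate_gain(gray: List[float], background: List[float]) -> List[float]:
    """Undo a global brightness change such as a camera auto-balance step."""
    ratios = [b / g for g, b in zip(gray, background) if g > 0]
    gain = median(ratios) if ratios else 1.0
    return [g * gain for g in gray]


def foreground_mask(gray: List[float], background: List[float],
                    threshold: float = 25.0) -> List[int]:
    """Threshold the absolute difference from the background, not the raw image."""
    return [1 if abs(g - b) > threshold else 0 for g, b in zip(gray, background)]


background = [100.0] * 5
frame = [60.0, 60.0, 200.0, 60.0, 60.0]  # driver darkened everything; object at index 2
print(foreground_mask(frame, background))                              # [1, 1, 1, 1, 1]
print(foreground_mask(compensate_gain(frame, background), background))  # [0, 0, 1, 0, 0]
```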



Wow, this is going to be incredible! I just can't wait to get a little bit of time to process this.

Cortex

Hmmm.... I like the description and feature actions. Really will expand the capability of the wandering minstrel.


I think you got something there Bstott ...

Computer-generated songs & stories... or is that too much like formula writing? :)