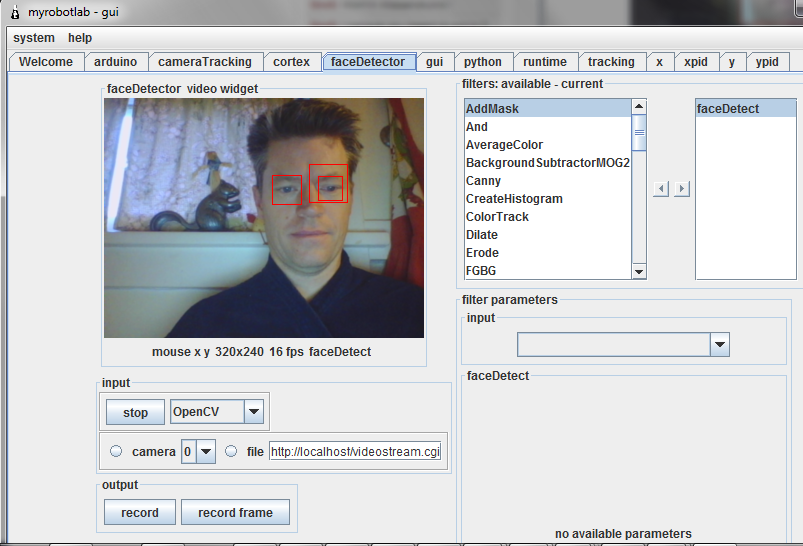

Trying a different Haar classifier. There seem to be several pre-made ones: face, eye, full body, profile, mcs eye pair, upper body, lower body... Makes me wonder about creating new classifiers...


Little updates - changed units to frames per second (FPS), and the readout now updates at a reasonably sane rate.

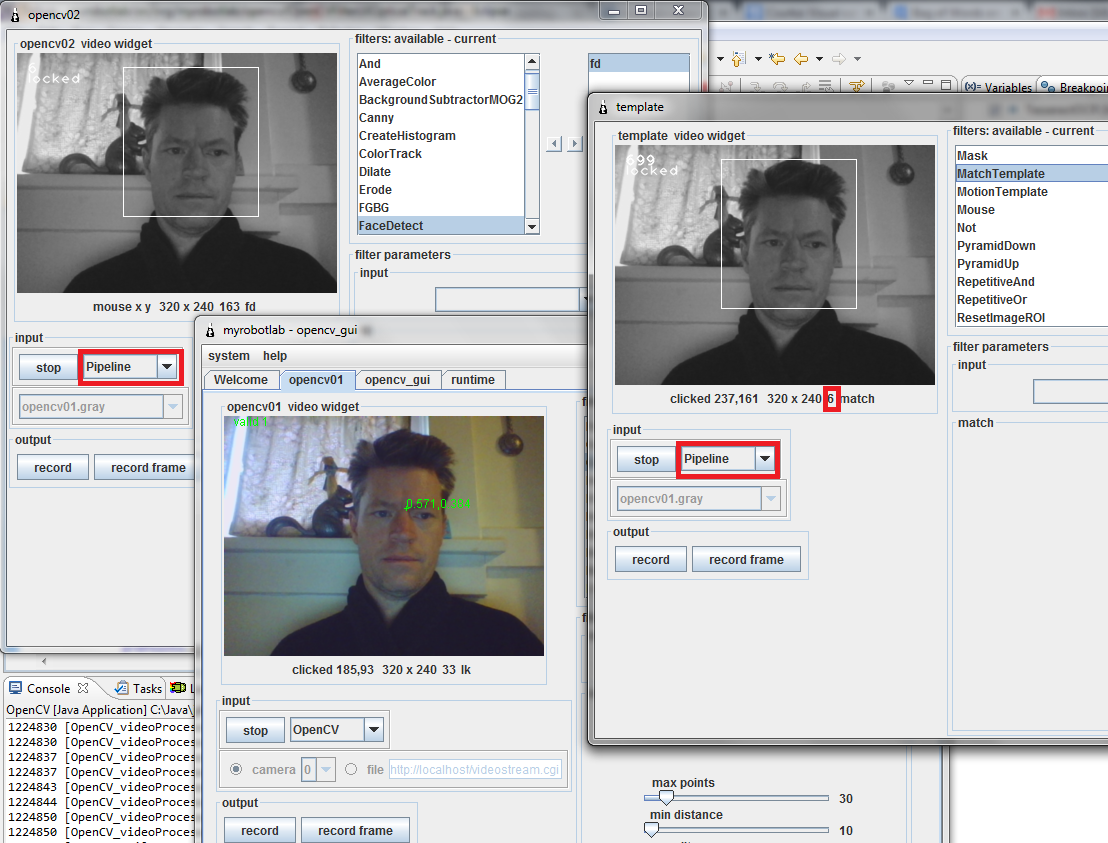

Here you can see the FaceDetect is 8 fps & the camera + lk optical track is 32 fps. So face detect should be the pipeline without the camera. Next a new filter which takes rectangle coordinates (from face detect for example) and publishes template images - Cortex will then put them in its tree of memory.

Here is an update on the pipelining process in OpenCV. Any video stream filter's output is now accessible as input to another filter, even in a different service.

To demonstrate - here are 3 OpenCV instances. The middle one (opencv01) is the only one getting data from the camera - it does a pyramid down, lkoptical, and gray filter.

The other two, opencv02 & template, which do face detect and template matching respectively, both use the Pipeline grabber to hook into the output of the gray filter from opencv01.

For the most part it all seems to be working very well. HOWEVER, the 6 ms reading in the template match is a bit of a problem.

Long story - there is a big data structure called VideoSources, and it is shared between all OpenCV instances. This is good because it allows access from any opencv service to any other opencv service. Since they are all operating on different threads, there has to be coordination between them all to happily share this global data!

Sharing between threads can get complicated - Initially I was using LinkedBlockingQueue - which is great. One thread puts the video frame on (opencv01), and another will take it off like a regular queue. Opencv02 & template are the consumers.

Problems : there is 1 frame put on - but 2 consumers - who gets it? This means copies have to be made of each frame for each consumer.
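To make the "who gets it?" problem concrete, here is a rough sketch of the copy-per-consumer approach: one `LinkedBlockingQueue` per consumer, with the producer putting a separate copy of each frame on every queue. The class and method names (`FrameFanOut`, `subscribe`, `publish`) are made up for illustration and are not the actual VideoSources code, and an `int[]` stands in for a real image.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical sketch: names are illustrative, not the real VideoSources API.
class FrameFanOut {
    // One queue per consumer, so every consumer sees every frame.
    private final List<LinkedBlockingQueue<int[]>> consumers = new CopyOnWriteArrayList<>();

    // Register a consumer and hand back its private queue.
    LinkedBlockingQueue<int[]> subscribe() {
        LinkedBlockingQueue<int[]> queue = new LinkedBlockingQueue<>();
        consumers.add(queue);
        return queue;
    }

    // Publish a frame: each consumer receives its own copy (int[] stands in for an image).
    void publish(int[] frame) {
        for (LinkedBlockingQueue<int[]> queue : consumers) {
            queue.offer(frame.clone());
        }
    }

    public static void main(String[] args) throws InterruptedException {
        FrameFanOut gray = new FrameFanOut();                      // plays the gray filter output
        LinkedBlockingQueue<int[]> faceDetect = gray.subscribe();  // plays opencv02
        LinkedBlockingQueue<int[]> template = gray.subscribe();    // plays template
        gray.publish(new int[] {1, 2, 3});
        int[] a = faceDetect.take();
        int[] b = template.take();
        // Same pixel values, but two distinct array objects.
        System.out.println("distinct copies: " + (a != b));
    }
}
```

The cost is visible right in `publish`: every extra consumer means one more copy of every frame, which is what makes this approach expensive for video.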

I thought I'd try to make it much simpler: throw out the blocking queue and just have a simple reference. It works great! But... if you look at the template speed, it says it takes only 6 ms to process a frame. Yet the camera feed delivers a frame every 33 ms, so how is this possible? The reason is that the template stack works quickly, and since it just has a simple reference to a frame, it processes frames quicker than the camera & opencv01 can produce them. So it's re-processing the same image multiple times! :P

Hmmmmm...
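One way to at least make the re-processing visible, sketched here under my own assumptions (this is not what the code currently does): stamp each frame with a sequence number when the producer swaps the shared reference, and have the consumer skip any frame whose number it has already seen. `LatestFrame`, `Stamped`, and `TemplateConsumer` are hypothetical names, a single producer thread is assumed, and an `int[]` again stands in for an image.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical sketch of a shared "latest frame" reference with a sequence stamp.
class LatestFrame {
    // Immutable pair: frame data plus the order in which it was produced.
    static final class Stamped {
        final long seq;
        final int[] pixels;

        Stamped(long seq, int[] pixels) {
            this.seq = seq;
            this.pixels = pixels;
        }
    }

    // seq 0 means "no frame produced yet".
    private final AtomicReference<Stamped> latest =
            new AtomicReference<>(new Stamped(0, null));

    // Producer side. Assumes a single producer thread (like opencv01).
    void set(int[] pixels) {
        latest.set(new Stamped(latest.get().seq + 1, pixels));
    }

    // Consumer side: no copy, no blocking, just the current reference.
    Stamped get() {
        return latest.get();
    }
}

class TemplateConsumer {
    private long lastSeq = 0;
    int processed = 0;
    int skipped = 0;

    // One pass of the consumer loop: process only frames not seen before.
    void poll(LatestFrame source) {
        LatestFrame.Stamped current = source.get();
        if (current.seq == lastSeq) {
            skipped++; // same frame as last time (or no frame yet)
            return;
        }
        lastSeq = current.seq;
        processed++; // template matching would happen here
    }

    public static void main(String[] args) {
        LatestFrame gray = new LatestFrame();
        TemplateConsumer template = new TemplateConsumer();
        gray.set(new int[] {1, 2, 3});
        // A fast consumer polling 5 times per produced frame.
        for (int i = 0; i < 5; i++) {
            template.poll(gray);
        }
        System.out.println("processed=" + template.processed + " skipped=" + template.skipped);
    }
}
```

With a 6 ms consumer and a 33 ms producer, most polls would land in the `skipped` branch. This only detects the repeats, though. The consumer still spins, so it would need to sleep or block until a new frame arrives.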

References :