So the Kinect stuff is not quite ready for prime time... If you have a working MRL install, you can do the following to get MRL + Kinect (stuff):

Download this zip

https://drive.google.com/file/d/0BwldU9GvnUDWdXliYTFSWVozYlE/edit?usp=sharing

Unzip the 2 directories and drop them into your (mrl install directory)/libraries/native/x86.64.windows/

So that you'll have:

(mrl install directory)/libraries/native/x86.64.windows/NITE2

(mrl install directory)/libraries/native/x86.64.windows/win64

Also copy the SimpleOpenNI64.dll into the (mrl install directory)/libraries/native/x86.64.windows/

Copy the SimpleOpenNI.jar into (mrl install directory)/jar
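If something doesn't load, a quick sanity check is to confirm that everything landed where the steps above say it should. This is a small, hypothetical helper (not part of MRL) that only checks that the listed files and folders exist under your install directory; the example path `C:\mrl` is an assumption, so swap in your own.

```python
import os

# Relative paths taken from the install steps above; the helper itself is
# hypothetical and only checks that the files/folders exist.
NATIVE_DIR = os.path.join("libraries", "native", "x86.64.windows")
EXPECTED = [
    os.path.join(NATIVE_DIR, "NITE2"),
    os.path.join(NATIVE_DIR, "win64"),
    os.path.join(NATIVE_DIR, "SimpleOpenNI64.dll"),
    os.path.join("jar", "SimpleOpenNI.jar"),
]


def missing_kinect_files(mrl_dir):
    """Return the expected Kinect paths that are NOT present under mrl_dir."""
    return [p for p in EXPECTED
            if not os.path.exists(os.path.join(mrl_dir, p))]


# Usage: point it at your MRL install directory (this path is only an example)
for path in missing_kinect_files(r"C:\mrl"):
    print("missing: " + path)
```

An empty result means all four pieces are in place; it says nothing about whether the native libraries actually load.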

WHOOOHOO! We got angular data for the omoplate & bicep servos..

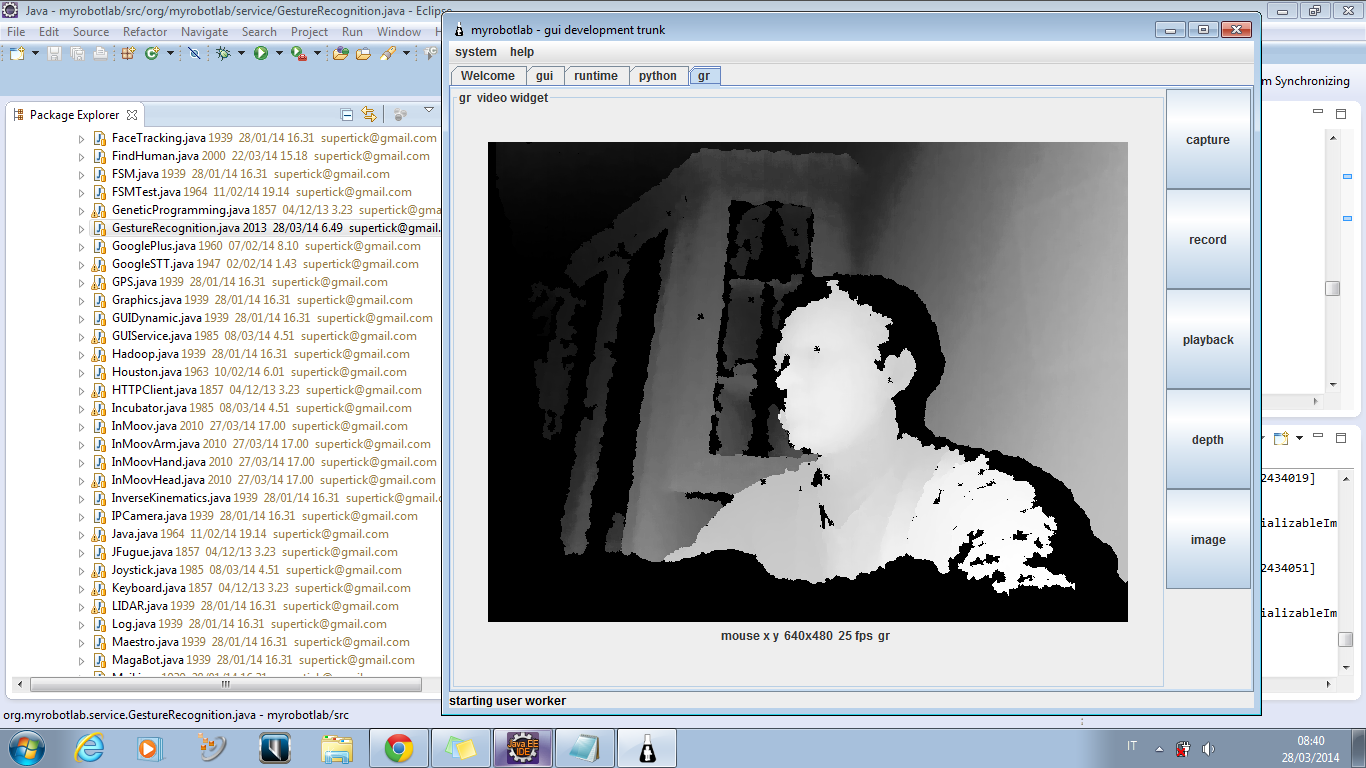

I'll have to save off a series of pictures so we can analyze them, but this single frame (taken in a silly position while grabbing a screen capture :P) gives:

- right bicep (elbow): 97
- right omoplate: 6
- left omoplate: 38
- left bicep (elbow): 178


Update - the service name is no longer GestureRecognition... it is now OpenNI.

A Skeleton's data is being filled and published, and you can get to it in Python with the above script...

The Skeleton's parts should be pretty straightforward. They are float vectors - PVectors (from Processing).

I'm printing out just the head xyz point - this is an absolute Cartesian coordinate system (I don't know exactly where the origin is).

Others would be skeleton.rightShoulder.x, skeleton.rightElbow.x, skeleton.rightHand.x, etc. The last value is supposed to be quality, but I have not seen it vary at all.
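Here is a sketch of the access pattern for pulling several joints at once. In MRL you'd get the real Skeleton object from the OpenNI service (via the script mentioned above); since that isn't reproduced here, the `PVector` and `Skeleton` classes below are hypothetical stand-ins. Only the attribute names (`head`, `rightShoulder`, `rightElbow`, `rightHand`, `.x/.y/.z`) come from this post, and the coordinates are made up.

```python
from collections import namedtuple

# Hypothetical stand-ins for the published Skeleton and its PVector joints;
# only the attribute names (head, rightShoulder, ..., x/y/z) come from the post.
PVector = namedtuple("PVector", "x y z")


class Skeleton(object):
    def __init__(self, **joints):
        self.__dict__.update(joints)


JOINTS = ("head", "rightShoulder", "rightElbow", "rightHand")


def joint_positions(skeleton, names=JOINTS):
    """Return {joint name: (x, y, z)} for each named joint the skeleton carries."""
    out = {}
    for name in names:
        v = getattr(skeleton, name, None)
        if v is not None:          # skip joints that aren't present
            out[name] = (v.x, v.y, v.z)
    return out


# Made-up coordinates, just to show the access pattern
s = Skeleton(head=PVector(-120.0, 310.0, 1800.0),
             rightHand=PVector(200.0, -50.0, 1650.0))
for name, (x, y, z) in sorted(joint_positions(s).items()):
    print("%s: x=%.1f y=%.1f z=%.1f" % (name, x, y, z))
```

Using `getattr` with a default means a joint that isn't there is simply skipped rather than raising an error.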

Have Fun :)


Look, I found a skeleton!!! :D Looks like I got most of the pipes hooked up. Data is flowing in and out of SimpleOpenNI. The skeleton does crazy dancing when it can't find my real legs. SimpleOpenNI was designed specifically for Processing, but I've hacked it up pretty well.

This is a summary of the work I've done and what I've learned.

- OpenNI & Nite can be a PITA to work with - there are many pieces, native files, jni files, jar files, drivers.

- SimpleOpenNI has done a spectacular job of packaging all the stuff up and trying to make the shaky platform stable

- SimpleOpenNI was made for the Java application Processing.

- It still takes vector math outside of NITE to make a skeleton.

- SimpleOpenNI was not designed to operate with "any" Java application .. "only" Processing

- It would be "nice", I think, if SimpleOpenNI was designed to work with "any" Java application - and Processing could use it as it did in the past.

- Point cloud is currently broken, since I'm using SimpleOpenNI

- There is still a lot of work to be done :P
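To give a flavor of the "vector math outside of NITE" needed to build a skeleton, here is a minimal sketch that turns a set of joint positions into bones with lengths. The bone list is hypothetical: `head`, `rightShoulder`, `rightElbow` and `rightHand` appear in this thread, while `neck` and the `left*` names are assumed by analogy. Bones with a missing joint are skipped instead of being drawn to a bogus point, which is one way to keep a skeleton from dancing when the legs aren't found.

```python
import math

# Hypothetical bone list: pairs of joint names. Only some of these names
# appear in the post; the rest are assumed by analogy.
BONES = [
    ("head", "neck"),
    ("neck", "leftShoulder"), ("leftShoulder", "leftElbow"),
    ("leftElbow", "leftHand"),
    ("neck", "rightShoulder"), ("rightShoulder", "rightElbow"),
    ("rightElbow", "rightHand"),
]


def bone_segments(joints, bones=BONES):
    """Turn {joint: (x, y, z)} into [(joint_a, joint_b, length)] for every
    bone whose two joints are both present; other bones are skipped."""
    segs = []
    for a, b in bones:
        if a in joints and b in joints:
            length = math.sqrt(sum((p - q) ** 2
                                   for p, q in zip(joints[a], joints[b])))
            segs.append((a, b, length))
    return segs


arm = {"rightShoulder": (0.0, 0.0, 0.0),
       "rightElbow": (0.0, -300.0, 0.0),
       "rightHand": (0.0, -300.0, 400.0)}
for a, b, length in bone_segments(arm):
    print("%s -> %s: %.1f" % (a, b, length))
```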


Some of the parts mapped out

LOOK ! - when the robot moves .. it makes you move :D

UPDATE 03.28.2014

This is what I got in the debugger... gr (GestureRecognition) works, but with no skeleton tracking.

OpenNI 2 - Cool idea - totally Jenga software

NITE 2 - Impressive - very Jenga distribution

SimpleOpenNI - great project to control Jenga software distribution - but uses Processing (Big Big Project)

+ Elvish Hack Job - bits bytes and parts flying all over = what may be working Gesture Recognition in the near future......

Cons - it's very ugly at the moment. I haven't tried NITE gesture recognition yet, but I don't expect too much trouble. It pauses occasionally, and I don't know where that is coming from. It needs optimizations. I also need to organize it all and get SimpleOpenNI to work with all platforms in its modified state...

Pros - it uses SimpleOpenNI AND I carved Processing out... (someday I'll make a service for Processing, but we need GestureRecognition for MRL). And SimpleOpenNI has just been BORGED!

RESISTANCE IS FUTILE !

Reference

Interesting video on the Microsoft Kinect SDK - could be another service to borg in :)


Whooo hooo... I'm waiting for a release to test :) Can't wait!!!

You da man!

SWEET!


Very cool progress on that field!!


Ahahaha... you're fast, neo! ...

Ya... it finds users but doesn't skeleton-track them... but I think I know why...

We are clang clang clanging on it now...

Awesome Skeleton

Man I can hardly wait to get mine going with all the new things you are adding.

Interesting work.


Whooo hooo... Skeleton Tracking is worky for me too... it goes crazy if it finds only my arms and not my legs.... but it's really cool :)

How can I access the coordinates of each joint???


Whoo Hooo... I can see each joint printed on the screen... Now how can we access each 3D point of the joints (for example, the left hand) in Python?


Almost ready... the labels were done manually, as a map for myself to figure out what data is coming from OpenNI / NITE.

I'm making a Skeleton object which will correspond to what is available - each joint will be a PVector.

A PVector is a copy of the "Processing" vector class - you can see Processing's Javadoc for it here - http://processing.org/reference/javadoc/core/processing/core/PVector.ht…

It will be set with the 3D coordinates of the point at the end of each line of the skeleton.
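Once each joint is a 3D vector, joint angles fall out of a dot product. This is a minimal Python stand-in, not Processing's class: method names loosely mirror PVector's `sub`, `mag`, `dot` and static `angleBetween`, but one difference is called out in the comments. `elbow_angle_deg` is a hypothetical helper. Because it works in 3D, it should also pick up arm rotation out of the camera plane.

```python
import math


class PVector(object):
    """Minimal Python stand-in for Processing's PVector (x, y, z floats).
    Method names loosely mirror sub/mag/dot/angleBetween; illustration only."""

    def __init__(self, x=0.0, y=0.0, z=0.0):
        self.x, self.y, self.z = float(x), float(y), float(z)

    def sub(self, other):
        # Returns a NEW vector; Processing's instance sub() modifies in place
        return PVector(self.x - other.x, self.y - other.y, self.z - other.z)

    def dot(self, other):
        return self.x * other.x + self.y * other.y + self.z * other.z

    def mag(self):
        return math.sqrt(self.dot(self))

    @staticmethod
    def angle_between(a, b):
        """Angle in radians between a and b (0 = same direction, pi = opposite)."""
        denom = a.mag() * b.mag()
        if denom == 0:
            return 0.0
        # Clamp so rounding can't push the cosine outside [-1, 1]
        c = max(-1.0, min(1.0, a.dot(b) / denom))
        return math.acos(c)


def elbow_angle_deg(shoulder, elbow, hand):
    """Hypothetical helper: interior elbow angle in degrees, 180 = straight arm."""
    upper = shoulder.sub(elbow)   # vector from elbow toward shoulder
    fore = hand.sub(elbow)        # vector from elbow toward hand
    return math.degrees(PVector.angle_between(upper, fore))


print(elbow_angle_deg(PVector(0, 0, 0), PVector(0, -300, 0), PVector(0, -300, 300)))
```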


YAY!!! CAN'T WAIT TO TEST... GOT TO GO TO WORK NOW... BUT I'LL TEST TONIGHT!!!

Cool!

I'm guessing the 0,0,0 point is the Kinect sensor. I'm guessing X is left or right of center, and a negative number would make sense for your head being left of center. Y is probably vertical (above center?) and Z is distance/depth. So now all we need is a way to make the hand/arm point along a vector to that PVector, and then Robocop-style targeting will be complete. Then we'll need a ballistics table for various armaments and their corresponding projectile drop over distance. Lasers are always best because they're WYSIWYG, even for submerged targets or reflections of targets.
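If those axis guesses hold (origin at the sensor, X = left/right, Y = up/down, Z = depth), pointing at a joint reduces to two angles. This sketch computes pan/tilt in degrees from an origin point toward a target. The axis convention is the guess above, not something confirmed by OpenNI. `aim_angles` is a hypothetical helper, and converting from the sensor's frame to the robot's own frame is not handled.

```python
import math


def aim_angles(target, origin=(0.0, 0.0, 0.0)):
    """Pan/tilt in degrees to point from origin toward target.

    Assumes the guessed axes: X = left/right, Y = up/down, Z = depth.
    Pan is positive toward +X, tilt is positive toward +Y."""
    dx = target[0] - origin[0]
    dy = target[1] - origin[1]
    dz = target[2] - origin[2]
    pan = math.degrees(math.atan2(dx, dz))
    tilt = math.degrees(math.atan2(dy, math.hypot(dx, dz)))
    return pan, tilt


# Example: aim from a (made-up) shoulder position at a (made-up) head position
pan, tilt = aim_angles((-120.0, 310.0, 1800.0), origin=(150.0, 200.0, 1700.0))
print("pan=%.1f tilt=%.1f" % (pan, tilt))
```

`atan2` is used instead of a plain `atan` so the sign stays correct on either side of center.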

- Laser: 0
- AirSoft: 2mm/1000mm
- Nerf pistol: 20mm/1000mm
- Squirt gun: 60mm/1000mm
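As a toy version of that ballistics table, here is a sketch that raises the aim point by the expected drop. It takes the per-1000 mm figures above at face value as a linear rule of thumb. Real projectile drop grows faster than linearly with range, so treat these numbers as rough, and only near the distances they were guessed for. Vertical Y is again the guessed axis, and `compensated_aim` is a hypothetical helper.

```python
import math

# Drop in mm per 1000 mm of range, from the figures listed above.
# Linear rule of thumb only: real drop grows faster than linearly with range.
DROP_PER_1000MM = {
    "Laser": 0.0,
    "AirSoft": 2.0,
    "Nerf pistol": 20.0,
    "Squirt gun": 60.0,
}


def compensated_aim(target, weapon, muzzle=(0.0, 0.0, 0.0)):
    """Return a new aim point raised (+Y) by the expected drop to the target.

    target/muzzle are (x, y, z) in mm, assuming Y is vertical."""
    distance = math.sqrt(sum((t - m) ** 2 for t, m in zip(target, muzzle)))
    drop = DROP_PER_1000MM[weapon] * distance / 1000.0
    return (target[0], target[1] + drop, target[2])


print(compensated_aim((0.0, 0.0, 2000.0), "Nerf pistol"))  # -> (0.0, 40.0, 2000.0)
```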

law of cosines for skeleton tracking...

I've been working through the skeleton tracking stuff too. I have come up with the following script (based on the law of cosines) to compute the angle of the left bicep servo for the InMoov, so it can track my motions when I'm standing in front of it. The angle returned is 180 when the arm is straight. I am mapping the computed angle range of 180 to 0 degrees onto 0 to 90 degrees for the bicep servo to give a reasonable response.

This only computes it in 2D space and doesn't take rotation into account... But hey, it's a start...
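The script itself isn't reproduced here, so this is an independent sketch of the same idea, not that script. It takes 2D (x, y) shoulder/elbow/hand points, gets the three side lengths, and applies the law of cosines, c² = a² + b² − 2ab·cos(C), to find the elbow angle (180 = straight). It then linearly maps 180..0 onto 0..90. Reading the mapping as 180 → 0 and 0 → 90 is an assumption, so swap the output range if your servo turns the other way. Both function names are hypothetical.

```python
import math


def _dist(p, q):
    return math.hypot(p[0] - q[0], p[1] - q[1])


def elbow_angle_2d(shoulder, elbow, hand):
    """Interior elbow angle in degrees from 2D (x, y) points via the law of
    cosines: c^2 = a^2 + b^2 - 2ab*cos(C). 180 means the arm is straight."""
    a = _dist(shoulder, elbow)   # upper arm
    b = _dist(elbow, hand)       # forearm
    c = _dist(shoulder, hand)    # side opposite the elbow angle
    if a == 0 or b == 0:
        raise ValueError("shoulder/elbow/hand points coincide")
    cos_c = (a * a + b * b - c * c) / (2.0 * a * b)
    cos_c = max(-1.0, min(1.0, cos_c))  # guard against rounding
    return math.degrees(math.acos(cos_c))


def map_range(v, in_min, in_max, out_min, out_max):
    """Linearly map v from [in_min, in_max] onto [out_min, out_max]."""
    return out_min + (v - in_min) * float(out_max - out_min) / (in_max - in_min)


# Arm bent at a right angle: 180 (straight) -> 0 and 0 (fully bent) -> 90
angle = elbow_angle_2d((0, 0), (0, -300), (300, -300))
servo = map_range(angle, 180, 0, 0, 90)
print("elbow=%.1f servo=%.1f" % (angle, servo))
```

Since only x and y are used, a forearm pointing toward or away from the sensor shows up as a shorter limb and throws the angle off. That is the rotation limitation mentioned above.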